Your First Step into Data Science: Terminologies, Pipelines, and Beyond

- 1.1 Foundations of Data Science, ML & AI

- 1.2 History and Evolution of Data Science & AI

- 1.3 What is Machine Learning?

- 1.4 What is Deep Learning?

- 1.5 AI vs ML vs DL vs Data Science — Conceptual Landscape

- 1.6 Types of Learning Paradigms

- 1.7 Categories of Machine Learning Models

- 1.8 Key Concepts and Terminologies

  - What exactly is a “feature”? And what are we trying to predict?

  - What is an “instance”?

  - Overfitting, Underfitting, and the Art of Generalization

  - Bias–Variance Tradeoff: A Fundamental Dilemma

  - Loss Function vs Cost Function: How Models Learn

  - Evaluation Metrics: A Preview

  - How We Split the Data: Train, Validation, Test

- 1.9 Common Data Types and Formats in ML

- 1.10 Machine Learning Pipeline: A Practical Preview

- Closing Thoughts: The Ground Beneath Our Models

In 2021, a revolution happened in molecular biology — powered not by test tubes or microscopes, but by code.

For decades, one of the biggest unsolved problems in biology was this: given a chain of amino acids (the building blocks of proteins), can we predict what three-dimensional shape it will fold into? This isn’t just a theoretical puzzle — a protein’s shape determines how it behaves, how it interacts with other molecules, and whether it causes or cures disease.

Biologists had tried everything — experimental techniques like X-ray crystallography, slow and expensive computational simulations, even global competitions. But accurate protein structure prediction remained incredibly hard.

Then DeepMind’s AlphaFold happened.

Using a deep learning architecture inspired by transformers (the same family of models behind GPT and BERT), AlphaFold learned to predict protein structures with astonishing accuracy — in some cases, rivaling lab-based methods. When the results were released, scientists around the world were stunned. One researcher described it as “solving a problem that’s been open for 50 years.”

By 2022, AlphaFold had released predictions for nearly every known protein — over 200 million of them — and made them freely available to the scientific community. Drug discovery pipelines changed. Research in virology, immunology, agriculture, and rare diseases sped up overnight. Labs that didn’t have the resources to run expensive physical experiments could now just… download a structure and get to work.

That’s data science in action.

Not hype. Not another app recommending playlists. But a model that opened the floodgates for a new era of science — by learning from data, generalizing across biological systems, and solving a problem that humans had chipped away at for half a century.

This is the kind of impact data science can have.

Beyond the buzzwords and hype, it’s about solving real problems in the real world — with messy, imperfect data, but with meaningful results. It’s about making decisions better, faster, and more scalable — whether it’s diagnosing eye disease, forecasting energy demand, or catching fraud in financial systems. It’s not always glamorous. Most of it happens behind the scenes. But in the right hands, data becomes more than just numbers — it becomes a tool to tackle real-world problems in healthcare, infrastructure, climate, finance, and beyond.

Over the next few blogs, we’ll embark on a fascinating journey into the world of data science, machine learning, and AI — from the foundational concepts to practical tools, from the math behind the scenes to the systems that power real applications. But before we dive into models and metrics, it’s important to pause and build a strong conceptual foundation.

In the next few sections, we’ll lay down that groundwork — the mental toolkit that will guide us through everything that follows.

Let’s begin.

1.1 Foundations of Data Science, ML & AI

What is Data Science?

If you’ve ever wondered how your streaming app seems to know exactly what you want to watch next, or how banks flag fraudulent transactions in real time, the answer almost always involves data science. But what is data science, really?

At its core, data science is about extracting meaningful insights from data — not just describing what happened, but predicting what might happen next, and even prescribing what to do about it. It’s an interdisciplinary field that combines statistics, computer science, and domain expertise to understand patterns, build models, and drive decision-making.

In contrast to traditional programming — where we write explicit rules — data science is about letting the data guide us. We use algorithms to learn from the past so we can make smarter decisions in the future.

Why Is Data Called “The New Oil”?

The phrase “data is the new oil” has become a cliché, but it captures something important. Like oil, raw data isn’t very useful until it’s refined. It needs cleaning, transformation, modeling — and only then does it power products, decisions, and innovations.

In today’s world, data fuels everything from personalized recommendations and real-time traffic routing to detecting rare diseases and optimizing supply chains. Organizations that can harness data effectively gain a massive competitive edge — much like nations that once controlled oil supplies.

The Interdisciplinary Nature of Data Science

Data science doesn’t belong to a single department. It draws from a mix of skills:

- Statistics for making inferences and quantifying uncertainty

- Computer science for handling large-scale data and implementing algorithms

- Domain knowledge to ask the right questions and interpret results in context

This blend is what makes data science so powerful — and also why it’s not easy to master.

Real-World Applications

Data science isn’t confined to tech companies or research labs. It’s everywhere:

- Fraud detection in banking and e-commerce

- Personalization in advertising, content, and shopping

- Forecasting in finance, weather, demand planning, and healthcare

- Diagnosis support in medical imaging and pathology

- Operational efficiency in logistics, manufacturing, and infrastructure

The common thread? Using data to improve how systems behave in the real world.

The Data Science Project Lifecycle

Though each project looks different depending on the domain and problem, the overall lifecycle tends to follow a familiar structure:

- Problem Formulation: Clarifying the business or scientific question to be solved. What decision are we trying to support? What does success look like?

- Data Collection: Gathering raw data from databases, APIs, sensors, logs, or third-party sources. This might involve structured tables or unstructured formats like text and images.

- Data Preprocessing: Cleaning, filtering, transforming, and validating data to prepare it for analysis. This step is often underestimated but is crucial: noisy or biased data can break even the best models.

- Modeling and Evaluation: Applying statistical or machine learning models to learn from the data. This includes model selection, training, hyperparameter tuning, and evaluating performance using metrics.

- Deployment and Monitoring: Integrating the model into a production system or decision-making pipeline. Once deployed, models need to be monitored for drift, errors, and feedback loops to remain effective over time.

Each step is iterative. Data science isn’t a straight line — it’s a cycle of refining the problem, improving data quality, adjusting models, and rethinking solutions.

1.2 History and Evolution of Data Science & AI

Today, AI headlines speak of machines writing poems, diagnosing diseases, or generating images of people who don’t exist. But these capabilities didn’t appear out of thin air. They’re part of a long, winding journey that began not in cloud labs or research papers — but in philosophy, mathematics, and curiosity about the human mind.

Understanding how we got here is more than just trivia. It shows us how ideas evolve, how limits shift, and why data science — far from being a recent invention — is rooted in questions we’ve been asking for centuries.

Let’s step back and trace this journey.

The Early Sparks: Statistics Meets Computation

In the early 20th century, we had the math — statisticians were already thinking about things like regression, correlation, and uncertainty. But we didn’t yet have machines that could apply these ideas at scale.

That changed with the rise of computing after World War II.

Suddenly, abstract statistical ideas could be programmed, executed, and tested — not on paper, but on real data. Early pioneers began to wonder: Could we teach machines to learn from experience the way humans do? Not just follow instructions, but improve over time?

These weren’t wild speculations. They were the seeds of a new kind of science — one that merged mathematical rigor with computational power. The term “learning” was starting to gain a new meaning.

The Dartmouth Conference: AI is Born

Then came the summer of 1956.

At Dartmouth College in New Hampshire, a group of researchers gathered around a bold proposal: to proceed on the conjecture that “every aspect of learning or any other feature of intelligence can in principle be so precisely described that a machine can be made to simulate it.” This workshop, modest in attendance and monumental in ambition, is now widely regarded as the birth of Artificial Intelligence as a field.

The optimism was sky-high. Researchers believed that within a generation, machines would match human intelligence in reasoning, language, and vision. For a brief moment, it looked like we were just a few steps away from mechanical minds.

But reality had other plans.

The Rule-Based Era: Expert Systems and Early Limitations

By the 1970s, the initial wave of AI excitement had shifted focus. Rather than trying to replicate general intelligence, researchers leaned into expert systems — rule-based programs designed to mimic the decision-making of specialists in narrow fields.

If you could encode a doctor’s reasoning into “if-then” logic, you could automate diagnosis. If you could simulate a mechanic’s troubleshooting flow, you could build a repair assistant.

These systems were powerful — in theory. But they were brittle in practice. They couldn’t handle uncertainty, exceptions, or ambiguity. And most critically, they couldn’t learn. Every rule had to be manually written.

Bottlenecks like this fed the AI winters: periods, most notably in the mid-1970s and again in the late 1980s, when funding dried up, enthusiasm waned, and the field was dismissed as overhyped.

But under the radar, something else was happening.

The Slow Rise of Neural Networks

Inspired loosely by how the brain works, neural networks had been proposed as early as the 1940s. These systems — with layers of “neurons” passing signals to one another — had one key advantage: they could be trained on data, not hard-coded with rules.

But for decades, they struggled. Lacking enough data, limited by hardware, and riddled with training issues, neural nets remained in the shadows — mathematically promising, but not practically useful.

Still, some researchers stuck with them. They refined training techniques, developed backpropagation, and slowly built the groundwork for something that wouldn’t bloom until much later.

The Era of Big Data and Statistical Learning

Meanwhile, the broader field of machine learning — learning patterns from data without being explicitly programmed — was finding traction.

Algorithms like decision trees, support vector machines, and k-nearest neighbors became workhorses of pattern recognition and classification. Unlike expert systems, these models could be trained. They could adapt. And thanks to improving storage and computational resources, they could be run at scale.

By the early 2000s, this approach was thriving — especially in fields like fraud detection, recommendation systems, and early personalization engines.

But even these models had limits. They still struggled with unstructured data — like images, speech, or natural language. That’s where deep learning would eventually change the game.

2012 and the Deep Learning Breakthrough

The tipping point came in 2012, at the ImageNet competition — an annual challenge where models classify objects in photos across 1,000 categories.

That year, a deep convolutional neural network called AlexNet, built by a small team at the University of Toronto, reached a top-5 error rate of about 15%, against roughly 26% for the runner-up. The improvement wasn’t incremental. It was shocking.

And just like that, deep learning went from academic corner project to the new foundation of AI.

Within a few years, Google Translate moved from phrase-based statistical methods to neural models. Speech recognition became accurate enough for everyday use. Image generation crossed into photorealism. Language models exploded in capability. Entire industries (healthcare, entertainment, finance, biology) began integrating deep learning into their pipelines.

From Models to Systems

And then things scaled.

We got better GPUs. We trained larger models. We created platforms like TensorFlow and PyTorch. We built data pipelines and infrastructure to support real-time learning. And slowly, AI moved from isolated research projects into product roadmaps, medical diagnostics, disaster forecasting, even protein structure prediction.

By the time models like AlphaFold and GPT emerged, they weren’t just academic marvels — they were public tools. They reshaped workflows in science, media, and business.

The history of data science and AI isn’t a straight line. It’s a story of setbacks and comebacks, of ideas waiting decades for the right hardware or the right data. But when you zoom out, a clear arc emerges — from handcrafted rules to learning algorithms, from small models to generative giants.

And now, as we stand in a world where models write, speak, predict, and generate, it’s more important than ever to understand where these capabilities came from — not just to use them, but to use them wisely.

In the next section, we’ll build on this history and ask a more focused question: what exactly is machine learning, and how does it differ from the broader umbrella of AI?

Let’s move forward.

1.3 What is Machine Learning?

In the previous sections, we explored the foundations and historical evolution of data science and artificial intelligence. Now, let’s delve into machine learning (ML)—a pivotal subset of AI that has transformed industries and daily life.

Machine Learning: A Subfield of AI

At its core, machine learning is a branch of artificial intelligence focused on developing algorithms that enable computers to learn from and make decisions based on data. Unlike traditional programming, where explicit instructions dictate behavior, ML algorithms identify patterns within data to make predictions or decisions without being explicitly programmed for specific tasks.

This paradigm shift allows systems to adapt and improve over time, handling tasks ranging from image recognition to natural language processing.

Learning Patterns from Data

Consider the task of email spam detection. Traditionally, developers would craft rules to filter out spam—looking for specific keywords or suspicious sender addresses. However, spammers quickly adapt, rendering static rules ineffective.

Machine learning offers a dynamic solution. By analyzing vast datasets of labeled emails (spam and not spam), an ML model learns the distinguishing features of spam messages. Over time, it refines its predictions, adapting to new spam tactics without manual intervention.

This approach exemplifies supervised learning, where models are trained on labeled datasets to predict outcomes for new, unseen data.

Types of Problems ML Solves

Machine learning addresses a diverse array of problems, broadly categorized as:

- Classification: Assigning inputs to predefined categories. Examples include spam detection, image recognition, and sentiment analysis.

- Regression: Predicting continuous values. Applications encompass stock price forecasting, temperature prediction, and sales projections.

- Clustering: Grouping similar data points without predefined labels. Useful in customer segmentation and market research.

- Dimensionality Reduction: Simplifying datasets by reducing the number of variables, aiding in data visualization and noise reduction.

- Anomaly Detection: Identifying unusual patterns or outliers, crucial in fraud detection and system monitoring.

Each of these problem types leverages different ML algorithms and techniques, tailored to the specific nature of the data and desired outcomes.

ML vs. Traditional Programming Logic

Traditional programming relies on explicit instructions: developers write code that dictates every possible scenario the program might encounter. This approach is effective for well-defined tasks but struggles with complexity and variability.

Machine learning, conversely, enables systems to learn from data. Instead of coding rules, developers provide data, and the system identifies patterns and relationships. This distinction is crucial:

- Traditional Programming: Input + Program = Output

- Machine Learning: Input + Output = Program (Model)

This inversion allows ML systems to handle tasks with high variability and complexity, such as language translation, recommendation systems, and autonomous driving.
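
To make the inversion concrete, here is a minimal sketch built on the spam example. The six emails, the function names, and the "keep words seen only in spam" learning rule are all assumptions made up for illustration; real spam filters use far richer models. The point is only the contrast: in the first function a person writes the rule, in the second the rule is derived from (input, output) pairs.

```python
from collections import Counter

# Toy labeled emails, invented for this illustration: (text, is_spam)
TRAIN = [
    ("win a free prize now", True),
    ("free money claim your prize", True),
    ("limited offer win cash", True),
    ("meeting moved to monday", False),
    ("please review the attached report", False),
    ("lunch on friday with the team", False),
]

def rule_based_is_spam(text):
    """Traditional programming: Input + Program = Output (rule written by hand)."""
    words = text.split()
    return "free" in words or "prize" in words

def learn_spam_words(examples):
    """Machine learning in miniature: Input + Output = Program.

    Keeps every word that appears in spam emails but never in non-spam ones.
    """
    spam_counts, ham_counts = Counter(), Counter()
    for text, is_spam in examples:
        (spam_counts if is_spam else ham_counts).update(text.split())
    return {word for word in spam_counts if word not in ham_counts}

def learned_is_spam(text, spam_words):
    """Apply the learned 'program': flag the email if it contains a learned spam word."""
    return any(word in spam_words for word in text.split())

spam_words = learn_spam_words(TRAIN)
new_email = "claim your cash offer"
print(rule_based_is_spam(new_email))           # False: the hand-written rule misses it
print(learned_is_spam(new_email, spam_words))  # True: the learned rule catches it
```

Notice that the learned word set also contains harmless words such as "your", simply because they happened to appear only in the spam examples. That is a small preview of overfitting, and a reminder that a learned rule is only as good as the data behind it.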

1.4 What is Deep Learning?

In recent years, the term deep learning has become nearly synonymous with artificial intelligence. From self-driving cars and real-time translation to ChatGPT and AlphaFold, many of the most breathtaking AI advances today are powered by deep learning. But what exactly is it?

And what makes it “deep”?

From Neural Networks to Deep Learning

At the heart of deep learning are neural networks — algorithms loosely inspired by how the human brain processes information. Each network consists of layers of interconnected units called “neurons,” which pass signals to one another. These networks can learn to map inputs (like an image or a sentence) to outputs (like a label or prediction) by adjusting the strength of those connections during training.

The basic idea of neural networks has been around for decades. But what changed recently is depth — the number of layers stacked between input and output. This is where deep learning gets its name. A shallow network might have just one or two layers. A deep network can have dozens, even hundreds.

Why does depth matter?

Each layer in a deep network learns to detect increasingly abstract representations of the data. In an image classifier, early layers might detect edges, mid-level layers might detect textures or shapes, and deeper layers might recognize eyes, wheels, or faces. This layered approach to representation learning is what allows deep learning to perform so well on complex, high-dimensional data.

Deep vs. Shallow Models

Shallow models — like logistic regression or even small neural nets — work well for problems where relationships are simple or mostly linear. But when data gets messier, more hierarchical, or highly structured (think images, audio, or language), shallow models struggle to capture the underlying patterns.

Deep models, on the other hand, can learn hierarchical feature representations directly from raw inputs. You don’t need to handcraft features like you used to — the model figures them out as part of the learning process.
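
A classic toy case shows why an extra layer matters. The sketch below uses the XOR function (output 1 when exactly one input is 1). The weights are set by hand rather than learned, and the threshold activation and the small weight grid are assumptions chosen to keep the example tiny. It illustrates the idea of intermediate representations, not how real networks are trained.

```python
def step(x):
    """Threshold activation: fire (1) if the weighted input is positive."""
    return 1 if x > 0 else 0

def single_neuron(x1, x2, w1, w2, b):
    """One neuron: weighted sum of two inputs plus a bias, then a threshold."""
    return step(w1 * x1 + w2 * x2 + b)

def two_layer_xor(x1, x2):
    # Hidden layer: two simple detectors built from the raw inputs
    h_or = single_neuron(x1, x2, 1, 1, -0.5)    # fires if at least one input is 1
    h_and = single_neuron(x1, x2, 1, 1, -1.5)   # fires only if both inputs are 1
    # Output layer: combines the detectors into "OR but not AND"
    return single_neuron(h_or, h_and, 1, -1, -0.5)

XOR = {(0, 0): 0, (0, 1): 1, (1, 0): 1, (1, 1): 0}

def single_neuron_solves_xor():
    """Brute-force a grid of weights to see whether any single neuron fits XOR."""
    grid = [i / 2 for i in range(-8, 9)]  # -4.0, -3.5, ..., 4.0
    return any(
        all(single_neuron(x1, x2, w1, w2, b) == y for (x1, x2), y in XOR.items())
        for w1 in grid for w2 in grid for b in grid
    )

print([two_layer_xor(x1, x2) for (x1, x2) in XOR])  # [0, 1, 1, 0]
print(single_neuron_solves_xor())                   # False
```

The hidden layer turns the raw inputs into two intermediate features, and the output neuron only has to combine them. No single threshold neuron can do the same job, because XOR is not linearly separable.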

Of course, depth comes with a cost: more data, more computation, and more careful tuning.

Which leads us to the next point.

The Role of Data Scale and Compute

Deep learning didn’t just take off because of better algorithms. It exploded because of three converging forces:

- Big Data: The web, smartphones, and sensors have produced massive labeled datasets: millions of images, hours of audio, gigabytes of text.

- Compute Power: The rise of GPUs (graphics processing units) allowed for massively parallel computations, perfect for training neural networks. Later, TPUs (Tensor Processing Units) and distributed training frameworks pushed this even further.

- Tooling and Open-Source Ecosystems: Frameworks like TensorFlow, PyTorch, and Keras made building and training deep models more accessible than ever.

Together, these enabled researchers and engineers to build deep models that could finally match — and in some domains, surpass — human performance.

Real-World Applications of Deep Learning

So where is deep learning making a difference today?

- Vision: Object detection, facial recognition, medical image analysis, autonomous driving

- Speech: Voice assistants, transcription services, speaker identification

- Language: Translation, sentiment analysis, large language models, chatbots

- Control Systems: Robotics, industrial automation, reinforcement learning agents

The list keeps growing, and in many of these areas, deep learning has become the default — not just for research but for production systems across industries.

Deep learning represents a significant leap forward — not because it rewrote the rules of machine learning, but because it scaled them up with enough depth, data, and compute to uncover patterns we couldn’t previously reach.

In the next section, we’ll zoom out and look at how all these pieces fit together — AI, ML, deep learning, and data science — and clarify the often-confused relationships between them.

Let’s move on.

1.5 AI vs ML vs DL vs Data Science — Conceptual Landscape

If you’ve been even remotely tuned in to the tech world lately, you’ve likely seen these terms thrown around: AI, machine learning, deep learning, data science — often used interchangeably, and sometimes even inaccurately. But while they overlap, they’re not the same.

Let’s untangle the mess.

The Big Picture: A Nested Landscape

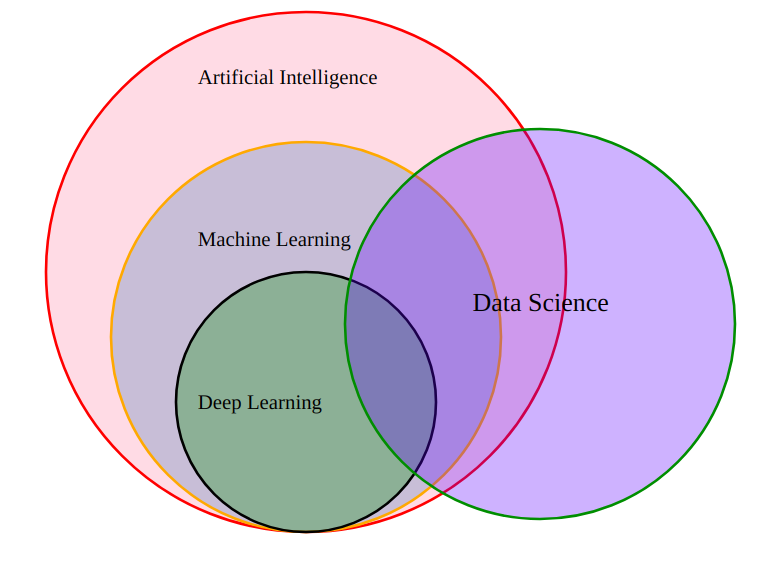

The best way to understand how these fields relate is to imagine nested circles:

- Artificial Intelligence (AI) is the broadest field. It encompasses any method that enables machines to simulate intelligence, whether by rules, search, learning, or logic.

- Machine Learning (ML) is a subset of AI. Instead of relying on hand-coded rules, ML systems learn patterns from data to make decisions or predictions.

- Deep Learning (DL) is a specialized subfield of ML that uses multi-layered neural networks to model complex, high-dimensional data.

Then there’s Data Science — which overlaps with all three, but is not limited to them.

Data Science is the practice of extracting insights from data. It includes:

- statistical analysis

- business intelligence

- experimentation

- model building

- visualization

- and increasingly, machine learning and AI

In short, not all data science is AI, but much of modern AI (especially ML/DL) is a powerful tool in the data scientist’s toolkit.

Figure: Conceptual overlap between AI, ML, Deep Learning, and Data Science [Credit]

Where Do Other Fields Fit?

As data-driven systems mature, a whole ecosystem of supporting disciplines has emerged. Here’s how they fit into the landscape:

- Statistics: The mathematical backbone of data science. It provides the theory behind sampling, inference, uncertainty, and modeling. Traditional statistics is more hypothesis-driven; ML is more data-driven.

- Business Analytics: Often overlaps with data science, but typically emphasizes descriptive and diagnostic analytics: reporting, dashboards, KPIs. Data science tends to lean more predictive and prescriptive.

- Data Engineering: Focuses on building pipelines and infrastructure for collecting, cleaning, and storing data, enabling data scientists and ML engineers to do their work. No models work without reliable data.

- MLOps: A recent but essential field. MLOps stands for Machine Learning Operations: the process of deploying, monitoring, updating, and maintaining ML models in production. It brings together data science, DevOps, and software engineering.

Together, these roles form the modern data and AI stack — each solving a crucial piece of the problem.

Common Misconceptions and Buzzword Demystification

Let’s clear up a few persistent myths:

“AI and ML are the same thing.” Not quite. ML is a part of AI, but not all AI involves learning. Classic AI techniques like rule-based systems or symbolic reasoning don’t involve learning from data.

“Deep learning is always better.” Only sometimes. Deep learning shines with unstructured data (images, audio, text) and massive datasets. But for small datasets or tabular data, classical ML methods often perform better, faster, and with more interpretability.

“Data science is just statistics with Python.” It’s much more than that. While it includes statistics and programming, it also demands critical thinking, communication, experimentation, and a solid grasp of domain context.

“MLOps is just DevOps.” Not exactly. While MLOps borrows principles from DevOps, it introduces unique challenges — like versioning datasets and models, monitoring for concept drift, and retraining pipelines.

Putting It All Together

Imagine building a product that recommends news articles to users:

- Data Engineering sets up the pipelines to collect user click data.

- Data Science explores patterns in that data — which topics trend when, which segments behave similarly.

- Machine Learning models learn what each user likes and predict what they might click next.

- Deep Learning might come in if you’re dealing with text embeddings, NLP, or user behavior modeling.

- MLOps ensures that the models are deployed, monitored, and retrained as preferences evolve.

And across all of this, AI is the umbrella idea — of systems behaving intelligently, adapting to input, and improving performance.

1.6 Types of Learning Paradigms

At a high level, machine learning is about learning from data. But how a model learns — and what kind of data it learns from — can vary widely depending on the task, domain, and resources.

This gives rise to distinct learning paradigms — each with its own assumptions, workflows, and strengths. Understanding these paradigms isn’t just academic — it directly informs how you’ll structure a problem, collect data, and design solutions.

Let’s explore them one by one, with real-world context and concrete practices.

Supervised Learning

What It Is

Supervised learning is like teaching with an answer key. You show the model examples of inputs along with the correct outputs, and ask it to learn a general rule that maps one to the other.

Each training example in supervised learning is a pair: input → correct output (label). The model’s goal is to learn a function that, given a new input, produces an accurate output, even if it has never seen that specific example before.

Examples

- Image classification: You show the model thousands of labeled images — cats, dogs, pandas. Later, it should correctly label a new image it has never seen.

- Loan default prediction: You feed in a customer’s profile — income, age, credit score — along with whether they repaid the loan or not. The model learns to predict future loan outcomes.

- Spam detection: Emails are labeled as “spam” or “not spam”. The model learns which words, patterns, or sender behaviors signal spam.

Key Concepts

- Classification: Output is a category (discrete). E.g., “positive” vs “negative”, or digits 0–9.

- Regression: Output is a number (continuous). E.g., predict the price of a house or the temperature tomorrow.
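
The difference between the two is easiest to see when the same inputs are paired with different kinds of outputs. Below is a minimal sketch using k-nearest neighbors, chosen here only because it fits in a few lines. The house sizes, categories, and prices are invented for illustration.

```python
from collections import Counter

def k_nearest(train, x, k=3):
    """Return the outputs of the k training points whose input is closest to x."""
    ranked = sorted(train, key=lambda pair: abs(pair[0] - x))
    return [output for _, output in ranked[:k]]

def knn_classify(train, x, k=3):
    """Classification: the answer is the most common category among the neighbors."""
    return Counter(k_nearest(train, x, k)).most_common(1)[0][0]

def knn_regress(train, x, k=3):
    """Regression: the answer is the average of the neighbors' numeric outputs."""
    neighbors = k_nearest(train, x, k)
    return sum(neighbors) / len(neighbors)

# Hypothetical training pairs: house size in square metres -> output
size_to_category = [(40, "small"), (55, "small"), (60, "small"),
                    (110, "large"), (130, "large"), (150, "large")]
size_to_price = [(40, 100.0), (55, 130.0), (60, 140.0),
                 (110, 260.0), (130, 300.0), (150, 340.0)]

print(knn_classify(size_to_category, 120))  # large
print(knn_regress(size_to_price, 120))      # 300.0
```

Both functions look up the same three neighbors for a 120 square metre house. The only thing that changes is how the neighbors' outputs are combined: a vote for a category, an average for a number.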

Practices and Workflow

- Data collection: You need labeled examples — this can be expensive and time-consuming.

- Data preprocessing: Clean missing values, encode categories, normalize features.

- Model training: Choose a model (e.g., logistic regression, decision tree, neural net).

- Evaluation: Use metrics like accuracy, F1-score, or RMSE depending on the task.

- Deployment and monitoring: Monitor real-world performance — accuracy can drift as data distribution changes.
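
The metrics named in the evaluation step are simple to compute by hand, which helps in understanding what they reward. The sketch below implements accuracy, F1-score, and RMSE in plain Python. The label vectors are made up, and in practice you would normally rely on a library implementation.

```python
import math

def accuracy(y_true, y_pred):
    """Fraction of predictions that match the true labels."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)

def f1_score(y_true, y_pred, positive=1):
    """Harmonic mean of precision and recall for the positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)
    fp = sum(t != positive and p == positive for t, p in pairs)
    fn = sum(t == positive and p != positive for t, p in pairs)
    if tp == 0:
        return 0.0  # no true positives: precision and recall are both zero
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)

def rmse(y_true, y_pred):
    """Root mean squared error for numeric predictions."""
    return math.sqrt(sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true))

# Imbalanced toy labels: only 2 of 10 examples are positive
y_true = [1, 0, 0, 0, 0, 0, 0, 0, 1, 0]
always_negative = [0] * 10

print(accuracy(y_true, always_negative))  # 0.8
print(f1_score(y_true, always_negative))  # 0.0
print(rmse([1, 2, 3], [3, 4, 5]))         # 2.0
```

A model that always predicts the negative class scores 80% accuracy here while finding none of the positives, which is why F1 is often preferred when classes are imbalanced.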

When to Use

Supervised learning is ideal when:

- You know the output you care about

- You have (or can collect) labeled data

- The goal is prediction, classification, or ranking

Unsupervised Learning

What It Is

Unsupervised learning is what we do when we don’t have labels — only raw inputs. The goal is not to predict, but to discover structure or relationships hidden in the data.

The model explores the data to answer questions like:

- Are there natural groupings?

- Which variables vary together?

- Can we reduce this data into something simpler?

Examples

- Customer segmentation: Given purchase histories of thousands of customers, group them into segments with similar behavior — without knowing in advance what the segments are.

- Anomaly detection: Detect abnormal behavior in credit card transactions — without labeled “fraud” examples.

- Topic modeling: Discover themes from a collection of research papers or news articles — without hand-tagging every article.

Common Techniques

- Clustering: Grouping similar data points (e.g., k-means, DBSCAN).

- Dimensionality Reduction: Representing data in fewer dimensions while preserving structure (e.g., PCA, t-SNE).

- Association Rules: Identifying relationships between items (e.g., “people who bought bread also bought butter”).
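
As an illustration of clustering, here is a bare-bones k-means loop on one-dimensional data. The spending figures and the starting centroids are assumptions picked for the example. Real implementations handle many dimensions, choose starting points more carefully, and stop once assignments no longer change.

```python
def kmeans_1d(points, centroids, iterations=10):
    """Plain k-means on 1-D data, starting from the given centroids."""
    centroids = list(centroids)
    clusters = []
    for _ in range(iterations):
        # Assignment step: attach each point to its nearest centroid
        clusters = [[] for _ in centroids]
        for p in points:
            nearest = min(range(len(centroids)), key=lambda i: abs(p - centroids[i]))
            clusters[nearest].append(p)
        # Update step: move each centroid to the mean of its cluster
        # (an empty cluster keeps its previous centroid)
        centroids = [
            sum(cluster) / len(cluster) if cluster else centroids[i]
            for i, cluster in enumerate(clusters)
        ]
    return centroids, clusters

# Hypothetical monthly spend per customer; no labels are provided
monthly_spend = [10, 12, 15, 11, 95, 100, 105, 98]
centroids, clusters = kmeans_1d(monthly_spend, centroids=[0, 50])

print(centroids)  # [12.0, 99.5]
print(clusters)   # [[10, 12, 15, 11], [95, 100, 105, 98]]
```

Nobody told the algorithm that there are "low spenders" and "high spenders". The two groups emerge from the distances between points alone, and naming them is left to the analyst.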

When to Use

- Labels are unavailable or unreliable

- You’re exploring the data or building features

- You want to visualize or simplify complex datasets

Practical Advice

- Use clustering for segmentation

- Use PCA or t-SNE to visualize high-dimensional data; PCA can also help denoise it

- Validate clustering with silhouette scores or domain knowledge

Semi-Supervised Learning

What It Is

Semi-supervised learning sits between supervised and unsupervised. It’s used when we have a small amount of labeled data and a much larger amount of unlabeled data.

The idea is to let the model learn structure from the unlabeled data, guided by the few labels we do have.

Examples

- Medical diagnosis: Annotated scans by radiologists are rare and costly. But there are thousands of unlabeled scans that can still help the model learn representations.

- Speech transcription: You might only have transcripts for a small portion of your audio files. The rest can still be useful for learning acoustics.

- Document tagging: Most documents might not have tags. You train on a few tagged examples and use the rest to improve generalization.

Techniques

- Pseudo-labeling: The model predicts labels for the unlabeled data, then retrains on its own confident predictions.

- Consistency training: The model should give the same output even when inputs are slightly perturbed.

- Graph-based methods: Similar examples (even unlabeled) are assumed to have similar outputs.
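
Pseudo-labeling, the first technique above, can be sketched in a few lines. The nearest-centroid classifier, the confidence margin, and all the numbers below are assumptions chosen for illustration. The point is the loop: train on the few labels, label the unlabeled points the model is confident about, then retrain on both.

```python
def fit_centroids(labeled):
    """Nearest-centroid classifier: store one mean per class (1-D inputs)."""
    sums, counts = {}, {}
    for x, y in labeled:
        sums[y] = sums.get(y, 0.0) + x
        counts[y] = counts.get(y, 0) + 1
    return {y: sums[y] / counts[y] for y in sums}

def predict_with_margin(centroids, x):
    """Return (label, margin), where margin is the gap between the two closest centroids."""
    dists = sorted((abs(x - c), y) for y, c in centroids.items())
    return dists[0][1], dists[1][0] - dists[0][0]

def pseudo_label(labeled, unlabeled, min_margin):
    """One round of pseudo-labeling: keep confident predictions, then retrain."""
    centroids = fit_centroids(labeled)
    confident = []
    for x in unlabeled:
        label, margin = predict_with_margin(centroids, x)
        if margin >= min_margin:          # only trust clear-cut predictions
            confident.append((x, label))
    return fit_centroids(labeled + confident), confident

# Two hand-labeled points and seven unlabeled ones (all invented)
labeled = [(1.0, "low"), (9.0, "high")]
unlabeled = [0.5, 2.0, 3.0, 5.1, 7.0, 8.0, 10.0]

new_centroids, confident = pseudo_label(labeled, unlabeled, min_margin=3.0)
print(len(confident))   # 6
print(new_centroids)    # {'low': 1.625, 'high': 8.5}
```

The point at 5.1 sits almost exactly between the two classes, so it is left unlabeled. Choosing that confidence threshold is the delicate part: set it too low and the model reinforces its own mistakes.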

When to Use

- When labeled data is expensive, but raw data is abundant

- When performance on small supervised sets needs a boost

Self-Supervised Learning

What It Is

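Self-supervised learning is a newer paradigm in which the supervision signal comes from the data itself: part of each input is hidden or transformed, and the model is trained to recover it. These cleverly designed training objectives are called pretext tasks.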

It learns general representations from unlabeled data, which can later be fine-tuned on specific downstream tasks.

Examples

- BERT: Learns language representations by masking out words in a sentence and predicting them.

- GPT: Predicts the next word in a sequence — training on billions of sentences without any manual labels.

- SimCLR (vision): Learns to recognize different views of the same image as “similar” and others as “different.”

Why It Works

These proxy tasks force the model to learn structure, patterns, and associations. For instance, if a model can predict a missing word, it must understand grammar, context, and meaning.
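
To see where the "free" labels come from, the sketch below turns one raw sentence into training pairs in the two styles mentioned above. It is a simplification under stated assumptions: real models work on subword tokens rather than whitespace-separated words, and BERT-style training masks a random subset of tokens rather than each word in turn. The function names are hypothetical.

```python
def next_word_pairs(sentence):
    """GPT-style pretext task: predict each word from the words before it."""
    words = sentence.split()
    return [(words[:i], words[i]) for i in range(1, len(words))]

def masked_word_pairs(sentence, mask_token="[MASK]"):
    """BERT-style pretext task: hide one word at a time and predict it."""
    words = sentence.split()
    pairs = []
    for i, target in enumerate(words):
        masked = words[:i] + [mask_token] + words[i + 1:]
        pairs.append((" ".join(masked), target))
    return pairs

sentence = "the cat sat"
for context, target in next_word_pairs(sentence):
    print(context, "->", target)
for masked, target in masked_word_pairs(sentence):
    print(masked, "->", target)
```

Every pair was produced from unlabeled text with no human annotation, which is why this approach scales to very large corpora.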

Applications

- Foundation models in NLP (BERT, GPT)

- Image classification (pretrained vision transformers)

- Speech recognition (wav2vec)

- Cross-modal retrieval (CLIP for vision + language)

When to Use

- When large-scale labeled data is not available

- When you want to pretrain general-purpose models

Reinforcement Learning

What It Is

Reinforcement learning is about learning through interaction. An agent learns to make decisions by taking actions in an environment, observing the outcomes, and adjusting its behavior to maximize long-term reward.

There are no fixed labels. The learning signal comes from feedback — rewards or penalties based on the actions taken.

Examples

- AlphaGo / AlphaZero: Learned to play Go at superhuman level via self-play and reward feedback.

- Robotics: A robot arm learns how to stack blocks or grasp an object through repeated trials.

- Real-time Bidding: Online ad systems learn which bids perform better based on click-through rewards.

- Autonomous driving: Learn steering, braking, and lane positioning through environment feedback.

Core Components

- Agent: The decision-maker

- Environment: Where the agent acts

- State: The current context

- Action: Choices available to the agent

- Reward: Numeric feedback signal

- Policy: A strategy that maps states to actions
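
The components above can be sketched with tabular Q-learning on a tiny corridor. Everything specific here is an assumption chosen for illustration: the five-state environment, the reward of 1.0 at the goal, the purely random exploration, and the values of `alpha` and `gamma`.

```python
import random

N_STATES = 5                    # states 0..4; state 4 is the goal
ACTIONS = ["left", "right"]

def step(state, action):
    """Environment: move along the corridor; reward 1.0 only at the goal."""
    if action == "left":
        next_state = max(0, state - 1)
    else:
        next_state = min(N_STATES - 1, state + 1)
    done = next_state == N_STATES - 1
    return next_state, (1.0 if done else 0.0), done

def train(episodes=500, alpha=0.5, gamma=0.9, seed=0):
    """Agent: learn action values Q(state, action) from trial and error."""
    rng = random.Random(seed)
    q = {(s, a): 0.0 for s in range(N_STATES) for a in ACTIONS}
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            action = rng.choice(ACTIONS)          # pure exploration
            next_state, reward, done = step(state, action)
            best_next = 0.0 if done else max(q[(next_state, a)] for a in ACTIONS)
            target = reward + gamma * best_next   # delayed reward, discounted
            q[(state, action)] += alpha * (target - q[(state, action)])
            state = next_state
    return q

q_table = train()
# Policy: in each non-goal state, pick the action with the highest value.
policy = [max(ACTIONS, key=lambda a: q_table[(s, a)]) for s in range(N_STATES - 1)]
print(policy)
```

Notice that only the final step is ever rewarded, yet the learned policy prefers "right" even in state 0: the discount factor propagates the delayed reward backward through the table.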

Unique Aspects

- Involves delayed rewards — actions now may affect long-term outcomes

- Requires exploration to discover effective policies

- Often simulated before deployment to reduce real-world risk

When to Use

- When learning via interaction is feasible

- When environments have feedback loops

- In robotics, game theory, logistics, control systems

Summary Table

| Paradigm | Data Needed | Core Goal | Example Use Cases |

|---|---|---|---|

| Supervised Learning | Labeled data | Learn input-output mapping | Spam filtering, price prediction, medical diagnosis |

| Unsupervised Learning | Unlabeled data | Discover structure or relationships | Customer segmentation, anomaly detection, topic modeling |

| Semi-Supervised Learning | Small labeled + large unlabeled | Leverage raw data to enhance learning | Medical imaging, scientific data, low-resource NLP |

| Self-Supervised Learning | Unlabeled data + proxy tasks | Learn transferable representations | GPT, BERT, CLIP, image and audio pretraining |

| Reinforcement Learning | Environment + feedback loop | Maximize long-term reward via actions | Game AI, robotics, operations, recommendation loops |

Each learning paradigm brings a different philosophy and toolkit to the table. As a practitioner or researcher, understanding when and why to use each — and how they can be combined — is one of the most valuable skills you can develop.

In the next section, we’ll turn our attention to the practical types of ML models — not in theory, but in terms of the problems they solve and how they’re used in real applications.

Let’s continue.

1.7 Categories of Machine Learning Models

1.7.1 Problem-Oriented View

By now, we’ve explored how machines can learn: the different paradigms of supervised, unsupervised, semi-supervised, self-supervised, and reinforcement learning. But a natural next question follows:

“What kinds of models do we actually use in real-world machine learning tasks?”

The answer isn’t just a list of algorithms — it’s more helpful to think in terms of problem types. The kind of model you use depends on the nature of the problem you’re solving. Are you predicting a number? Classifying a label? Grouping similar things? Detecting something rare?

This section builds a mental map of core ML model categories, organized by the kind of task they’re designed to solve. You’ll start to see that many machine learning problems fall into familiar patterns — and choosing a model becomes a matter of recognizing the problem type, understanding the constraints, and selecting the right modeling strategy.

Regression Models

Goal: Predict a continuous quantity from input features.

Regression models are the go-to tools when your target variable is numerical — think price, temperature, duration, risk score. The model learns to fit a continuous function that maps inputs to outputs.

Examples:

- Predicting house prices based on size, location, and amenities

- Estimating sales revenue given ad spend and seasonality

- Forecasting demand for electricity or food delivery

Common Models:

- Linear Regression

- Ridge, Lasso, Elastic Net

- Decision Tree Regression

- Gradient Boosting Regression

- Neural Network Regressors

Key Ideas:

- Loss functions are often Mean Squared Error (MSE) or Mean Absolute Error (MAE)

- Regularization helps control overfitting, especially with many features or little data

- Feature scaling can improve convergence, especially for gradient-based models
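
As a rough sketch of these ideas, the snippet below fits a one-feature linear regression with the closed-form least-squares solution and reports both MSE and MAE. The data points and function names are made up for illustration.

```python
def fit_line(xs, ys):
    """Closed-form least squares for y ~ slope * x + intercept."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov_xy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    slope = cov_xy / var_x
    return slope, mean_y - slope * mean_x

def mean_squared_error(ys, preds):
    return sum((y - p) ** 2 for y, p in zip(ys, preds)) / len(ys)

def mean_absolute_error(ys, preds):
    return sum(abs(y - p) for y, p in zip(ys, preds)) / len(ys)

xs = [1, 2, 3, 4, 5]                  # hypothetical feature values
ys = [3.1, 4.9, 7.2, 8.8, 11.0]       # hypothetical noisy targets
slope, intercept = fit_line(xs, ys)
preds = [slope * x + intercept for x in xs]
mse = mean_squared_error(ys, preds)
mae = mean_absolute_error(ys, preds)
print(slope, intercept, mse, mae)
```

MSE squares each residual, so it punishes large misses more heavily than MAE does; which one you minimize is a modeling decision, not a detail.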

Classification Models

Goal: Assign input instances to one of several predefined classes.

Classification is perhaps the most common task in applied ML. From predicting churn to diagnosing disease, these models help decide which category a new observation belongs to.

Examples:

- Classifying emails as spam or not spam

- Identifying whether a transaction is fraudulent

- Predicting whether a patient has a disease based on symptoms

Common Models:

- Logistic Regression

- Decision Trees, Random Forests

- Support Vector Machines (SVM)

- Naive Bayes

- Gradient Boosting (XGBoost, LightGBM)

- Neural Networks (MLPs, CNNs)

Variants:

- Binary classification: Two classes (e.g., spam vs not spam)

- Multi-class classification: More than two classes (e.g., animal type)

- Multi-label classification: Multiple binary labels per instance

Clustering Models

Goal: Group similar data points together — without pre-existing labels.

Clustering is useful when you’re exploring the data and want to discover hidden groupings or behavioral patterns. It’s a form of unsupervised learning, often used for segmentation and anomaly detection.

Examples:

- Customer segmentation in marketing campaigns

- Grouping news articles by topic

- Organizing products based on usage patterns

Common Models:

- k-Means

- DBSCAN

- Agglomerative (hierarchical) clustering

- Gaussian Mixture Models (GMMs)

Challenges:

- Choosing the number of clusters (k)

- Interpreting clusters meaningfully

- Sensitivity to scaling and initialization
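
Here is a minimal sketch of k-Means (Lloyd's algorithm) in one dimension. The data, the fixed number of iterations, and the caller-supplied starting centroids are assumptions for illustration; library implementations add smarter initialization and convergence checks.

```python
def kmeans_1d(points, centroids, n_iters=10):
    """Alternate between assigning points and recomputing centroids."""
    for _ in range(n_iters):
        clusters = [[] for _ in centroids]
        for p in points:
            nearest = min(range(len(centroids)), key=lambda i: abs(p - centroids[i]))
            clusters[nearest].append(p)
        # Keep the old centroid if a cluster ends up empty.
        centroids = [sum(c) / len(c) if c else old
                     for c, old in zip(clusters, centroids)]
    return centroids, clusters

points = [1.0, 2.0, 3.0, 10.0, 11.0, 12.0]
centroids, clusters = kmeans_1d(points, centroids=[0.0, 5.0])
print(centroids, clusters)
```

Because the result depends on where the centroids start, it is worth rerunning with different initial values and comparing the outcomes.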

Time Series Forecasting Models

Goal: Predict future values of a variable over time, using its past behavior.

Time series problems are special because order matters — data points are sequentially dependent. These models learn temporal patterns, seasonality, trends, and noise.

Examples:

- Stock price forecasting

- Traffic flow prediction

- Server CPU utilization prediction

Common Models:

- ARIMA, SARIMA

- Exponential Smoothing (ETS)

- Prophet (by Meta)

- Recurrent Neural Networks (RNNs), LSTMs, GRUs

- Transformer-based models for time series (e.g., Time Series Transformer)

Best Practices:

- Use time-aware train-test splits (no shuffling)

- Detrend and deseasonalize when needed

- Evaluate with metrics such as MAPE and RMSE, reported per forecast horizon
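
The first best practice is easy to get wrong, so here is a small sketch of a time-aware split together with MAPE. The series values and the "repeat the last value" baseline are hypothetical.

```python
def time_aware_split(series, test_fraction=0.2):
    """Hold out the most recent observations; ordered data is never shuffled."""
    cutoff = int(len(series) * (1 - test_fraction))
    return series[:cutoff], series[cutoff:]

def mape(actual, forecast):
    """Mean absolute percentage error; assumes no actual value is zero."""
    errors = [abs((a - f) / a) for a, f in zip(actual, forecast)]
    return 100 * sum(errors) / len(errors)

daily_load = [100, 102, 105, 103, 108, 110, 115, 117, 120, 125]
train, test = time_aware_split(daily_load)
naive_forecast = [train[-1]] * len(test)    # baseline: repeat last seen value
print(train, test, mape(test, naive_forecast))
```

A naive baseline like this is a useful yardstick: a sophisticated model that cannot beat it is not earning its complexity.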

Dimensionality Reduction Models

Goal: Reduce the number of input features while preserving structure and variation.

High-dimensional data can be noisy and difficult to interpret. Dimensionality reduction helps simplify the dataset — for visualization, storage, or speeding up downstream modeling.

Examples:

- Visualizing handwritten digits in 2D

- Reducing image or text embedding size

- Compressing features for clustering

Common Models:

- Principal Component Analysis (PCA)

- t-distributed Stochastic Neighbor Embedding (t-SNE)

- Uniform Manifold Approximation and Projection (UMAP)

- Autoencoders (unsupervised deep learning)

Applications:

- Noise reduction

- Visualization

- Feature extraction for downstream models

Anomaly Detection Models

Goal: Identify rare or unusual observations that deviate significantly from the norm.

These models are critical in domains where rare events have high stakes: fraud, system failures, or medical emergencies. Unlike in standard classification, the anomalies themselves are often poorly represented in the training data.

Examples:

- Detecting credit card fraud

- Spotting network intrusions

- Identifying faulty sensors in industrial systems

Common Models:

- One-Class SVM

- Isolation Forest

- Autoencoder-based outlier detectors

- Statistical thresholds (z-score, IQR)

Challenges:

- Class imbalance (few anomalies)

- No clear labels for training

- Defining “normal” behavior varies by context
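
The statistical thresholds mentioned above fit in a few lines using Python's `statistics` module. The sensor readings are made up, and the cutoffs (`threshold=2.5`, `k=1.5`) are conventional starting points rather than rules; the right values depend on your definition of "normal."

```python
import statistics

def zscore_outliers(values, threshold=2.5):
    """Flag values more than `threshold` standard deviations from the mean."""
    mean = statistics.fmean(values)
    std = statistics.pstdev(values)
    return [v for v in values if abs(v - mean) / std > threshold]

def iqr_outliers(values, k=1.5):
    """Flag values outside [Q1 - k*IQR, Q3 + k*IQR]."""
    q1, _, q3 = statistics.quantiles(values, n=4)
    low, high = q1 - k * (q3 - q1), q3 + k * (q3 - q1)
    return [v for v in values if v < low or v > high]

readings = [10, 11, 9, 10, 12, 10, 11, 9, 10, 50]   # hypothetical sensor data
print(zscore_outliers(readings), iqr_outliers(readings))
```

One caveat worth knowing: in a small sample, a single extreme value inflates the standard deviation and can hide itself from a z-score test, which is why the quartile-based rule is often the more robust choice.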

Recommendation Systems

Goal: Suggest relevant items to users based on preferences or behavior.

These systems are behind many of the digital experiences we now take for granted — streaming services, e-commerce, and content feeds. The goal is personalization at scale.

Examples:

- Netflix recommending shows based on your viewing history

- Amazon suggesting products you might like

- Spotify curating your next playlist

Common Approaches:

- Collaborative Filtering: Learn from user-item interactions (e.g., matrix factorization)

- Content-Based Filtering: Recommend based on item/user features

- Hybrid Systems: Combine collaborative and content-based

- Deep Learning Recommenders: Use embeddings and attention for rich interactions

Key Concepts:

- Implicit vs explicit feedback

- Cold-start problem (new user or new item)

- Ranking vs rating prediction

1.7.2 Theoretical Axes: How Models Think and What They Assume

When learning machine learning, it’s tempting to approach models as a buffet — a menu of algorithms where you pick what works best empirically. And while that’s often true in practice, there’s immense value in asking: “How does this model reason about the world? What assumptions does it make? What kind of learner is it?”

That’s what this section is about.

Instead of grouping models by task (as we did in 1.7.1), we now classify them along conceptual axes — dimensions that describe how models learn, represent information, and make predictions. These axes help you build intuition for why certain models behave the way they do, and why they succeed (or fail) in different settings.

This theoretical lens is especially helpful when:

- You’re comparing algorithms that perform similarly in accuracy but behave very differently

- You want to diagnose underfitting/overfitting in a principled way

- You’re building models for high-stakes domains like healthcare or finance, where interpretability and robustness matter

Let’s begin with one of the most fundamental conceptual distinctions in ML: Parametric vs Non-Parametric models.

Parametric vs Non-Parametric Models

This axis tells us how much the model assumes about the structure of the solution before seeing the data — and how that assumption affects its ability to generalize, scale, and adapt.

Parametric Models

Parametric models assume that the function we want to learn has a fixed, finite structure — usually defined by a set number of parameters.

Once we choose a model class (say, a linear regression with an intercept and slope), the model doesn’t grow in complexity as the dataset grows. It just adjusts its fixed parameters (like weights or coefficients) to best fit the data.

Examples:

- Linear Regression (fixed number of weights)

- Logistic Regression

- Naive Bayes (fixed probability tables)

- Neural Networks (with fixed architecture)

Characteristics

- Fixed capacity: Model complexity is independent of data size.

- Often fast to train: A small, fixed set of parameters keeps optimization cheap (large neural networks are the obvious exception).

- Assumption-heavy: Works best when the true underlying relationship matches the model’s assumptions.

- Risk of underfitting: If the fixed structure is too simple for the task.

Analogy

Think of a parametric model like choosing a mold and pouring data into it. If the mold fits the shape of the data, great. If not, there’s only so much stretching it can do.

Non-Parametric Models

Non-parametric models don’t fix the number of parameters beforehand. Instead, their complexity grows with the data. They “remember” the training data (either explicitly or implicitly) and use it to make predictions in a more flexible, data-driven way.

Examples:

- k-Nearest Neighbors (stores entire dataset)

- Decision Trees (grow branches as needed)

- Gaussian Processes

- Kernel Methods (like SVM with RBF kernel)

Characteristics

- Flexible: Can adapt to highly nonlinear, complex patterns.

- Strong local interpolation: Predictions lean on nearby training examples, so they tend to be accurate wherever the data is dense.

- Often slower: Especially at inference time, since prediction involves scanning or comparing to the full dataset.

- Risk of overfitting: Without regularization or pruning.

Analogy

A non-parametric model is like having a growing notebook of examples. The more you see, the better your memory becomes. You don’t assume the shape of the function — you learn it from data as needed.

Trade-offs: Parametric vs Non-Parametric

| Feature | Parametric Models | Non-Parametric Models |

|---|---|---|

| Complexity | Fixed, regardless of data size | Grows with data |

| Flexibility | Lower, more rigid | High, adapts to data |

| Interpretability | Often easier to interpret | Varies: small trees are readable, kernel methods are opaque |

| Training Speed | Generally fast | Slower, especially with large data |

| Inference Speed | Fast (once trained) | Often slower due to data lookups |

| Risk | Underfitting if too simple | Overfitting if not regularized |

This axis helps you decide:

- Should I keep the model simple and interpretable? (Parametric)

- Or allow complexity to grow with data? (Non-Parametric)

- Do I need fast inference on-device? (Parametric)

- Or do I want to capture nuanced behavior in messy, nonlinear data? (Non-Parametric)
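
To see the trade-off in action, the sketch below fits both kinds of model to the same curved data. The linear model keeps two numbers no matter how much data it sees; the k-NN regressor keeps the dataset itself. The data (points on \(y = x^2\)) and the choice `k=2` are illustrative assumptions.

```python
def fit_linear(xs, ys):
    """Parametric: compress the data into a slope and an intercept."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    slope = (sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
             / sum((x - mean_x) ** 2 for x in xs))
    return slope, mean_y - slope * mean_x

def knn_predict(train_x, train_y, x, k=2):
    """Non-parametric: average the targets of the k closest stored examples."""
    nearest = sorted(zip(train_x, train_y), key=lambda pair: abs(pair[0] - x))[:k]
    return sum(y for _, y in nearest) / k

xs = [0, 1, 2, 3, 4, 5]
ys = [x ** 2 for x in xs]             # a curved relationship

slope, intercept = fit_linear(xs, ys)
linear_pred = slope * 2.5 + intercept
knn_pred = knn_predict(xs, ys, 2.5)
true_value = 2.5 ** 2
print(linear_pred, knn_pred, true_value)
```

The straight line underfits the curve, while k-NN lands close to the true value, at the cost of storing and searching every training point at prediction time.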

Probabilistic vs Non-Probabilistic Models

After understanding how parametric and non-parametric models differ in flexibility and capacity, let’s shift focus to a different question:

Does the model express uncertainty? Or does it make hard decisions without showing its confidence?

This axis — probabilistic vs non-probabilistic — deals with how models represent and communicate belief about predictions.

Probabilistic Models

A probabilistic model doesn’t just give you a prediction — it gives you a distribution over possible outcomes. It estimates \(P(Y \mid X)\), the probability of a label given the input, rather than committing to a single decision.

This is extremely useful in real-world scenarios where uncertainty matters.

Examples:

- Logistic Regression: Gives you the probability that a sample belongs to class 1.

- Naive Bayes: Models class-conditional distributions and uses Bayes’ Rule to compute \(P(\text{class} \mid \text{features})\).

- Bayesian Models: Instead of point estimates, learn distributions over parameters (e.g., weights have priors and posteriors).

- Gaussian Processes: Predict not just the mean function but also a confidence band for regression tasks.

What You Get:

- Probabilities that sum to 1 across classes

- A measure of confidence or uncertainty

- Interpretability: You can ask why the model is unsure

Why It Matters:

In fields like medicine, finance, or autonomous systems, the model’s confidence can be as important as its prediction. You don’t just want to know what the model predicts — you want to know how sure it is.

Non-Probabilistic Models

Non-probabilistic models don’t model uncertainty explicitly. They learn to draw decision boundaries or memorize examples, but do not tell you how confident they are about a prediction.

Examples:

- Support Vector Machines (SVM): Classify by maximizing the margin between classes. The result is a hard decision — class A or B — with no probability output (unless you add post-processing like Platt scaling).

- k-Nearest Neighbors (k-NN): Makes decisions based on majority voting among nearest neighbors. It doesn’t model a distribution — the “confidence” is just the vote ratio.

- Decision Trees (without probabilistic leaf modeling): Just follow the path and return a label.

Why Use Them?

- Often easier to train and tune

- Can perform very well on certain tasks

- No need for probabilistic calibration when hard decisions suffice

Practical Differences

| Feature | Probabilistic Models | Non-Probabilistic Models |

|---|---|---|

| Output | Probability distribution | Hard label or decision |

| Confidence estimation | Explicit and principled | Requires tricks (e.g., voting or margin distance) |

| Calibration | Supported natively or with tuning | Needs additional post-processing |

| Typical use cases | Healthcare, finance, risk-sensitive tasks | Clear-margin problems, real-time decisions |
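
The difference in output is easy to demonstrate. In this sketch, two inputs receive the same hard label but very different probabilities. The raw scores are hypothetical stand-ins for a linear model's output \(w \cdot x + b\), and the sigmoid is the mapping logistic regression uses.

```python
import math

def sigmoid(score):
    """Map a real-valued score to a probability in (0, 1)."""
    return 1.0 / (1.0 + math.exp(-score))

def hard_label(score):
    """Non-probabilistic view: only the side of the boundary matters."""
    return 1 if score >= 0 else 0

borderline_score, confident_score = 0.1, 4.0    # hypothetical model scores
labels = [hard_label(borderline_score), hard_label(confident_score)]
probs = [sigmoid(borderline_score), sigmoid(confident_score)]
print(labels, [round(p, 3) for p in probs])
```

Both cases are labeled class 1, but only the probabilistic output tells you that the first is nearly a coin flip.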

Probabilistic vs Deterministic (in General Modeling)

The distinction between probabilistic and non-probabilistic models in ML is rooted in a broader modeling philosophy found across the sciences: the difference between deterministic and probabilistic models.

Let’s unpack this carefully.

Deterministic Models

A deterministic model operates under a strict principle:

Given the same input, it always produces the same output.

There’s no element of chance or randomness — the system’s behavior is fully defined by the model equations and parameters. If you know the input, and the model structure, the outcome is completely predictable.

These models often reflect systems governed by physical laws or logical rules, where behavior is stable and precisely understood.

Examples:

- Physics equations: Newton’s second law, \(F = ma\) — the force is always the mass times the acceleration.

- Rule-based systems: A tax calculation program with hard-coded slabs. Same salary → same tax.

- Classical control systems: A PID controller’s output is fully specified given its tuning parameters and current state.

Deterministic models are ideal when:

- The system is governed by strict laws

- Randomness is negligible or irrelevant

- Precision and repeatability are more important than flexibility

Probabilistic Models

A probabilistic model, by contrast, embraces uncertainty. It says:

The same input might lead to different outputs — and I’ll model that uncertainty explicitly.

Instead of mapping inputs to single outputs, it maps inputs to distributions over possible outputs. This allows the model to express ambiguity, noise, or incomplete knowledge about the system.

Examples:

- Weather prediction: “There’s a 70% chance of rain tomorrow.” The system doesn’t claim certainty — it gives a probability distribution over possible weather states.

- Medical diagnosis models: Given symptoms, a patient might have Disease A with 60% probability, Disease B with 30%, and so on.

- Bayesian models: Model parameters themselves are treated as random variables with probability distributions.

Probabilistic models are powerful when:

- The underlying system is inherently random (e.g., user behavior, quantum physics)

- You want to model confidence or risk

- You prefer reasoning in terms of likelihoods, not just outcomes

So How Does This Relate to ML?

In machine learning:

- A probabilistic model (e.g., logistic regression, Naive Bayes, Gaussian processes) explicitly models distributions — often \(P(Y \mid X)\) — and outputs probabilities.

- A non-probabilistic model (e.g., SVM, k-NN, decision trees without probability leaves) makes direct decisions or predictions, but doesn’t quantify how uncertain it is.

That said, many ML models — even probabilistic ones — behave deterministically once trained.

For example, a logistic regression model trained on fixed data will always output the same probability for the same input. So at deployment time, it’s functionally deterministic, even though it was trained under probabilistic principles.

Why This Axis Matters

This distinction becomes vital when:

- You want your model to be well-calibrated (i.e., its predicted probabilities reflect real-world frequencies)

- You’re designing systems that require uncertainty-aware decisions

- You’re building ensembles or Bayesian pipelines

- You need interpretability or trustworthiness in predictions

Generative vs Discriminative Models

Our final theoretical axis compares how models approach the learning problem itself — not just what they predict, but what part of the data-generating process they try to understand or model.

At its core, this distinction asks:

Do we try to model how the data was generated? Or do we skip that and directly model how to make decisions or classifications?

Let’s explore.

Discriminative Models

Discriminative models focus on the boundary between classes. They answer the question:

Given an input \(X\), what is the most likely output \(Y\)?

In other words, they model the conditional probability distribution:

\[P(Y \mid X)\]

They don’t try to model how the input data is distributed; they only care about how to separate classes or predict outcomes effectively. This often makes them simpler, more focused, and more accurate for prediction tasks, especially when data is abundant.

Examples:

- Logistic Regression: Models the probability of class membership given features.

- Support Vector Machine (SVM): Finds the optimal decision boundary without modeling data distribution.

- Random Forest / XGBoost: Focus on accurate classification by minimizing predictive error.

- Neural Networks (for classification): Learn direct mappings from inputs to class labels.

Strengths:

- Typically better at classification tasks

- Fewer assumptions about data distribution

- Easier to train and tune for prediction accuracy

- Faster inference and less computational overhead

Generative Models

Generative models aim to learn how the data was generated. They model the joint probability:

\[P(X, Y)\]

This means they learn both:

- \(P(Y)\): The prior over classes

- \(P(X \mid Y)\): How each class generates data

Once they’ve learned this joint distribution, they can derive the conditional:

\[P(Y \mid X) = \frac{P(X, Y)}{P(X)}\]

But more than just classification, generative models can also be used to:

- Simulate or generate new data

- Handle missing inputs

- Enable unsupervised or semi-supervised learning

Examples:

- Naive Bayes: Models class-conditional feature distributions and uses Bayes’ rule.

- Gaussian Mixture Models (GMMs): Unsupervised generative clustering.

- Hidden Markov Models (HMMs): Model time series by assuming hidden generative states.

- GANs (Generative Adversarial Networks): Learn to generate realistic data samples like images or text.

- Variational Autoencoders (VAEs): Generate new samples from learned latent distributions.

Strengths:

- Can generate new data samples

- Useful when labeled data is scarce

- Can handle more complex inference tasks, like missing data imputation

- Better for semi-supervised, unsupervised, and transfer learning

Key Differences

| Aspect | Discriminative Models | Generative Models |

|---|---|---|

| What it models | Conditional probability: \(P(Y \mid X)\) | Joint distribution: \(P(X, Y)\) |

| Main goal | Best prediction of class or output | Understand data generation, allow sampling |

| Typical use | Classification, decision boundaries | Data synthesis, unsupervised tasks, semi-supervised learning |

| Can generate new data? | No | Yes |

| Learning difficulty | Simpler to train and optimize | Harder due to modeling full data distribution |

| Examples | Logistic Regression, SVM, Random Forest | Naive Bayes, GANs, VAEs, HMMs |

When to Use Which?

- Use discriminative models when your primary goal is accurate classification or prediction, especially when you have plenty of labeled data and don’t care about generating new samples.

- Use generative models when you need to understand the underlying data, simulate new data, work with unsupervised or semi-supervised setups, or do representation learning.

Practical Intuition

Imagine you’re trying to identify whether an email is spam or not.

- A discriminative model learns: “Given the features of this email (words, sender, structure), how likely is it to be spam?” → It focuses on separating spam from non-spam.

- A generative model learns: “What does a spam email typically look like?” and “What does a non-spam email typically look like?” → Then it compares which of the two is more likely to have produced the observed input.
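
The generative route can be sketched with a tiny Naive Bayes spam filter. All of the probabilities below are invented for illustration; in practice they would be estimated from word counts in labeled emails. The "naive" part is the assumption that words are independent given the class.

```python
# Hypothetical learned quantities: P(Y) and P(word | Y).
prior = {"spam": 0.4, "ham": 0.6}
word_given_class = {
    "spam": {"free": 0.30, "meeting": 0.02},
    "ham":  {"free": 0.03, "meeting": 0.20},
}

def posterior(words):
    """Compute P(Y | X) from the joint P(X, Y) = P(Y) * P(X | Y)."""
    joint = {}
    for cls in prior:
        likelihood = 1.0
        for w in words:
            likelihood *= word_given_class[cls][w]   # independence assumption
        joint[cls] = prior[cls] * likelihood
    evidence = sum(joint.values())                   # P(X)
    return {cls: joint[cls] / evidence for cls in joint}

p_free = posterior(["free"])
p_meeting = posterior(["meeting"])
print(p_free, p_meeting)
```

A discriminative model would skip the two intermediate tables and learn the final mapping from words to spam probability directly.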

1.8 Key Concepts and Terminologies

At this point in our journey, we’ve seen different kinds of models, different ways to classify them, and the mathematical lenses through which they reason about the world. But before we dive deeper into algorithms, it’s time to zoom in on the language of machine learning itself — the shared vocabulary that makes it all tick. These are concepts that will surface again and again, whether you’re working with decision trees, deep neural networks, or probabilistic models.

What exactly is a “feature”? And what are we trying to predict?

At the heart of any supervised machine learning task is a simple goal: learn a mapping from inputs to outputs. We observe the world in some form — a sentence, a sequence of numbers, a pixel grid, or a tabular record — and we’d like to use that observation to predict something.

The things we use to make those predictions are called features. They’re measurable properties of the input — numbers like age or salary, categorical variables like product type, or even the latent embeddings of an image or sentence.

What we want to predict is called the target or label. In a spam classifier, the label might be “spam” or “not spam.” In a house price predictor, it’s the dollar value. Features are your clues. Labels are the truths you hope to uncover from those clues.

Behind the scenes, these clues and truths are represented as vectors and values. Mathematically, if you have a dataset of \(n\) examples, each input \(x_i\) is a vector in \(\mathbb{R}^d\), and each label \(y_i\) is a number (for regression) or a category (for classification). The dataset looks like:

\[\mathcal{D} = \{(x_1, y_1), (x_2, y_2), \dots, (x_n, y_n)\}\]

These \(x_i\) are your feature vectors, and they live in a space with dimension equal to the number of features, which could be 3 or 300,000.

What is an “instance”?

An instance (or example or sample — the words are used interchangeably) is just a single row in your dataset. It’s one observation — one house with its size, location, price; one email with its word counts and label; one medical record with its features and diagnosis.

The full dataset is a collection of such instances, each with its own \(x_i\) and \(y_i\). And the collection is what we use to learn patterns.

Overfitting, Underfitting, and the Art of Generalization

If you’ve ever crammed for an exam by memorizing the questions from a past paper, only to realize the real test was slightly different — you’ve experienced the machine learning equivalent of overfitting.

Overfitting happens when a model learns the training data too well. It doesn’t just find the general trends — it learns the quirks, the noise, the exceptions. It performs beautifully on the data it has seen, but falls apart on anything new.

On the other end is underfitting — when the model is too simple to capture the patterns in the training data in the first place. Imagine trying to fit a straight line through a curved trend: you’re not even close.

The sweet spot we aim for is called generalization — the model performs well on unseen data. That’s the ultimate goal.

The tension between overfitting and underfitting is one of the oldest and deepest themes in ML. And it’s closely tied to something even more fundamental: the bias–variance tradeoff.

Bias–Variance Tradeoff: A Fundamental Dilemma

Let’s say we train many versions of a model on different training sets drawn from the same distribution. We then ask: how far are the model predictions from the true outcome on average?

Mathematically, the expected error of the model can be decomposed like this:

\[\mathbb{E}[(y - \hat{f}(x))^2] = \text{Bias}^2 + \text{Variance} + \text{Irreducible Error}\]

- Bias reflects error from erroneous assumptions, e.g., fitting a linear model to a non-linear world.

- Variance reflects how much the model’s prediction would change if you retrained it on a different sample — high variance means it’s too sensitive to the data.

- The irreducible error is the noise inherent in the problem — things no model can predict.

A model with high bias is simple but may miss the complexity. A model with high variance is complex but may “hallucinate” patterns. Striking the right balance is the core of good model design.

Loss Function vs Cost Function: How Models Learn

To learn anything, a model needs feedback — a way to know how wrong it is, so it can get better.

That’s where the loss function comes in.

A loss function measures the error for a single prediction. Think of it as a penalty: the further the predicted value is from the true label, the greater the loss.

For regression tasks, the most common is squared error:

\[\mathcal{L}(y, \hat{y}) = (y - \hat{y})^2\]

For classification, we often use cross-entropy, which compares predicted class probabilities to the true label:

\[\mathcal{L}(y, \hat{p}) = -\log \hat{p}(y)\]

The cost function (also called the objective function) is just the average loss over all examples:

\[J(\theta) = \frac{1}{n} \sum_{i=1}^{n} \mathcal{L}(y_i, \hat{f}(x_i))\]

And what does learning mean? Minimizing this cost: finding the parameters \(\theta\) that make the predictions as accurate as possible.
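
These three formulas translate almost line for line into code. In the sketch below, the function names and sample values are assumptions; the point is only that a loss scores one prediction while the cost averages those scores over a dataset.

```python
import math

def squared_error(y, y_hat):
    """Loss for a single regression prediction."""
    return (y - y_hat) ** 2

def cross_entropy(true_class, predicted_probs):
    """Loss for a single classification: -log of the true class's probability."""
    return -math.log(predicted_probs[true_class])

def cost(loss_fn, targets, predictions):
    """Average the per-example loss over the whole dataset."""
    losses = [loss_fn(y, p) for y, p in zip(targets, predictions)]
    return sum(losses) / len(losses)

regression_cost = cost(squared_error, [3.0, 5.0], [2.5, 6.0])

probs = {"spam": 0.8, "ham": 0.2}           # hypothetical model output
loss_if_spam = cross_entropy("spam", probs)
loss_if_ham = cross_entropy("ham", probs)
print(regression_cost, loss_if_spam, loss_if_ham)
```

Cross-entropy is small when the model puts high probability on the true class and grows quickly when it is confidently wrong.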

Evaluation Metrics: A Preview

We’ll explore metrics in much more depth later, but let’s peek at a few essentials.

For regression, we often use:

- Mean Squared Error (MSE):

\[\text{MSE} = \frac{1}{n} \sum_{i=1}^{n} (y_i - \hat{y}_i)^2\]

- R² Score:

\[R^2 = 1 - \frac{\sum_i (y_i - \hat{y}_i)^2}{\sum_i (y_i - \bar{y})^2}\]

For classification:

- Accuracy is the fraction of correctly predicted labels.

- Precision, recall, and F1-score come into play when the dataset is imbalanced.

- ROC-AUC helps assess how well the model ranks positive cases over negatives.
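
As a preview, here is how most of these metrics could be computed by hand. The labels and predictions are made up, and the helper names are assumptions; libraries provide tested versions of all of them.

```python
def mse(y_true, y_pred):
    return sum((y - p) ** 2 for y, p in zip(y_true, y_pred)) / len(y_true)

def r2_score(y_true, y_pred):
    mean_y = sum(y_true) / len(y_true)
    ss_res = sum((y - p) ** 2 for y, p in zip(y_true, y_pred))
    ss_tot = sum((y - mean_y) ** 2 for y in y_true)
    return 1 - ss_res / ss_tot

def classification_metrics(y_true, y_pred, positive=1):
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for y, p in pairs if y == positive and p == positive)
    fp = sum(1 for y, p in pairs if y != positive and p == positive)
    fn = sum(1 for y, p in pairs if y == positive and p != positive)
    accuracy = sum(1 for y, p in pairs if y == p) / len(pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    return {"accuracy": accuracy, "precision": precision,
            "recall": recall, "f1": f1}

reg_mse = mse([3, 5, 7, 9], [2, 5, 8, 9])
reg_r2 = r2_score([3, 5, 7, 9], [2, 5, 8, 9])

# Imbalanced toy labels: 3 positives, 7 negatives.
y_true = [1, 1, 1, 0, 0, 0, 0, 0, 0, 0]
y_pred = [1, 0, 0, 1, 0, 0, 0, 0, 0, 0]
report = classification_metrics(y_true, y_pred)
print(reg_mse, reg_r2, report)
```

On the imbalanced example, accuracy looks respectable while recall reveals that the model misses two of the three positive cases.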

How We Split the Data: Train, Validation, Test

No matter how powerful your model is, if you evaluate it on the same data you trained it on, you’ll be fooling yourself. That’s why we always split the data into:

- A training set to learn model parameters.

- A validation set to tune hyperparameters and make decisions about model architecture or early stopping.

- A test set — sacred and untouched — to evaluate performance on truly unseen data.

A common split is 70% train, 15% validation, and 15% test — though in low-data regimes, we use cross-validation to get more reliable estimates.

One cardinal rule: no peeking into the test set until the very end.
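
A three-way split is only a few lines of code. This sketch assumes the rows are independent, so shuffling is safe (for time series, split by time instead); the function name, fractions, and seed are illustrative choices.

```python
import random

def train_val_test_split(rows, val_fraction=0.15, test_fraction=0.15, seed=42):
    """Shuffle once, then carve out test, validation, and training sets."""
    shuffled = list(rows)
    random.Random(seed).shuffle(shuffled)     # fixed seed for reproducibility
    n_test = round(len(shuffled) * test_fraction)
    n_val = round(len(shuffled) * val_fraction)
    test = shuffled[:n_test]
    val = shuffled[n_test:n_test + n_val]
    train = shuffled[n_test + n_val:]
    return train, val, test

train, val, test = train_val_test_split(range(100))
print(len(train), len(val), len(test))
```

Fixing the seed means the test set stays the same across experiments, which keeps comparisons between models honest.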

1.9 Common Data Types and Formats in ML

Before you build models, write code, or plot ROC curves, there’s something even more fundamental you must understand: what kind of data are you dealing with? Machine learning doesn’t start with algorithms — it starts with data. And that data comes in many shapes, structures, and storage formats.

This section is about learning to recognize those shapes and choosing the right tools and representations for each.

Structured, Unstructured, and Semi-Structured Data

Let’s start by looking at the three broad categories of data you’ll encounter.

Structured Data

This is the most traditional and the easiest to handle. Think of it like a spreadsheet: every row is an instance (observation), and every column is a feature with a clearly defined data type — integer, float, string, boolean, date.

Examples:

- Customer records in a CRM

- Transaction logs

- Sensor readings

- A table of movies with genre, rating, and release year

Structured data fits naturally into relational databases (SQL) and is the default format for many ML tasks, especially in business analytics, finance, or operations.

Unstructured Data

Now step into the wild. Unstructured data doesn’t fit neatly into tables. It’s messy, rich, and often the most valuable — but also the hardest to process.

Examples:

- Raw text (emails, social media posts, documents)

- Images and videos

- Audio recordings

- Web pages, scanned PDFs

Unstructured data requires domain-specific preprocessing: NLP pipelines for text, convolutional networks for images, spectrograms for audio, etc.

Semi-Structured Data

This is the in-between world. It’s not flat like a table, but it still has some inherent structure — just not as rigid or uniform.

Examples:

- JSON objects

- XML files

- NoSQL documents (MongoDB)

- Log files or events with key-value pairs

You’ll often find semi-structured data when scraping websites, calling APIs, or logging user activity. It usually needs flattening or transformation before modeling.
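As a small illustration of flattening, the sketch below uses `pandas.json_normalize` on two made-up records. The field names (`user`, `meta`, `amount`) are hypothetical; the point is only that nested keys become columns and missing keys become missing values.

```python
import pandas as pd

# Hypothetical API response: nested records whose shape is not uniform.
records = [
    {"user": {"id": 1, "name": "Ada"}, "event": "click",
     "meta": {"device": "mobile"}},
    {"user": {"id": 2, "name": "Lin"}, "event": "purchase",
     "meta": {"device": "desktop", "amount": 19.99}},
]

# json_normalize flattens nested dictionaries into dotted column names.
flat = pd.json_normalize(records)

print(sorted(flat.columns))
# The first record has no "amount", so that cell is filled with NaN.
print(flat[["user.id", "event", "meta.amount"]])
```

The result is an ordinary table, which means everything said about structured data above now applies to it.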

Common File Formats in ML Projects

Each type of data typically comes in its own flavor of file formats. Knowing how to handle these is part of being an effective data scientist.

| Format | Use Case |
|---|---|
| CSV | Universally used for tabular data; easy to read and write; lacks support for metadata |
| Excel | Common in business workflows; supports multiple sheets, formatting |
| JSON | Ideal for nested data (APIs, configs); semi-structured |
| Parquet | Efficient, columnar format; great for large datasets in distributed environments (e.g., Spark, BigQuery) |
| TXT/LOG | For raw logs, corpus text, token streams |
| Image formats | JPEG, PNG, TIFF for computer vision tasks |
| Audio | WAV, MP3 for speech/audio tasks |

Pro tip: In real-world projects, you’ll often start with messy Excel or JSON dumps, and spend a significant amount of time cleaning, parsing, and standardizing before you can even think about modeling.
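One practical consequence of CSV lacking metadata is that column types are not stored in the file. The sketch below round-trips a tiny, made-up table through an in-memory CSV to show a date column coming back as plain text unless you ask pandas to parse it. The column names are assumptions chosen for illustration.

```python
import io
import pandas as pd

df = pd.DataFrame({
    "title": ["Movie A", "Movie B"],
    "released": pd.to_datetime(["2019-05-01", "2021-11-12"]),
    "rating": [7.5, 8.1],
})

# Write to an in-memory buffer instead of a file on disk.
buffer = io.StringIO()
df.to_csv(buffer, index=False)

# Read it back with default settings: the date column is no longer a datetime.
buffer.seek(0)
naive = pd.read_csv(buffer)
print(naive.dtypes)

# Read it back again, this time telling pandas which column holds dates.
buffer.seek(0)
restored = pd.read_csv(buffer, parse_dates=["released"])
print(restored.dtypes)
```

Formats such as Parquet store the schema alongside the data, which is one reason they are often preferred once a dataset has been cleaned.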

Data Modalities: Beyond Tables

Not all data is tabular. In fact, many cutting-edge ML applications deal with multi-modal data — where structure and format vary widely.

Let’s look at some major data modalities:

Tabular

Your standard structured format. Rows and columns. Great for tree-based models, logistic regression, and business data pipelines.

Time-Series

Sequential data where order and timestamp matter. Examples:

- Stock prices

- Sensor data

- Medical monitoring (ECG, heart rate)

Requires temporal modeling (e.g., LSTM, ARIMA, transformers for time-series).

Image

2D or 3D pixel arrays. Requires computer vision pipelines and models that capture spatial relationships (CNNs, ViTs).

Audio

Waveforms, frequencies, spectrograms. Speech recognition, music analysis, speaker identification — all fall under this.

Text

Natural language, documents, product reviews, tweets. Needs tokenization, embeddings, and NLP models.

Each modality brings its own challenges: preprocessing, representation, and architecture choices. Understanding what kind of data you have is essential for choosing the right modeling approach.
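One way to build intuition for these modalities is to look at the array shapes they typically arrive in. The sketch below uses placeholder NumPy arrays; the specific sizes (224×224 images, 16 kHz audio) and the token IDs are illustrative assumptions, not requirements.

```python
import numpy as np

# Placeholder arrays: the values are zeros, only the shapes matter here.
tabular = np.zeros((100, 8))         # 100 instances x 8 features
time_series = np.zeros((365, 3))     # 365 time steps x 3 sensor channels
image = np.zeros((224, 224, 3))      # height x width x RGB channels
audio = np.zeros(16000)              # 1 second of mono audio sampled at 16 kHz
text_tokens = np.array([12, 845, 3, 97])  # hypothetical integer token IDs

for name, arr in [("tabular", tabular), ("time_series", time_series),
                  ("image", image), ("audio", audio), ("text", text_tokens)]:
    print(f"{name:12s} shape={arr.shape}")
```

The shape often hints at the architecture: axes with a meaningful order (time, height, width) are what sequence and convolutional models are designed to exploit.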

Access and Storage: Where Does the Data Live?

Once you know what your data looks like, the next question is: where is it stored, and how do you access it?

Traditional Databases

- Relational (SQL): PostgreSQL, MySQL, SQLite. Best for structured, transactional data.

- NoSQL: MongoDB, Cassandra, DynamoDB. Designed for flexibility and scalability; good for semi-structured data.

Cloud Platforms

- Google Cloud Storage (GCS)

- Amazon S3

- Azure Blob Storage

Often used for large-scale storage, especially for raw files, logs, or image/audio datasets. Access is usually via SDKs or APIs.

APIs and Streams

- REST APIs for external data sources

- GraphQL for flexible querying

- Streaming platforms like Kafka or Flink, and real-time telemetry feeds

These are common in dynamic, real-time data environments like IoT, fintech, or social platforms.

In Practice

Knowing your data type, structure, and format isn’t just a technical checklist — it shapes the entire data pipeline:

- How you clean and preprocess the data

- Which models are appropriate

- What tools and infrastructure you’ll need

- How results will be interpreted and deployed

The better you understand the nature of your data, the more confident you’ll be in modeling it — and the more likely your models will reflect real-world patterns instead of artifacts.

1.10 Machine Learning Pipeline: A Practical Preview

At this point, we’ve talked about the kinds of data you’ll encounter, the types of learning paradigms you might use, and the models you’ll soon begin training. But before we dive into algorithms, there’s something more important to understand: how everything fits together.

Machine Learning is not just about fitting models — it’s about solving problems end to end. That means collecting data, understanding it, processing it, learning from it, and then using those learnings to power decisions in the real world.

This journey — from raw data to real-world impact — is what we call the Machine Learning Pipeline.

The Big Picture: From Data to Deployment

Let’s walk through the major stages of this pipeline. This is the scaffold you’ll hang all your knowledge on — a structure that will return again and again as we build models and systems.

1. Raw Data

Everything begins here. Data is often noisy, unstructured, incomplete, and messy. You might be handed an Excel sheet, a folder of images, or a firehose of real-time JSON logs. No matter the form, the starting point is usually disorganized and inconsistent.

2. Preprocessing

Before you can feed data to a model, it needs to be cleaned and made consistent. This phase includes:

- Handling missing values

- Dealing with outliers

- Normalizing or standardizing numerical values

- Encoding categorical variables

- Parsing dates and text

For images, this could mean resizing and normalization. For audio, removing background noise. For text, cleaning, tokenizing, and lowercasing.

This is where data hygiene is enforced. A model trained on dirty data is like a student learning from bad notes — confusion is inevitable.
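The tabular steps listed above can be sketched with scikit-learn’s preprocessing tools. This is a minimal example under stated assumptions: the columns (`age`, `income`, `city`) and their values are made up, and median and most-frequent imputation are just one reasonable choice among several.

```python
import numpy as np
import pandas as pd
from sklearn.compose import ColumnTransformer
from sklearn.impute import SimpleImputer
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import OneHotEncoder, StandardScaler

# Made-up data with missing values in both numeric columns.
df = pd.DataFrame({
    "age": [25, 32, np.nan, 51],
    "income": [40000, 52000, 61000, np.nan],
    "city": ["Pune", "Delhi", "Pune", "Mumbai"],
})

# Numeric columns: fill gaps with the median, then standardize.
numeric = Pipeline([
    ("impute", SimpleImputer(strategy="median")),
    ("scale", StandardScaler()),
])

# Categorical columns: fill gaps with the most frequent value, then one-hot encode.
categorical = Pipeline([
    ("impute", SimpleImputer(strategy="most_frequent")),
    ("encode", OneHotEncoder(handle_unknown="ignore")),
])

preprocess = ColumnTransformer(
    [("num", numeric, ["age", "income"]), ("cat", categorical, ["city"])],
    sparse_threshold=0,  # always return a dense array, easier to inspect
)

X = preprocess.fit_transform(df)
print(X.shape)  # (4, 5): 2 scaled numeric columns + 3 one-hot city columns
```

In a real project you would call `fit_transform` on the training set only and `transform` on validation and test data, so that the medians and scaling statistics come from training data alone.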

3. Feature Engineering

Once the data is clean, we try to extract signal from noise — creating or selecting features that best represent the underlying problem.

This could include:

- Creating derived metrics (e.g., age from date of birth)

- Extracting n-grams or embeddings from text

- Aggregating transaction counts per user

- Creating lag features in time-series

Good features can dramatically improve model performance. And sometimes, clever feature engineering beats fancy algorithms.
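Three of the ideas above (a derived metric, a per-user aggregate, and a lag feature) can be sketched in a few lines of pandas. All table and column names here are hypothetical, and the age calculation is a deliberate approximation based on an average year length.

```python
import pandas as pd

# Derived metric: approximate age from date of birth at a fixed reference date.
users = pd.DataFrame({
    "user_id": [1, 2],
    "date_of_birth": pd.to_datetime(["1990-06-15", "1985-01-30"]),
})
reference = pd.Timestamp("2024-01-01")
days_alive = (reference - users["date_of_birth"]).dt.days
users["age"] = (days_alive / 365.25).astype(int)  # approximate, ignores exact birthdays

# Aggregation: number of transactions per user.
transactions = pd.DataFrame({
    "user_id": [1, 1, 2, 1],
    "amount": [10.0, 25.0, 5.0, 7.5],
})
tx_counts = transactions.groupby("user_id").size().rename("tx_count").reset_index()
users = users.merge(tx_counts, on="user_id", how="left")

# Lag feature: the previous reading in a time-ordered series.
sensor = pd.DataFrame({"reading": [20.1, 20.4, 21.0, 20.8]})
sensor["reading_lag1"] = sensor["reading"].shift(1)  # first row has no predecessor

print(users)
print(sensor)
```

Lag features only make sense if the rows are sorted by time first; here the toy series is assumed to be in order already.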

4. Modeling

Now comes the part most people associate with machine learning — choosing a model and training it.

Depending on the problem, you might choose:

- A linear model like logistic regression

- A decision tree or forest

- A neural network

- A probabilistic model

- Or even a hybrid ensemble

Training means optimizing model parameters to minimize a cost function, using methods like gradient descent.

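You’ll also split your data into training, validation, and test sets so you can detect overfitting and check that the model generalizes. In practice, make this split before fitting any preprocessing step, so that statistics such as means or category lists are learned from the training set alone.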
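To show what “minimizing a cost function with gradient descent” looks like in practice, here is a minimal sketch that fits a straight line by hand with NumPy. The data is synthetic (true slope 3, intercept 2), and the learning rate and step count are assumptions that happen to work for this toy problem.

```python
import numpy as np

# Synthetic data: y = 3x + 2 plus a little noise.
rng = np.random.default_rng(0)
x = rng.uniform(-1, 1, size=200)
y = 3.0 * x + 2.0 + rng.normal(scale=0.1, size=200)

# Model parameters, starting from zero.
w, b = 0.0, 0.0
learning_rate = 0.1

for step in range(500):
    predictions = w * x + b
    errors = predictions - y
    cost = np.mean(errors ** 2)        # mean squared error over the dataset

    # Gradients of the cost with respect to each parameter.
    grad_w = 2 * np.mean(errors * x)
    grad_b = 2 * np.mean(errors)

    # Step downhill: move each parameter against its gradient.
    w -= learning_rate * grad_w
    b -= learning_rate * grad_b

print(f"w={w:.2f}, b={b:.2f}, cost={cost:.4f}")
```

Libraries hide this loop behind a `fit` call, but the underlying idea is the same: predict, measure the error, nudge the parameters, repeat.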

5. Evaluation

You’ve built a model. But is it any good?

Here, you’ll define metrics that make sense for the business or scientific goal.

- For classification: accuracy, precision, recall, F1-score, ROC-AUC

- For regression: MSE, MAE, R²

- For ranking: NDCG, MAP

- For imbalanced classes: confusion matrix analysis, alongside precision and recall rather than accuracy alone

Evaluation is more than just looking at a number. It’s about understanding where your model works, where it fails, and what risks those failures carry.
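A tiny example shows why a single number can mislead. The labels below are made up: 8 negatives and 2 positives, with a model that gets most negatives right but finds only one of the two positives.

```python
from sklearn.metrics import (
    accuracy_score,
    confusion_matrix,
    f1_score,
    precision_score,
    recall_score,
)

# Hand-written labels for an imbalanced problem (1 = positive class).
y_true = [0, 0, 0, 0, 0, 0, 0, 0, 1, 1]
y_pred = [0, 0, 0, 0, 0, 0, 0, 1, 1, 0]

accuracy = accuracy_score(y_true, y_pred)     # 0.8
precision = precision_score(y_true, y_pred)   # 0.5
recall = recall_score(y_true, y_pred)         # 0.5
f1 = f1_score(y_true, y_pred)                 # 0.5

# Rows are true classes, columns are predicted classes:
# [[TN, FP],
#  [FN, TP]]
matrix = confusion_matrix(y_true, y_pred)

print(accuracy, precision, recall, f1)
print(matrix)
```

An accuracy of 0.8 sounds respectable, yet the model misses half of the positive cases. Whether that is acceptable depends on what a missed positive costs in your domain.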

6. Deployment

Finally, if the model performs well, it can be integrated into a real-world application. This could mean:

- A web service that serves predictions via API

- A batch pipeline that runs nightly

- A mobile app integrating an on-device model

- A business dashboard that helps humans decide faster

Deployment isn’t the end — it’s the start of feedback loops, model monitoring, drift detection, and retraining.
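Drift detection can start very simply. The sketch below is one basic heuristic, not a standard or complete method: it measures how far the mean of a feature in live data has moved from its training mean, in units of the training standard deviation. The function name, the synthetic data, and the alert threshold are all assumptions for illustration.

```python
import numpy as np

def mean_shift_score(train_values, live_values):
    """Distance between live and training means, in training standard deviations."""
    train_values = np.asarray(train_values, dtype=float)
    live_values = np.asarray(live_values, dtype=float)
    return abs(live_values.mean() - train_values.mean()) / train_values.std()

# Synthetic feature values: one live batch looks like training, one has shifted.
rng = np.random.default_rng(0)
train = rng.normal(loc=50.0, scale=5.0, size=5000)
live_ok = rng.normal(loc=50.0, scale=5.0, size=500)
live_drifted = rng.normal(loc=58.0, scale=5.0, size=500)

THRESHOLD = 0.5  # hypothetical alert level; would need tuning per feature

score_ok = mean_shift_score(train, live_ok)
score_drifted = mean_shift_score(train, live_drifted)

for name, score in [("live_ok", score_ok), ("live_drifted", score_drifted)]:
    status = "DRIFT" if score > THRESHOLD else "ok"
    print(f"{name}: shift={score:.2f} -> {status}")
```

A check like this only catches shifts in the mean of a single feature. Production monitoring usually looks at full distributions, at several features together, and at the model’s outputs as well.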

Real-World Use Cases Across Domains

- Healthcare: Predict disease onset from patient history (EHR → preprocessing → features like comorbidity counts → model → deploy in a hospital workflow)

- Finance: Detect fraud in credit card transactions (real-time JSON logs → feature pipelines → anomaly detection models)

- Retail: Recommend products based on past purchases (transaction tables → user-product features → collaborative filtering)

- Manufacturing: Predict machine failure from sensor data (time-series → lag features → RNN or tree-based models)

Each of these pipelines may use different tools or models — but they all follow the same high-level structure.

Closing Thoughts: The Ground Beneath Our Models

We often think of machine learning as a world of clever algorithms and dazzling predictions — and yes, that’s part of the appeal. But underneath it all lies something more grounded: a deep relationship between data, structure, and insight.

In this opening chapter, we didn’t just define machine learning or rattle off its categories — we sketched out a mental map. We looked at how learning happens, how models differ, what shapes data can take, and how it flows through the machinery of a pipeline. More importantly, we tried to get comfortable with the language of machine learning — not just terms, but the ideas behind them.

Because here’s the truth: without this foundation, everything else becomes a trick. With it, every new concept — every algorithm, optimization method, or architecture — becomes easier to understand and connect. You’re not memorizing recipes; you’re learning how to cook.

So if this felt like a lot, that’s okay. You’re not supposed to master all of it at once. This is a landscape we’ll explore together, one layer at a time. We’ll revisit these concepts often — from different angles, with code, math, visual intuition, and practical examples.

In the next chapters, we’ll zoom in. We’ll start building. We’ll step into the world of algorithms — not as isolated tools, but as natural extensions of the ideas you’ve seen here.

But for now, pause and let this map settle. You’ve taken the first important step in becoming not just a user of machine learning — but a thinker in it.

Let’s get ready to build.