Data Preprocessing Part 1: Exploring, Profiling, and Collecting Data the Right Way

- Introduction: The Real Work Begins Before the First Model

- Data Preprocessing — Overview

- Data Collection and Understanding

- Types of Data

- Strategies for Data Collection

- Exploratory Data Analysis (EDA): Your First Conversation with the Data

- What Is EDA, Really?

- Goals of EDA: What Are You Trying to Learn?

- Initial Data Inspection

- 1. Dimensions: Get a Sense of Dataset Size

- 2. Data Types: Make Sure Your Columns Are What They Claim to Be

- 3. Sample Data: Peek Inside Before Diving Deep

- 4. Missing Values: Quantify What’s Absent

- 5. Duplicate Rows: Don’t Let Redundancy Sneak In

- 6. Sampling for Large Datasets

- 7. Streaming Data Windows

- Summary: High-Level Scanning Before Deep Dive

- Key EDA Techniques: A Practical Guide for Data Scientists

- A. Univariate Analysis

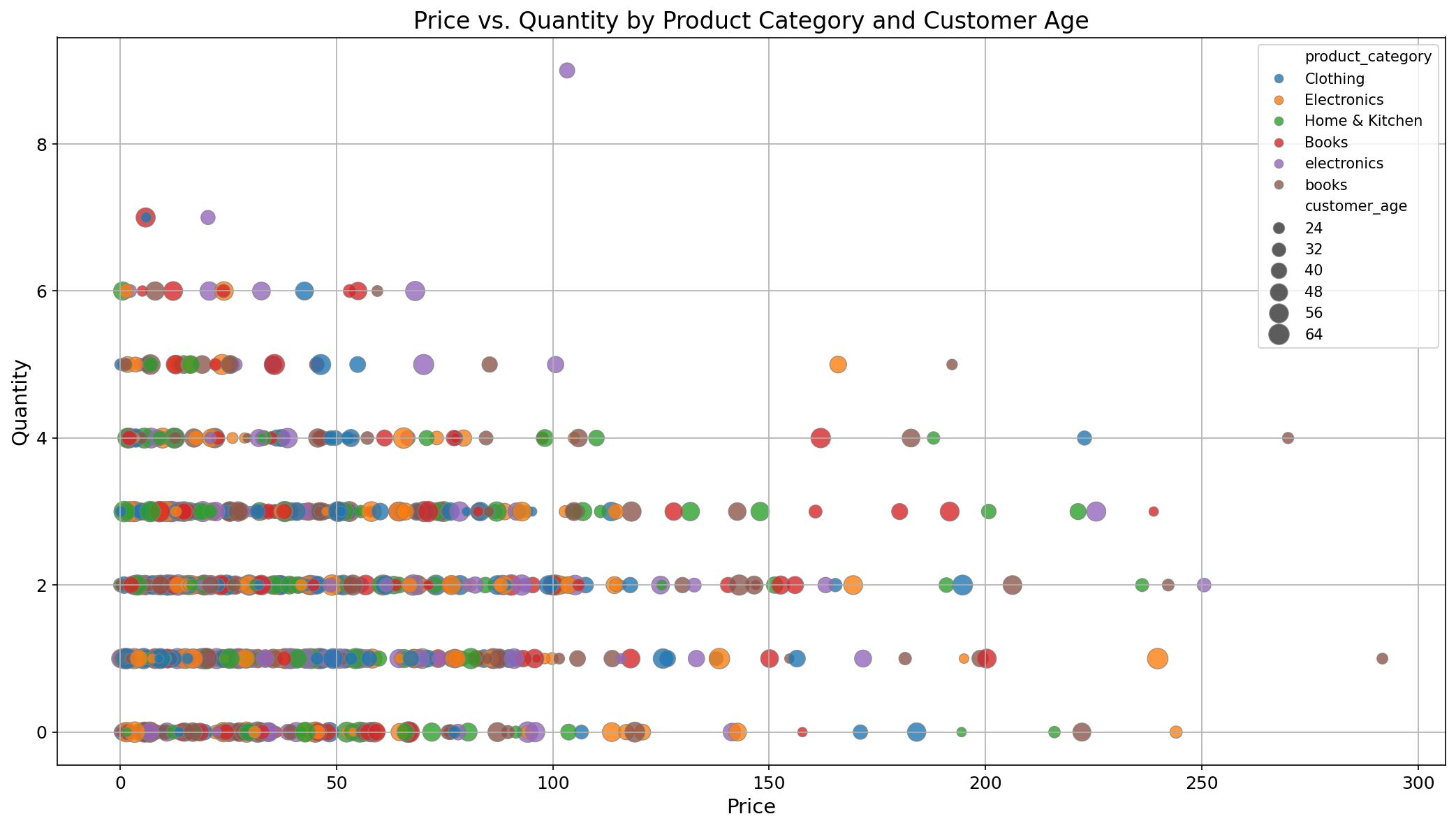

- B. Bivariate and Multivariate Analysis

- B.1. Why Bivariate and Multivariate Analysis Matter

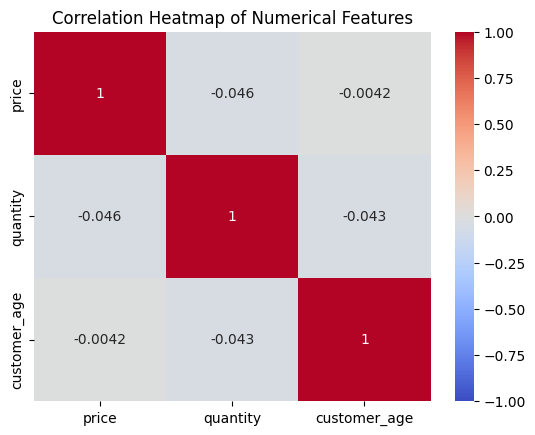

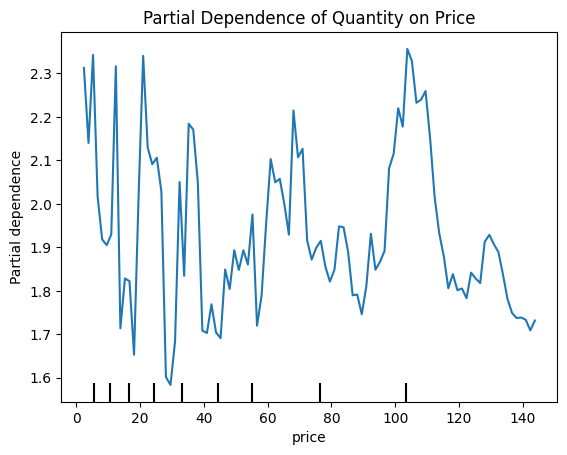

- B.2. Numerical vs. Numerical Analysis

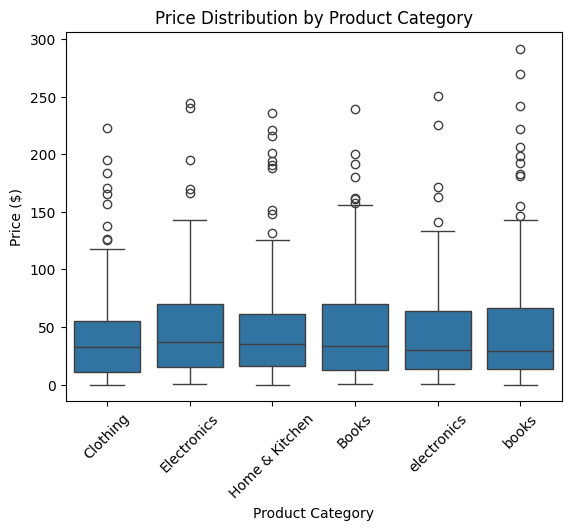

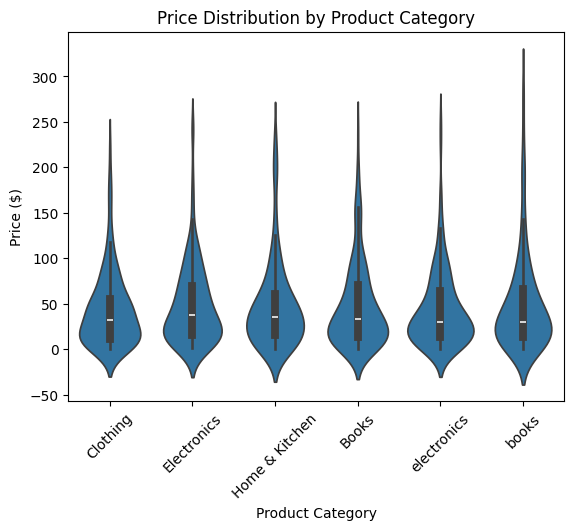

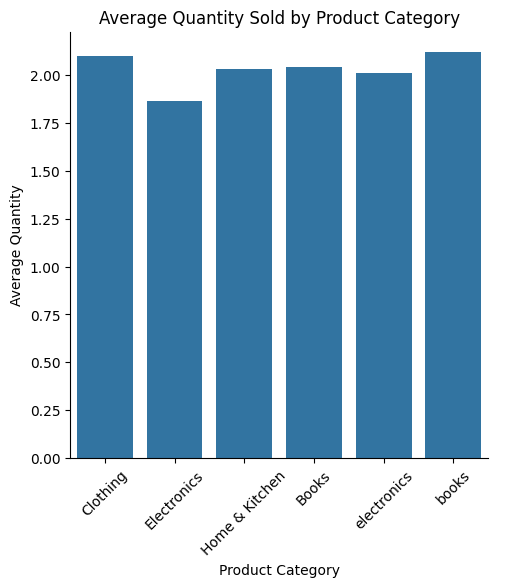

- B.3. Categorical vs. Numerical Analysis

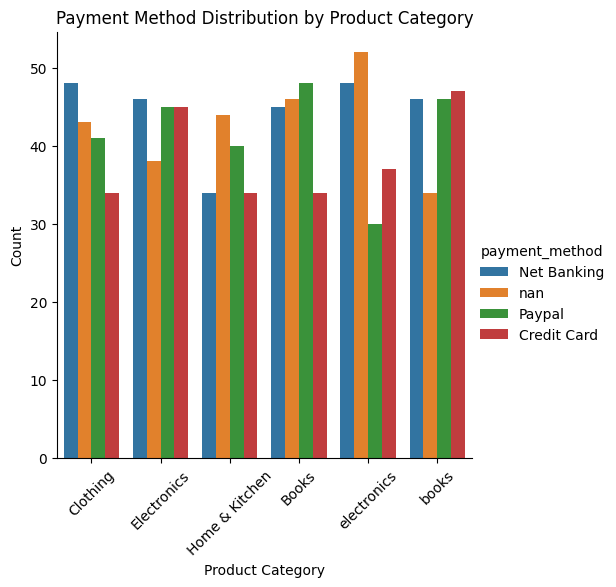

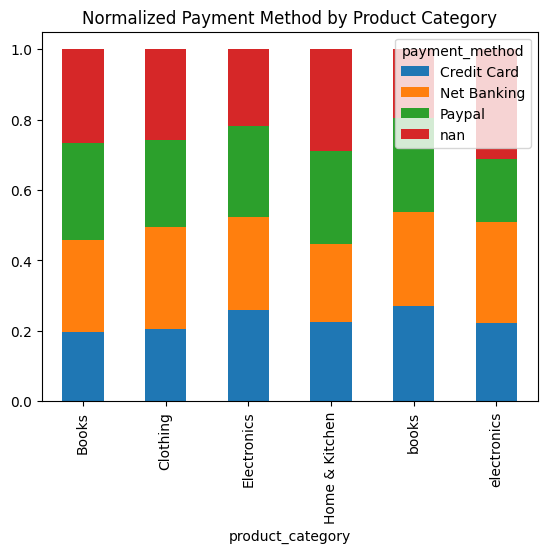

- B.4. Categorical vs. Categorical Analysis

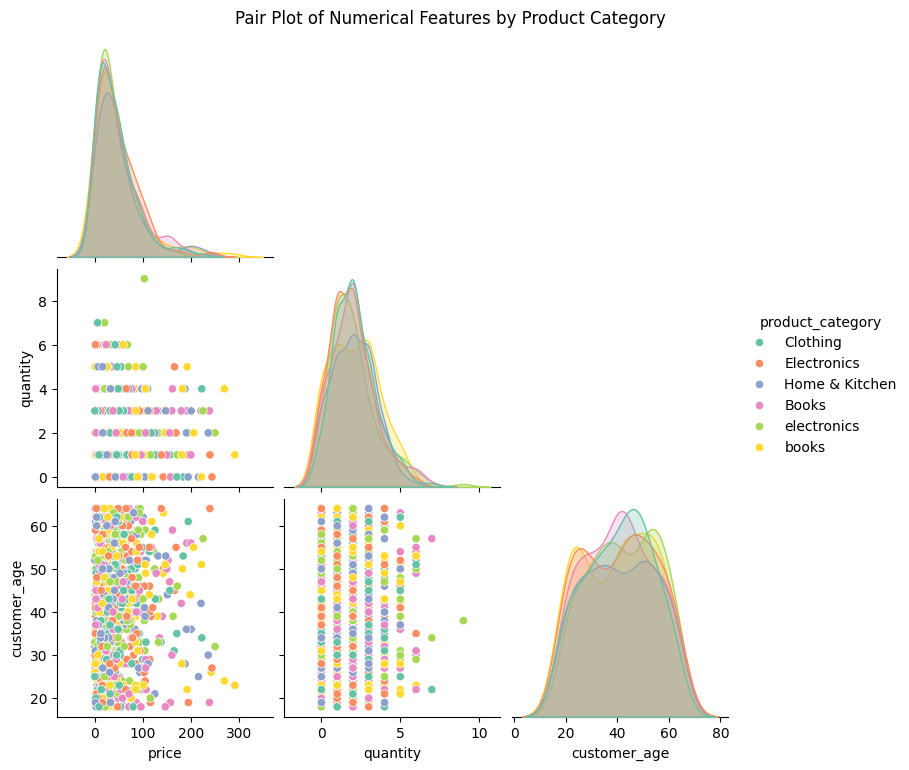

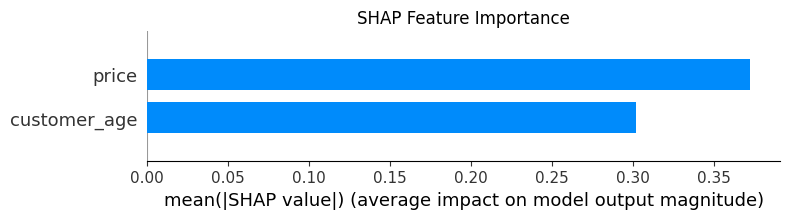

- B.5. Multivariate Analysis: The Big Picture

- B.6. Practical Tips for Effective Analysis

- B.7. Common Pitfalls and How to Avoid Them

- B.8. Final Thoughts

- C. Domain-Specific Checks: Tailoring EDA to Context

- D. Statistical Tests: Verifying Patterns with Rigor

- E. Bias and Fairness Analysis

- F. Production Monitoring Insights

- Diagnostic Checklist for EDA

- Practical Tips for Robust EDA

- Linking EDA to Action

- Wrapping Up

Introduction: The Real Work Begins Before the First Model

Let’s be honest—when people talk about machine learning, they usually jump straight to the flashy stuff. Neural networks. Transformers. Model accuracy. Leaderboards. But if you’ve actually built anything real-world with ML, you already know: that’s just the tip of the iceberg.

The real work? It happens way before that. In spreadsheets full of missing values. In timestamp formats that don’t match. In weird categorical labels like “N/A”, “Unknown”, and “NULL” all meaning the same thing. In outliers that make your scatter plots look like fireworks. That’s the part no one shows on LinkedIn. And yet, that’s where good models are made—or broken.

This preprocessing blog series is about that part.

Because whether you’re working on clickstream logs at Spotify, recommendation systems at Amazon, or churn models at a startup, the truth is the same: raw data is rarely model-ready. It’s messy, incomplete, biased, and often just plain confusing. And no matter how great your algorithm is, if the input is junk, the output will be too.

That’s where data preprocessing and feature engineering come in. These aren’t just boilerplate steps you rush through to get to the “real” work. They are the real work. It’s here that you understand the quirks of your data, clean up the mess, reshape things into a useful form, and create features that actually tell a story your model can learn from.

In this blog series, we’re going to walk through what it really takes to get data into shape for machine learning. No shortcuts, no hand-waving. Just practical, thorough, battle-tested techniques you’ll actually use. Here’s what’s coming up:

- Blog 1: Understanding the data—types, sources, quirks, and how to make sense of them

- Blog 2: Cleaning things up—missing data, outliers, inconsistencies

- Blog 3: Transforming data—scaling, encoding, handling messy formats

- Blog 4: Engineering features—both classic and clever techniques

- Blog 5: Dealing with imbalanced data—because real-world problems are rarely balanced

- Blog 6: Reducing dimensionality and choosing the right tools—because not every dataset fits in memory

Each post is packed with examples, visuals, Python code, and practical tips drawn from real projects. Whether you’re prepping for production or just trying to make your first model actually work, this series is here to help you do it right.

So let’s start at the beginning—why preprocessing matters, and what makes real-world data so challenging in the first place.

Data Preprocessing — Overview

The Role of Preprocessing in the Machine Learning Pipeline

In any ML pipeline, preprocessing is the stage where the raw, unfiltered mess becomes something structured, useful, and learnable. It sits right after data collection and ahead of feature engineering and model training.

Think of it like this:

Raw Data → Preprocessing → Feature Engineering → Modeling → Evaluation → Deployment

Why is this step so important?

- Because your models are picky. Many algorithms assume data is clean, numeric, standardized, and free of weird anomalies. If that’s not true, your results won’t be either.

- Because bad data = bad insights. You might get a high accuracy score, but if your input data was flawed, your predictions could be wildly wrong when it matters.

- Because preprocessing gives you control. Instead of feeding your model whatever came out of the database, you’re shaping the signal—and silencing the noise.

Done well, preprocessing makes modeling smoother, more interpretable, and more effective. Done poorly, it leads to bugs, brittle models, and wasted time retraining on nonsense.

Common Challenges in Real-World Datasets

If you’ve worked with real data, you already know it’s rarely clean. Some of the most common headaches you’ll face:

- Missing values: Maybe 30% of users didn’t fill in their age, or your IoT sensor glitched and skipped a few minutes of logging.

- Inconsistent formatting: One column says “yes” and “no”, another says “TRUE” and “FALSE”. Great.

- Outliers: A few records show users spending 12,000 minutes watching videos in a day. Bot? Glitch? Who knows.

- Data leakage: Some columns accidentally contain future info—like a “payment received” field in a model trying to predict who will default.

- Imbalanced classes: Only 2% of your customers churn. That’s good for business, bad for model training.

- Too many features: Thousands of columns, many of them useless. Welcome to high-dimensional data.

- Unstructured formats: Free text, images, audio files. None of which are usable until you process them the right way.

In the next part, we’ll get our hands dirty with data collection and exploratory analysis—what kind of data you’re working with, where it comes from, what it means, and how to begin making sense of it all.

Data Collection and Understanding

Before we start building models, tuning hyperparameters, or even cleaning data, there’s something far more fundamental we need to do: understand the data we have. This might sound obvious—but in practice, it’s where many machine learning projects start to go sideways.

Think of this as the “getting to know your dataset” phase. What kind of data is it? Where did it come from? How was it collected? Is it even suitable for the problem you’re trying to solve?

Skipping this step is like trying to write a novel without learning anything about the characters. You might produce something, but it won’t make much sense—and your model won’t either.

In this section, we’ll walk through how to look at your data with a curious, critical eye. Not just for the sake of completeness, but to truly understand its structure, context, and quirks. We’ll cover:

- The different types of data you might encounter, from structured tables to messy text, images, or time-series logs

- How data collection strategies differ across domains like e-commerce, healthcare, or sensor networks

- The kinds of issues you’re likely to run into—like timezone mismatches, inconsistent formats, or datasets too large to fit in memory

- How to think about ethical considerations, especially when your data includes sensitive or biased information

- And how to lay the groundwork for effective exploration and analysis with good documentation and sampling strategies

Whether you’re working with transaction logs, product catalogs, survey responses, or telemetry streams, this section is about developing the instincts to ask the right questions—and spot the red flags—before the modeling ever begins.

Let’s start by looking at the types of data you’ll commonly deal with in real-world machine learning workflows.

Types of Data

One of the first questions you should ask when you begin exploring a dataset is: What kind of data am I dealing with? The answer will shape nearly every downstream decision—from how you clean and preprocess it, to what types of models will work best.

Different types of data come with different structures, challenges, and requirements. Here’s a breakdown of the most common ones you’ll encounter, along with practical examples and the typical preprocessing each one needs.

Structured Data (Tabular)

This is the most familiar format—data organized neatly into rows and columns, often found in CSV files, relational databases, or Excel sheets. Each row represents a single observation (like a user or a transaction), and each column is a feature (like age, salary, or number of clicks).

Examples:

- Customer records with fields like age, location, account balance, and subscription type

- Sensor logs recording temperature, pressure, and timestamps every 10 seconds

- Transaction tables with purchase ID, item price, quantity, and payment method

Preprocessing Needs:

- Handle missing values and duplicates

- Normalize numerical features

- Encode categorical variables (one-hot, ordinal, target encoding, etc.)

- Detect and treat outliers

Tree-based models like Random Forests and XGBoost work very well on this kind of data. They are largely insensitive to feature scaling, but handling missing values, categorical encoding, and outliers still matters a lot for stability and performance.
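As a minimal sketch of the steps listed above, the following uses pandas on a made-up customer table. The column names, the median and "unknown" fill values, and min-max scaling are illustrative assumptions, not a recommendation for every dataset.

```python
import pandas as pd

# Hypothetical customer records; names and values are illustrative only.
customers = pd.DataFrame({
    "age": [25, 32, None, 32, 51],
    "plan": ["basic", "premium", "basic", "premium", None],
    "balance": [100.0, 2500.0, 300.0, 2500.0, 900.0],
})

def preprocess(frame: pd.DataFrame) -> pd.DataFrame:
    # 1. Remove exact duplicate rows.
    out = frame.drop_duplicates().copy()
    # 2. Fill missing values (median for numbers, a sentinel for categories).
    out["age"] = out["age"].fillna(out["age"].median())
    out["plan"] = out["plan"].fillna("unknown")
    # 3. Min-max normalize numeric columns to the range [0, 1].
    for col in ["age", "balance"]:
        lo, hi = out[col].min(), out[col].max()
        out[col] = (out[col] - lo) / (hi - lo)
    # 4. One-hot encode the categorical column.
    return pd.get_dummies(out, columns=["plan"])

clean = preprocess(customers)
print(clean)
```

In a real project the fill values and scaling ranges would normally be computed on the training split only and reused on validation and test data, so that nothing leaks across the split.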

Text Data

Text data is unstructured by nature. It doesn’t come in tidy columns, but instead as raw strings: reviews, support tickets, tweets, emails, doctor’s notes, and more. While it may look simple, extracting meaning from it requires multiple steps.

Examples:

- Product reviews in an e-commerce platform

- Chat transcripts from customer support

- News headlines or blog posts

- Medical diagnosis descriptions

Preprocessing Needs:

- Tokenization (splitting text into words or subwords)

- Lowercasing, punctuation removal, and stopword filtering

- Stemming or lemmatization (optional)

- Vectorization using methods like TF-IDF, Word2Vec, or BERT embeddings

- Handling misspellings or slang in user-generated content

Text data is commonly used with NLP models, ranging from traditional logistic regression with TF-IDF features to large transformer-based architectures.
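To make the tokenize, filter, and vectorize steps concrete, here is a small standard-library sketch. The stopword list and the sample reviews are invented, and the weighting is the plain textbook tf × log(N/df) form; libraries such as scikit-learn use smoothed variants, so their numbers will differ.

```python
import math
import re
from collections import Counter

# Tiny illustrative stopword list; real lists are much longer.
STOPWORDS = {"the", "a", "is", "and", "it", "this", "was", "but"}

def tokenize(text):
    """Lowercase, drop punctuation, split into words, remove stopwords."""
    words = re.findall(r"[a-z0-9']+", text.lower())
    return [w for w in words if w not in STOPWORDS]

def tfidf(docs):
    """Return one {term: weight} dict per document."""
    tokenized = [tokenize(d) for d in docs]
    n_docs = len(tokenized)
    # Document frequency: in how many documents does each term appear?
    doc_freq = Counter(term for toks in tokenized for term in set(toks))
    vectors = []
    for toks in tokenized:
        counts = Counter(toks)
        total = len(toks) or 1
        vectors.append({
            term: (count / total) * math.log(n_docs / doc_freq[term])
            for term, count in counts.items()
        })
    return vectors

reviews = [
    "The battery is great!",
    "Battery died fast, but the screen is great.",
    "Terrible screen.",
]
vectors = tfidf(reviews)
print(tokenize(reviews[0]))
print(vectors[2])
```

Terms that appear in only one review (such as "terrible") receive a higher weight than terms shared across reviews, which is the property TF-IDF is meant to capture.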

Image Data

Image data is structured very differently: it consists of pixels arranged in matrices, often with multiple color channels (RGB). Models don’t work directly with the image files—they need the raw pixel arrays in a consistent format.

Examples:

- Photographs for product catalogs

- X-ray or MRI scans in medical imaging

- Handwritten digits for digit recognition systems

Preprocessing Needs:

- Resize images to a fixed dimension

- Normalize pixel values (e.g., scaling from 0–255 to 0–1)

- Data augmentation (rotations, flips, cropping) to reduce overfitting

- Convert to grayscale or manage color channels if needed

Convolutional Neural Networks (CNNs) are the go-to architecture for image data.
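The pixel-level steps above can be sketched with NumPy on a synthetic image. This is only an illustration: the image is random noise, the helper names are hypothetical, and a center crop stands in for resizing, which in practice would use a resampling routine from an imaging library.

```python
import numpy as np

rng = np.random.default_rng(0)
# Synthetic 6 x 8 RGB image with 8-bit pixel values (height, width, channels).
image = rng.integers(0, 256, size=(6, 8, 3), dtype=np.uint8)

def normalize(img):
    """Scale pixel values from 0-255 to 0-1."""
    return img.astype(np.float32) / 255.0

def center_crop(img, size):
    """Cut a size x size square from the middle of the image."""
    h, w = img.shape[:2]
    top, left = (h - size) // 2, (w - size) // 2
    return img[top:top + size, left:left + size]

def horizontal_flip(img):
    """Mirror the image left to right (a simple augmentation)."""
    return img[:, ::-1]

def to_grayscale(img):
    """Weighted sum of the RGB channels using ITU-R BT.601 luma weights."""
    weights = np.array([0.299, 0.587, 0.114], dtype=np.float32)
    return img @ weights

x = normalize(center_crop(image, 4))
flipped = horizontal_flip(x)
gray = to_grayscale(x)
print(x.shape, flipped.shape, gray.shape)
```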

Time-Series Data

Time-series data captures how a signal changes over time. Each observation is timestamped and may exhibit patterns like seasonality, trends, or sudden spikes.

Examples:

- Stock prices recorded at 5-minute intervals

- Power consumption of a smart meter

- Website traffic or clickstream logs by the hour

- Heart rate readings from wearable devices

Preprocessing Needs:

- Parse and sort timestamps

- Handle missing intervals or gaps in data

- Create lag features, rolling averages, or trend indicators

- Check for stationarity, and decompose trend and seasonality where present

- Apply time-aware splits for train/test evaluation

Models like ARIMA, LSTMs, and temporal transformers are commonly applied here.
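A short pandas sketch of the sorting, lag, rolling, and time-aware split steps is shown below. The hourly traffic numbers are invented and the split position is an arbitrary assumption.

```python
import pandas as pd

# Hypothetical hourly website traffic, deliberately out of order.
traffic = pd.DataFrame({
    "timestamp": pd.to_datetime([
        "2024-01-01 03:00", "2024-01-01 01:00", "2024-01-01 00:00",
        "2024-01-01 02:00", "2024-01-01 04:00", "2024-01-01 05:00",
    ]),
    "visits": [130, 110, 100, 120, 140, 150],
})

# Parse and sort timestamps before computing anything order-dependent.
traffic = traffic.sort_values("timestamp").set_index("timestamp")

# Lag feature: the value one step earlier.
traffic["lag_1"] = traffic["visits"].shift(1)

# Rolling mean over the three PREVIOUS points. Shifting first keeps the
# current value out of its own feature.
traffic["roll_mean_3"] = traffic["visits"].shift(1).rolling(3).mean()

# Time-aware split: everything before the cut is training data,
# everything after is held out. No shuffling.
split_at = 4
train, holdout = traffic.iloc[:split_at], traffic.iloc[split_at:]
print(traffic)
```

Shifting before rolling is a deliberate choice here: a rolling window that includes the current row would let the target leak into its own feature.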

Multimodal Data

Multimodal datasets combine multiple data types—say, text descriptions, images, and numeric metadata—all representing the same entity.

Examples:

- Product listings with a title (text), image, price (numerical), and category (categorical)

- Posts on a forum with author info (structured), text (unstructured), and attached media (images/videos)

- Clinical trials with tabular patient data, imaging scans, and physician notes

Preprocessing Needs:

- Process each modality separately (text preprocessing, image normalization, etc.)

- Align features across modalities (e.g., match image and description for the same product ID)

- Handle missing modalities (e.g., an item missing a description but having an image)

- Fuse features before or during modeling (early or late fusion strategies)

Working with multimodal data often requires more complex pipelines and multi-branch model architectures.

Geo-Spatial Data

Geo-spatial data includes information tied to a specific location—latitude, longitude, and possibly more.

Examples:

- Delivery logs with GPS coordinates

- Wildlife tracking datasets with animal movement over time

- Store locations and user footfall heatmaps

Preprocessing Needs:

- Validate and standardize coordinate formats

- Visualize using maps for pattern detection

- Cluster spatial points (e.g., DBSCAN for detecting zones of activity)

- Engineer features like distance to nearest hub, region encoding, or geohashes

- Combine with other layers (e.g., weather, elevation, road networks) for enriched modeling

Specialized models like spatial-temporal networks or graph-based models are often used when spatial relationships are key.
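One of the features mentioned above, distance to the nearest hub, can be sketched with the haversine formula using only the standard library. The hub list is a hypothetical example, and the formula treats the Earth as a sphere, which is an approximation.

```python
import math

EARTH_RADIUS_KM = 6371.0  # mean Earth radius; spherical approximation

def is_valid(lat, lon):
    """Basic coordinate validation."""
    return -90.0 <= lat <= 90.0 and -180.0 <= lon <= 180.0

def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance between two points in kilometres."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlam = math.radians(lon2 - lon1)
    a = (math.sin(dphi / 2) ** 2
         + math.cos(phi1) * math.cos(phi2) * math.sin(dlam / 2) ** 2)
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))

# Hypothetical delivery hubs: name -> (latitude, longitude).
HUBS = {"london": (51.5074, -0.1278), "paris": (48.8566, 2.3522)}

def nearest_hub(lat, lon):
    """Return (hub_name, distance_km) for the closest hub."""
    if not is_valid(lat, lon):
        raise ValueError(f"invalid coordinates: {lat}, {lon}")
    distances = ((name, haversine_km(lat, lon, *pos)) for name, pos in HUBS.items())
    return min(distances, key=lambda pair: pair[1])

hub, km = nearest_hub(48.9, 2.4)
print(hub, round(km, 1))
```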

What to Look For

Identifying the correct data type early on helps you decide:

- What preprocessing steps are required

- What kinds of models are likely to perform well

- How to validate and visualize the data

- Whether your problem is even tractable given the available features

Also take time to define:

- The unit of observation (e.g., user, transaction, image, session)

- The target variable type (binary, multi-class, continuous, timestamped, etc.)

These framing choices will shape not only your modeling path, but your preprocessing decisions from the ground up.

Strategies for Data Collection

Once you’ve understood what kind of data you’re working with, the next step is to figure out where it’s coming from and how it’s being collected. Data collection is not a passive step—it’s a strategic choice that shapes the quality, relevance, and usability of everything that follows.

Poor collection practices lead to poor data, no matter how good your models or preprocessing pipelines are. On the flip side, thoughtful collection aligned with your problem and domain can save you hours—if not days—of wrangling and cleaning.

In this section, we’ll explore key data sourcing strategies across different contexts, along with trade-offs and practical considerations.

Source Identification

There’s no single place data comes from. Depending on your use case, you might be tapping into internal systems, pulling from the public web, or consuming real-time event streams. Here are some of the most common sources and what to watch out for:

1. Internal Systems and Logs

This is often your richest and most relevant data source—coming straight from within the organization or platform you’re analyzing.

Examples:

- User interaction logs

- Purchase histories and billing records

- Application event logs

- CRM (Customer Relationship Management) system exports

Considerations:

- Ensure data joins across systems are valid (e.g., matching user IDs or session tokens)

- Logs may be verbose—filter out what’s actually useful

- Pay attention to timezone consistency, logging frequency, and data completeness

2. External APIs

When internal data is limited, APIs can be a valuable way to enrich or supplement it with outside information.

Examples:

- Weather APIs to provide environmental context

- Social media APIs for sentiment analysis or engagement signals

- Open data portals for demographics, geography, or economic indicators

Considerations:

- Watch for rate limits and authentication requirements

- Responses may vary in structure—build resilient ingestion pipelines

- Data may update in real-time or batch—align frequency with your needs

- Always read the API documentation and terms of use

3. Web Scraping

For public data not offered via API, scraping can be an alternative—but it comes with caveats.

Examples:

- Product descriptions and prices on retail websites

- News articles, blogs, or forums

- Review pages, FAQs, and support forums

Considerations:

- Respect robots.txt and legal terms—scraping without permission can breach terms of service

- Websites may change structure without notice—build parsers that can fail gracefully

- You may need to throttle requests to avoid getting blocked

- Consider browser automation with a headless browser (e.g., Selenium) for dynamic pages

4. Manual Entry or Surveys

In some cases, especially early in a project, data collection is manual—via spreadsheets, call center transcripts, or structured forms.

Examples:

- User feedback forms

- Customer satisfaction surveys

- Operator notes from call centers or service teams

Considerations:

- Manual input is often error-prone—expect typos, missing fields, or inconsistent entries

- Standardize formats, units, and response choices during design

- Add metadata where possible (e.g., timestamps, respondent IDs)

- Smaller sample sizes may require statistical validation or augmentation later

5. Streaming Sources

Real-time data ingestion is increasingly common in domains like IoT, digital platforms, and monitoring systems.

Examples:

- Clickstream events on a website or app

- Sensor outputs from devices or machines

- Live telemetry from vehicles, wearables, or industrial systems

Considerations:

- Data may arrive in micro-batches or as continuous streams—choose your architecture accordingly (e.g., Kafka, Flink, Spark Streaming)

- Backpressure, out-of-order events, and system latencies are common challenges

- Windowing and buffering may be needed for aggregations or lag features

- Design your storage system (e.g., data lake, event log) to support reprocessing if needed

Collecting data isn’t just about volume—it’s about fitness for use. The right source for one problem might be irrelevant or misleading for another. And the more you understand your sources early on, the better your preprocessing and modeling decisions will be down the road.

Up next, we’ll dive into how domain context affects not just what data you collect—but how you interpret and prepare it for analysis.

Domain-Specific Nuances

Now that we’ve looked at where data comes from, it’s time to zoom in a bit more: what kind of data is it, and what unique quirks come with the territory?

Because let’s face it—data doesn’t exist in a vacuum. The way it’s structured, how often it arrives, how messy or sensitive it is—all of that depends on the domain it comes from. And if you ignore those domain-specific signals, you risk applying the wrong preprocessing strategy and building a model that’s technically accurate but practically useless.

Here’s how that plays out in the wild:

Let’s say you’re working with healthcare data.

You open up a dataset of electronic health records, and it looks pretty straightforward at first: patient age, gender, cholesterol levels, diagnosis codes, prescribed meds.

But then you notice that some patients are missing lab results. Others have measurements in different units—some in mg/dL, others in mmol/L. And a few fields contain sensitive identifiers that probably shouldn’t be there in the first place.

That’s the reality of healthcare data: it’s messy, sensitive, and filled with clinical nuance. You can’t just plug this into a model and hope for the best. You’ll need to:

- Standardize units before any modeling.

- Impute missing data carefully—because a missing test might mean “not needed” rather than “forgotten.”

- Strip out or mask personal identifiers to meet legal and ethical standards.

What looks like a missing value in healthcare might carry medical significance, so preprocessing needs to be slow, deliberate, and domain-aware.

Now switch gears to e-commerce.

Imagine you’re analyzing website clickstream logs. Each record has a timestamp, a product ID, a session ID, and an event type like “view” or “add to cart.” You quickly realize two things:

- Some users generate thousands of events, while others drop off after one click.

- Behavior changes wildly by day of the week, or even time of day.

This is noisy, high-volume data with lots of repetition. It’s also highly seasonal—think holiday sales or weekend traffic spikes.

Your job? Turn these raw logs into something your model can understand. That might mean:

- Aggregating clicks into session-level features (e.g., number of views before purchase).

- Engineering time-based features (e.g., recency, hour of interaction).

- Encoding product categories to reduce dimensionality without losing meaning.

Here, user behavior is your signal—but only after you’ve cleaned, grouped, and contextualized it.
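As a rough illustration of turning raw clickstream rows into session-level features, the sketch below uses a pandas groupby. The event log, column names, and feature choices are all hypothetical.

```python
import pandas as pd

# Hypothetical clickstream log: one row per event.
events = pd.DataFrame({
    "session_id": ["s1", "s1", "s1", "s2", "s2", "s3"],
    "event_type": ["view", "view", "purchase", "view", "add_to_cart", "view"],
    "timestamp": pd.to_datetime([
        "2024-03-02 10:00:00", "2024-03-02 10:01:30", "2024-03-02 10:05:00",
        "2024-03-04 21:15:00", "2024-03-04 21:16:00", "2024-03-05 08:00:00",
    ]),
})

# Boolean helper columns make the aggregation readable.
events["is_view"] = events["event_type"].eq("view")
events["is_purchase"] = events["event_type"].eq("purchase")

# Collapse events into one row per session.
sessions = events.groupby("session_id").agg(
    n_events=("event_type", "size"),
    n_views=("is_view", "sum"),
    purchased=("is_purchase", "any"),
    start=("timestamp", "min"),
    end=("timestamp", "max"),
)

# Time-based features derived from the session start.
sessions["duration_s"] = (sessions["end"] - sessions["start"]).dt.total_seconds()
sessions["start_hour"] = sessions["start"].dt.hour
sessions["is_weekend"] = sessions["start"].dt.dayofweek >= 5
print(sessions)
```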

Working with finance data? That’s a different beast.

Say you’re looking at transaction records from a trading platform. The timestamps are precise to the second (or even millisecond). You notice wild price jumps during market volatility—things that would be considered outliers in most domains.

But in finance? Those spikes aren’t bugs. They’re the whole point.

You also see multiple data streams—prices, volumes, quotes—all moving in parallel. If they’re not aligned down to the exact timestamp, your models will misfire.

Here, preprocessing means:

- Aggregating high-frequency data into intervals (e.g., 1-minute bars).

- Creating rolling statistics like moving averages or volatility indicators.

- Resisting the urge to “clean” away market noise—because it might be the most predictive signal you have.

Financial data demands respect for temporal precision and an understanding that extreme values often are the truth.
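The interval aggregation and rolling statistics described above might look like this in pandas. The tick data is invented, and the two-bar moving average is far shorter than anything used in practice; it is only there to keep the example small.

```python
import pandas as pd

# Hypothetical trade ticks with second-level timestamps.
ticks = pd.DataFrame(
    {
        "price": [100.0, 101.0, 99.5, 100.5, 102.0, 101.5],
        "volume": [10, 5, 8, 12, 7, 3],
    },
    index=pd.to_datetime([
        "2024-01-02 09:30:05", "2024-01-02 09:30:40", "2024-01-02 09:30:59",
        "2024-01-02 09:31:10", "2024-01-02 09:31:45", "2024-01-02 09:32:20",
    ]),
)

# Aggregate ticks into 1-minute open/high/low/close bars.
bars = ticks["price"].resample("1min").ohlc()
bars["volume"] = ticks["volume"].resample("1min").sum()

# Rolling statistics computed on the bars, not on raw ticks.
bars["return"] = bars["close"].pct_change()
bars["sma_2"] = bars["close"].rolling(2).mean()
print(bars)
```

Note that nothing here clips or removes the price jumps; the high and low of each bar keep the extremes visible.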

And what about sensor or IoT data?

Maybe you’re analyzing temperature readings from 100 different sensors in a manufacturing plant. At first glance, it’s just rows of numbers. But look closer, and you’ll see:

- Some sensors report every 5 seconds, others every 10.

- A few devices stopped sending data altogether for several hours.

- One sensor is stuck reporting exactly 23.0°C over and over again—suspiciously constant.

IoT data is notorious for being noisy, asynchronous, and full of device-specific quirks. Preprocessing here means:

- Interpolating gaps (but only where it makes sense).

- Smoothing noise using moving averages or filters.

- Creating derivative features like rate of change or direction of drift.

You’re not just denoising—you’re trying to reconstruct a signal from partial, inconsistent inputs.
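A small pandas sketch of three of these ideas follows: flagging a suspiciously constant sensor, smoothing with a moving average, and deriving a rate of change. The readings, the helper name `flag_stuck`, and the run-length threshold of four are assumptions chosen for illustration.

```python
import pandas as pd

# Hypothetical readings from one sensor, one every 10 seconds.
readings = pd.Series(
    [22.8, 23.1, 23.0, 23.0, 23.0, 23.0, 23.0, 23.4],
    index=pd.date_range(
        "2024-01-01 00:00", periods=8, freq=pd.Timedelta(seconds=10)
    ),
)

def flag_stuck(series, min_run=4):
    """Mark points that belong to a run of >= min_run identical values."""
    # A new run starts whenever the value differs from the previous one.
    run_id = series.ne(series.shift()).cumsum()
    run_length = series.groupby(run_id).transform("size")
    return run_length >= min_run

stuck = flag_stuck(readings)

# Moving average to damp noise (window size is arbitrary here).
smoothed = readings.rolling(3, min_periods=1).mean()

# Rate of change in degrees per second, using the real time gaps.
elapsed_s = readings.index.to_series().diff().dt.total_seconds()
rate_per_s = readings.diff() / elapsed_s
print(stuck.tolist())
```

Dividing by the actual elapsed time rather than assuming a fixed interval keeps the rate meaningful when sensors report at different or irregular frequencies.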

Finally, recommendation systems—an entirely different challenge.

Suppose you’re building a model to suggest products or movies based on user behavior. The raw data? A sparse matrix where users are rows, items are columns, and the values are clicks, ratings, or watch time.

Most of that matrix is empty—because no user interacts with more than a tiny slice of the catalog.

On top of that, many interactions are implicit. A user watching a video doesn’t necessarily mean they liked it. A skipped song doesn’t always mean it was disliked.

Your preprocessing needs to:

- Distill meaningful engagement signals from messy behavior logs.

- Engineer features like total interactions, last interaction time, or diversity of history.

- Handle cold-start problems—new users or new items with no past data.

This is where sparse matrices, embeddings, and hybrid content-collaborative features come into play.

Every domain tells a different story. What counts as noise in one field is a goldmine in another. What’s “missing” in one context might be medically significant, legally protected, or simply irrelevant in another.

When you understand the domain your data comes from, you start to:

- Ask better questions.

- Design more thoughtful preprocessing pipelines.

- Build models that actually make sense in the real world—not just on paper.

Next, let’s explore the practical, cross-domain challenges of data acquisition—because collecting the right data in the right format is a challenge in itself.

Data Acquisition Challenges

Even when you’ve figured out what kind of data you need—and where to get it—actually acquiring that data can feel like walking through a minefield. You’re rarely handed a clean, ready-to-use dataset. Instead, you’re navigating through half-documented APIs, poorly timestamped logs, inconsistent formats, and files so large your machine gasps just trying to open them.

Let’s unpack some of the most common (and frustrating) challenges that come up during data acquisition—and how to think about solving them.

Timezone Mismatches

This is a classic gotcha, especially in time-series datasets. Imagine combining logs from two systems—one logging in UTC, another in local time. Or even worse, logs that switch between standard and daylight saving time without any clear documentation.

Why it matters:

A one-hour shift might not sound like much, but it can completely break event ordering, create phantom trends, or cause your model to “learn” artificial behaviors. This is especially problematic when you’re doing session-based analysis, churn prediction, or anomaly detection based on time windows.

What to do:

- Always convert timestamps to a standard format (usually UTC) during ingestion.

- Use timezone-aware datetime objects in your code (`pandas.to_datetime(..., utc=True)`).

- Check for DST transitions. If your data straddles them, align everything to a single reference.
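Here is a minimal sketch of normalizing two log sources to UTC with pandas. Both tables are invented, and the assumption that the second system logs in America/New_York local time is purely illustrative; in practice that information has to come from documentation or the system owners.

```python
import pandas as pd

# System A already logs in UTC (as naive strings).
utc_logs = pd.DataFrame({
    "ts": ["2024-03-10 06:30:00", "2024-03-10 07:30:00"],
    "event": ["login", "purchase"],
})
# System B logs in local wall-clock time (assumed: America/New_York).
# 2024-03-10 is the day US clocks jump from 02:00 to 03:00.
local_logs = pd.DataFrame({
    "ts": ["2024-03-10 01:45:00", "2024-03-10 03:15:00"],
    "event": ["view", "logout"],
})

# Naive strings that are known to be UTC: parse as timezone-aware UTC.
utc_logs["ts_utc"] = pd.to_datetime(utc_logs["ts"], utc=True)

# Local times: attach the source timezone first, then convert.
# "raise" makes ambiguous or nonexistent DST times fail loudly.
local_logs["ts_utc"] = (
    pd.to_datetime(local_logs["ts"])
    .dt.tz_localize("America/New_York", ambiguous="raise", nonexistent="raise")
    .dt.tz_convert("UTC")
)

combined = (
    pd.concat([utc_logs, local_logs])
    .sort_values("ts_utc")
    .reset_index(drop=True)
)
print(combined[["ts_utc", "event"]])
```

The two local events look 90 minutes apart on the wall clock but are only 30 minutes apart in UTC, because the clock change falls between them. Sorting on the raw strings would also have put the events in the wrong order.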

Unit Inconsistencies

Have you ever seen a temperature column with values like 100, 38, and 273 all mixed together? Chances are, one’s in Fahrenheit, another in Celsius, and the last in Kelvin.

This is more common than it should be, especially when merging data from different countries, devices, or teams that weren’t on the same page.

Why it matters:

Models are only as smart as their input features. If those features represent apples and oranges, your model’s interpretation of the world is going to be wrong.

What to do:

- Standardize units at the very beginning of preprocessing.

- Include unit checks in your data validation logic (e.g., flag temperature > 200°C).

- When in doubt, consult metadata—or the people who created the data—before assuming correctness.
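The unit standardization and range check above can be sketched as follows. This assumes each reading arrives with a known unit label; the plausible range is a made-up threshold for illustration. A bare number with no unit metadata cannot be converted reliably.

```python
def to_celsius(value, unit):
    """Convert a temperature to Celsius given an explicit unit label."""
    unit = unit.strip().upper()
    if unit == "C":
        return value
    if unit == "F":
        return (value - 32.0) * 5.0 / 9.0
    if unit == "K":
        return value - 273.15
    raise ValueError(f"unknown temperature unit: {unit!r}")

# Hypothetical readings as (value, unit) pairs from different sources.
readings = [(100.0, "F"), (38.0, "C"), (273.0, "K"), (950.0, "C")]

# Assumed plausible range in Celsius for this (hypothetical) sensor type.
PLAUSIBLE_C = (-90.0, 200.0)

standardized = [to_celsius(value, unit) for value, unit in readings]
flagged = [
    c for c in standardized
    if not PLAUSIBLE_C[0] <= c <= PLAUSIBLE_C[1]
]
print([round(c, 2) for c in standardized])
print("flagged:", flagged)
```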

Missing Timestamps or Gaps in Streaming Data

In real-world streaming systems, data doesn’t always arrive in a neat, continuous flow. Maybe a sensor went offline. Maybe the system buffered data but never flushed it. Maybe there was network latency or a crash.

Why it matters:

Even a small gap in time-series data can disrupt rolling window calculations, confuse models trained on temporal order, or introduce bias into aggregate statistics.

What to do:

- Use time-based resampling to detect and fill gaps (e.g., `resample('5min').ffill()`).

- Be cautious with imputation. Don’t fill in hours of sensor silence with “normal” values unless you’re sure that’s appropriate.

- Log and analyze missingness itself—it may be a useful feature (e.g., devices that frequently go silent are more likely to fail).
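The following sketch shows one way to detect gaps with resampling, record the missingness, and fill only short gaps. The sensor values are invented, and the limit of one filled slot is an arbitrary assumption.

```python
import pandas as pd

# Hypothetical sensor that should report every 5 minutes
# but went silent between 00:10 and 00:30.
sensor = pd.Series(
    [20.0, 20.5, 21.0, 24.0, 24.5],
    index=pd.to_datetime([
        "2024-01-01 00:00", "2024-01-01 00:05", "2024-01-01 00:10",
        "2024-01-01 00:30", "2024-01-01 00:35",
    ]),
    name="temp_c",
)

# Put the data on a regular 5-minute grid; empty slots become NaN.
regular = sensor.resample("5min").mean()

# Keep the missingness as its own signal before filling anything.
was_missing = regular.isna()

# Forward-fill at most one consecutive slot; longer gaps stay NaN.
filled = regular.ffill(limit=1)
print(pd.DataFrame({"value": filled, "was_missing": was_missing}))
```

Capping the fill length keeps a short dropout from breaking rolling windows while leaving a long outage visibly missing.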

Access Restrictions

Even if data is publicly available, that doesn’t mean it’s freely accessible. APIs may require authentication keys, impose rate limits, or restrict the number of fields or records returned.

Why it matters:

A slow or throttled API can bottleneck your pipeline. Worse, some APIs have undocumented quirks—returning different schemas depending on request parameters, or going down intermittently.

What to do:

- Use caching to avoid hitting the same endpoint repeatedly.

- Respect rate limits and implement exponential backoff strategies.

- Log all requests and responses to help debug inconsistencies.

- If authentication is required, ensure your credentials are stored securely and not hard-coded into your scripts.
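Exponential backoff can be sketched in a few lines of standard-library Python. `TransientError`, `call_with_backoff`, and `flaky_fetch` are hypothetical names, and the delays and jitter fraction are assumptions; a real client would map specific HTTP status codes or exceptions onto the retryable case.

```python
import random
import time

class TransientError(Exception):
    """Hypothetical retryable failure, e.g. a rate-limit response."""

def call_with_backoff(fetch, max_retries=5, base_delay=0.5,
                      max_delay=30.0, sleep=time.sleep):
    """Call fetch(), retrying with exponentially growing waits."""
    for attempt in range(max_retries + 1):
        try:
            return fetch()
        except TransientError:
            if attempt == max_retries:
                raise  # out of retries: surface the error
            # Wait base_delay, then 2x, 4x, ... capped at max_delay.
            delay = min(max_delay, base_delay * 2 ** attempt)
            # Small random jitter so many clients do not retry in lockstep.
            sleep(delay + random.uniform(0, delay * 0.1))

# Demo: a fake endpoint that fails twice, then succeeds.
calls = {"n": 0}

def flaky_fetch():
    calls["n"] += 1
    if calls["n"] < 3:
        raise TransientError("rate limited")
    return {"status": "ok"}

waits = []  # record waits instead of sleeping, to keep the demo instant
result = call_with_backoff(flaky_fetch, sleep=waits.append)
print(result, [round(w, 2) for w in waits])
```

Passing the sleep function in as a parameter is a design choice that makes the retry logic testable without real delays.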

Dealing with Scale

Sometimes the challenge isn’t the format, but the sheer volume. Maybe you’ve got billions of rows across multiple tables. Maybe your logs weigh in at a few terabytes. Whatever the case, your laptop isn’t going to cut it.

Why it matters:

Trying to load massive datasets into memory leads to crashes, endless processing times, and wasted effort. Sampling can help—but it needs to be done in a way that preserves the underlying distribution.

What to do:

- Use distributed processing tools like Spark or Dask for handling large datasets.

- Store intermediate results in columnar formats (e.g., Parquet, Feather) that support fast reads and column-based access.

- Use cloud query engines (e.g., BigQuery, Athena) when working in an enterprise or data lake environment.

- If sampling, use stratified sampling to maintain class distributions or temporal structure.
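As an illustration of the stratified sampling point, the sketch below draws a 10% sample per class with pandas. The table is synthetic, with a 2% churn rate chosen to mirror the imbalance example earlier in the post.

```python
import pandas as pd

# Synthetic customer table: 1,000 rows, 2% churned.
customers = pd.DataFrame({
    "user_id": range(1000),
    "churned": [1 if i % 50 == 0 else 0 for i in range(1000)],
})

# Sample 10% within each class so the class ratio is preserved.
sample = (
    customers
    .groupby("churned", group_keys=False)
    .sample(frac=0.1, random_state=42)
)

print(len(sample), sample["churned"].mean(), customers["churned"].mean())
```

A plain random 10% sample could easily contain zero, one, or four churners by chance; sampling within each group removes that variance for the stratified column.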

Collecting data isn’t just about pointing to a source and hitting download. It’s about understanding how the data got there, how reliable it is, and what assumptions it carries. And it’s about having the right tools and mental models in place to deal with scale, structure, and unpredictability.

In the next section, we’ll go even deeper and talk about how to assess data quality and volume—so that you’re not just collecting data, but collecting it with purpose.

Data Volume and Quality

Once you’ve acquired your data, the next step is to ask: Do I have enough? And is what I have any good?

This isn’t just about counting rows—it’s about whether the data captures the underlying variability of the problem you’re trying to solve. Whether it’s too small to generalize from, too large to work with efficiently, or just plain messy, your approach to modeling will be shaped by both quantity and quality.

Balancing Volume with Variability

Let’s start with volume. One of the most common misconceptions in data science is the idea that more data is always better. While it’s often true that more data can help complex models generalize, not all data points contribute equally. You want data that spans across meaningful segments: different user types, behaviors, time periods, and edge cases.

Small Datasets:

- May not capture edge-case behaviors or seasonal variations.

- Often suffer from high variance and overfitting risks.

- Require careful validation and may benefit from augmentation (e.g., synthetically generating samples, bootstrapping, or domain-based feature synthesis).

Large Datasets:

- Can be difficult to visualize, inspect, or process on a single machine.

- Require sampling for EDA, ideally in a stratified or time-aware way.

- Enable deeper modeling strategies (e.g., ensembles, deep learning) but also demand robust pipeline design to avoid bottlenecks.

Tip: More data only helps if it adds diversity and signal—not just redundancy.

Quality: The Quiet Killer

Data quality issues often sneak in under the radar—subtle enough to go unnoticed during ingestion, but damaging enough to derail analysis or modeling down the line.

Watch for:

- Duplicates: Repeated entries inflate counts and skew statistics.

- Inconsistent Formats: Dates in multiple formats, categorical variables with typos or mixed casing (“Premium”, “premium”, “PREMIUM”).

- Invalid Values: Out-of-range entries like -5 in an age column, or 200°C in a body-temperature reading.

- Silent Errors: Mislabeled data, swapped columns, or features whose values were shifted during import.

Tip: Implement validation checks right after ingestion: unique counts, range enforcement, schema validation, and null inspections.
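A lightweight version of these post-ingestion checks might look like the sketch below. The expected columns, the 0 to 120 age range, and the `validate` helper are assumptions for this made-up table; dedicated validation libraries offer richer versions of the same idea.

```python
import pandas as pd

# Hypothetical freshly ingested table with several quality problems.
raw = pd.DataFrame({
    "user_id": [1, 2, 2, 4],
    "age": [34, -5, 41, None],
    "plan": ["Premium", "premium", "PREMIUM", "basic"],
})

EXPECTED_COLUMNS = {"user_id", "age", "plan"}  # assumed schema

def validate(frame):
    """Return a dict of simple data-quality metrics."""
    age = frame["age"]
    return {
        # Schema check: which expected columns are absent?
        "missing_columns": sorted(EXPECTED_COLUMNS - set(frame.columns)),
        # Null inspection per column.
        "null_counts": frame.isna().sum().to_dict(),
        # Uniqueness check on the key column.
        "duplicate_ids": int(frame["user_id"].duplicated().sum()),
        # Range enforcement (nulls are counted separately above).
        "age_out_of_range": int((~age.between(0, 120) & age.notna()).sum()),
        # Distinct labels before and after normalizing case and whitespace.
        "plan_values_raw": int(frame["plan"].nunique()),
        "plan_values_normalized": int(
            frame["plan"].str.strip().str.lower().nunique()
        ),
    }

report = validate(raw)
print(report)
```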

Streaming Data Considerations

In a streaming environment, data quality challenges are even harder. You may not have the luxury of seeing the full dataset at once.

To deal with volume and quality in a stream:

- Use sliding window analysis to monitor trends over recent time blocks (e.g., last 15 minutes, last 10,000 events).

- Track data drift and schema changes over time (e.g., new fields appearing, value distributions shifting).

- Log quality metrics in real-time: missing fields, anomalous values, unexpected volume changes.
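As a rough sketch of the sliding-window idea, the class below tracks the share of events with a missing field over the last N events. The class name, the event format (plain dictionaries), and the window size are assumptions for illustration; a production system would usually rely on its streaming framework's own windowing.

```python
from collections import deque

class WindowQualityMonitor:
    """Track the share of events with a missing field over the last `size` events."""

    def __init__(self, field: str, size: int = 10_000):
        self.field = field
        self.window = deque(maxlen=size)  # oldest flags fall off automatically

    def update(self, event: dict) -> float:
        # Record 1 if the field is absent or None, else 0.
        self.window.append(1 if event.get(self.field) is None else 0)
        return sum(self.window) / len(self.window)

monitor = WindowQualityMonitor(field="price", size=3)
stream = [{"price": 9.5}, {"price": None}, {}, {"price": 4.0}, {"price": 2.0}]
rates = [monitor.update(e) for e in stream]
print(rates)
```

The returned rate can be logged or compared against an alert threshold after every event.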

Ethical Considerations

Not all data issues are technical. Some of the most important ones are ethical.

When you collect or analyze data—especially data about people—you’re responsible for more than just performance metrics. You’re responsible for respecting privacy, avoiding harm, and building systems that treat individuals and groups fairly.

Here’s what to keep in mind:

Privacy and Regulation

- Regulations like GDPR (Europe) and CCPA (California) impose strict rules around what data can be collected, how it’s stored, and how users must be informed.

- Personally identifiable information (PII) such as names, IP addresses, locations, or contact info must be treated with care—even when “anonymized.”

- Consent matters. If you’re using survey data, customer behavior logs, or scraped content, ask whether the users knew their data would be used this way.

Tip: If you’re in doubt, strip it out. Always err on the side of minimalism when handling sensitive data.

Bias and Representation

Bias isn’t just a data science buzzword—it’s a real problem with real consequences.

Maybe your training data overrepresents one demographic and underrepresents another. Maybe a product recommendation algorithm works better for urban users than rural ones. Maybe a classifier has higher false positive rates for one group than another.

These aren’t just statistical quirks. They’re fairness issues. And they often originate in the data collection phase.

What to watch for:

- Skewed distributions: Are certain groups over/underrepresented?

- Historical bias: Are you inheriting unfair patterns from past human decisions (e.g., hiring, grading, or sentencing)?

- Label bias: Are ground truth labels subjective or inconsistently applied?

Tip: Explore demographic distributions early. Use fairness metrics later. But always keep ethical framing in mind during collection and preprocessing.

Verifying Relevance and Representativeness

Here’s a simple but powerful habit: before diving into modeling, pause and ask—

“Does this dataset reflect the real-world context of the problem?”

To answer that, create a data dictionary. Document:

- Feature names

- Data types

- Units of measurement

- Expected ranges

- Frequency of collection

- Notes on how values are derived or recorded

This doesn’t just help you. It helps your teammates, your future self, and your model audit process.

Example:

"watch_time": float, measured in minutes, expected range: 0–360. Logged at the end of each viewing session.

In large-scale or distributed settings, verifying representativeness becomes even more crucial. Sample carefully for EDA. Use tools that can query across data partitions or cloud storage. Ensure you’re not just capturing the easy data—but the edge cases, the minorities, and the surprises.
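A data dictionary is most useful when the data can be checked against it automatically. The sketch below encodes the watch_time entry from the example as a plain Python dictionary and counts violations; the dictionary layout and the sample values are assumptions for illustration.

```python
import pandas as pd

# Hypothetical data dictionary: one entry per feature.
data_dictionary = {
    "watch_time": {"dtype": "float64", "unit": "minutes", "min": 0, "max": 360},
}

sessions = pd.DataFrame({"watch_time": [12.5, 0.0, 415.0, 88.0]})

violations = {}
for name, spec in data_dictionary.items():
    col = sessions[name]
    # Values outside the documented expected range.
    outside = col[(col < spec["min"]) | (col > spec["max"])]
    violations[name] = {
        "dtype_ok": str(col.dtype) == spec["dtype"],
        "n_out_of_range": len(outside),
    }
print(violations)
```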

Coming up next, we’ll turn our attention to how to make sense of all this data once it’s collected—by exploring it. In the next section, we dive into Exploratory Data Analysis (EDA)—your first real conversation with the data.

Exploratory Data Analysis (EDA): Your First Conversation with the Data

By this point, you’ve collected your data, checked it for quality, ensured it’s ethically sourced, and maybe even wrangled a few formats into shape. Now comes a subtle but powerful shift in mindset: instead of just cleaning or organizing data, you’re listening to what it’s trying to tell you.

This is where Exploratory Data Analysis (EDA) comes in. Think of it as the detective phase of your workflow—an opportunity to ask questions, look for clues, and let the data surprise you. You’re not modeling yet. You’re building intuition, uncovering patterns, spotting pitfalls, and often redefining your understanding of the problem altogether.

Whether you’re dealing with a thousand rows or a billion, this stage lays the groundwork for every decision that follows.

What Is EDA, Really?

EDA isn’t just about making pretty charts. At its core, it’s an investigative process that helps you answer essential questions like:

- What kind of data am I really working with?

- Are there any glaring issues that would trip up a model?

- What kind of transformations might help improve signal clarity?

- Are there hidden structures or clusters I didn’t expect?

In structured workflows, EDA helps formalize data understanding. In agile or experimental projects, it helps you fail fast—by quickly revealing mismatches, biases, or dead ends before you invest too deeply.

Goals of EDA: What Are You Trying to Learn?

Let’s walk through the core goals of EDA—each one tied to a practical downstream use case.

1. Understand the Data Structure

Start with the basics:

- How many rows and columns are there?

- What are the data types? Are there date fields stored as strings?

- How much memory does the dataset consume?

Why it matters: Data type mismatches can silently break your code later. Knowing the shape and structure up front helps you estimate feasibility (can you work in-memory? do you need sampling or Spark?).
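These structural questions take only a few lines in pandas. The small frame below is made up for illustration, with a date column deliberately stored as text.

```python
import pandas as pd

df = pd.DataFrame({
    "signup_date": ["2024-01-05", "2024-02-11", "2024-03-02"],  # dates stored as text
    "plan": ["basic", "premium", "basic"],
    "spend": [10.0, 25.5, 7.25],
})

n_rows, n_cols = df.shape
memory_bytes = df.memory_usage(deep=True).sum()  # deep=True counts string contents too
print(n_rows, n_cols, memory_bytes)
print(df.dtypes)

# Convert the text column once its format has been confirmed.
df["signup_date"] = pd.to_datetime(df["signup_date"], format="%Y-%m-%d")
print(df.dtypes)
```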

2. Spot Patterns and Trends

Use visualizations and summary statistics to uncover correlations, cycles, or clusters.

Why it matters: These patterns inform feature engineering. For example, seasonality in transaction volume could lead you to create “day of week” or “holiday” flags. A strong correlation between two features might indicate redundancy—or a meaningful interaction.
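As a small illustration of turning a seasonal pattern into features, the sketch below derives day-of-week, weekend, and holiday flags from an order date. The holiday list is a hypothetical one-entry calendar; a real project would use a proper calendar for its market.

```python
import pandas as pd

tx = pd.DataFrame(
    {"order_date": pd.to_datetime(["2023-12-23", "2023-12-25", "2023-12-27"])}
)
holidays = pd.to_datetime(["2023-12-25"])  # hypothetical holiday calendar

tx["day_of_week"] = tx["order_date"].dt.dayofweek   # Monday=0 ... Sunday=6
tx["is_weekend"] = tx["day_of_week"] >= 5
tx["is_holiday"] = tx["order_date"].isin(holidays)
print(tx)
```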

3. Identify Anomalies

Anomalies might be outliers, missing values, data entry errors, or systemic issues.

Why it matters: These could distort your model’s understanding of reality. A sudden spike in page views might be an error—or a campaign. Either way, you want to know about it before it shapes your model.

4. Assess Data Quality

Look for:

- Duplicate records

- Typos in categorical fields (e.g., “Premium”, “premium”, “PREMIUM”)

- Implausible values (e.g., age = -10)

Why it matters: Garbage in, garbage out. EDA is often where hidden data quality problems surface.
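Two of these checks can be sketched in a few lines. The data and the 0 to 120 plausibility range for age are assumptions for illustration.

```python
import pandas as pd

customers = pd.DataFrame({
    "plan": ["Premium", "premium ", "PREMIUM", "Basic"],
    "age": [34, -10, 27, 51],
})

# Normalise casing and whitespace so variants collapse into one label.
customers["plan_clean"] = customers["plan"].str.strip().str.lower()
plan_counts = customers["plan_clean"].value_counts()

# Flag implausible ages instead of silently dropping them.
customers["age_implausible"] = ~customers["age"].between(0, 120)

print(plan_counts)
print(customers[customers["age_implausible"]])
```

Flagging rather than deleting keeps the decision about what to do with bad rows visible and reversible.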

5. Understand Distributions

Every feature has a shape. Some are bell-curved, others are right-skewed, some are bimodal.

Why it matters: The distribution of a feature affects how you scale it, transform it, and model it. For instance:

- Highly skewed features might benefit from log transforms.

- Heavy-tailed features may need clipping or winsorization.

- Zero-inflated features (e.g., most users have 0 returns) require different modeling strategies.
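The three situations above can be sketched on synthetic data. The exponential "spend" feature, the 1st/99th percentile caps, and the "returns" counts are all assumptions chosen for illustration.

```python
import numpy as np
import pandas as pd

rng = np.random.default_rng(0)
spend = pd.Series(rng.exponential(scale=50, size=1_000))  # synthetic right-skewed feature
returns = pd.Series([0] * 90 + [1] * 7 + [2] * 3)          # synthetic zero-inflated feature

spend_log = np.log1p(spend)                      # log(1 + x) is defined at zero
lo, hi = spend.quantile([0.01, 0.99])
spend_winsor = spend.clip(lower=lo, upper=hi)    # cap the tails at the 1st/99th percentiles
zero_share = (returns == 0).mean()               # fraction of exact zeros

print("skew before:", spend.skew(), "after log1p:", spend_log.skew())
print("max after winsorizing:", spend_winsor.max(), "zero share:", zero_share)
```

A log transform can overshoot and leave a mild left skew, so it is worth re-checking the distribution after transforming rather than assuming the problem is solved.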

6. Domain Contextualization

Numbers don’t speak for themselves—they need a narrative.

For example:

- A spike in “watch time” could reflect binge-watching behavior—or an error in logging durations.

- A drop in transactions might signal seasonality, not failure.

Why it matters: Context prevents misinterpretation. Without it, even the most polished EDA risks being irrelevant.

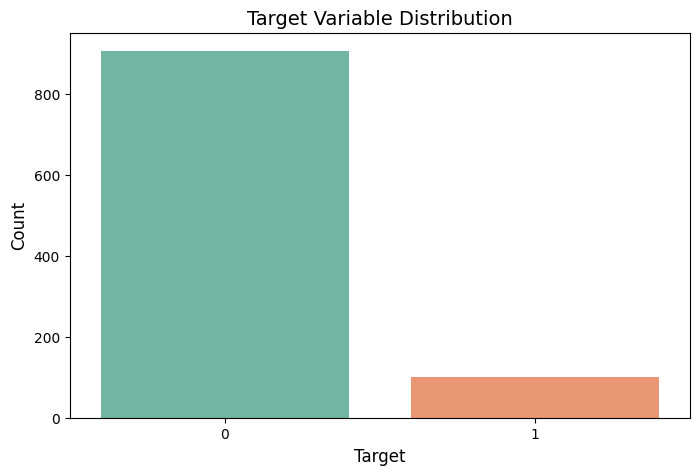

7. Bias and Fairness Considerations

Ask:

- Is the dataset overrepresenting one group over another?

- Are there meaningful outcome disparities across demographic features?

Why it matters: Unchecked bias in your training data leads to unfair predictions. EDA is the first and best opportunity to surface these issues.
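A first pass at both questions needs nothing more than grouped counts and means. The "group" attribute and "approved" outcome below are hypothetical, and a gap in rates is a prompt for investigation rather than proof of unfairness.

```python
import pandas as pd

applicants = pd.DataFrame({
    "group": ["A"] * 8 + ["B"] * 2,             # hypothetical demographic attribute
    "approved": [1, 1, 1, 0, 1, 1, 0, 1, 0, 1],
})

representation = applicants["group"].value_counts(normalize=True)  # share of rows per group
approval_rate = applicants.groupby("group")["approved"].mean()     # outcome rate per group

print(representation)
print(approval_rate)
```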

8. Production Readiness

Think ahead:

- Which features are likely to drift in production?

- Are there any that require live updates (e.g., session length)?

- Which metrics should be monitored post-deployment?

Why it matters: EDA isn’t just about the present dataset—it’s about future-proofing your model pipeline for stability, monitoring, and adaptation.
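One common way to check a feature for drift is a two-sample Kolmogorov-Smirnov test between a reference window and a recent window. This is a sketch on synthetic session lengths; the 0.01 threshold is an arbitrary assumption, and with very large samples even trivial shifts become statistically significant, so the test statistic deserves as much attention as the p-value.

```python
import numpy as np
from scipy.stats import ks_2samp

rng = np.random.default_rng(1)
train_sessions = rng.exponential(scale=30, size=2_000)  # synthetic training-time feature
live_sessions = rng.exponential(scale=45, size=2_000)   # synthetic production feature, shifted

result = ks_2samp(train_sessions, live_sessions)
drift_suspected = result.pvalue < 0.01   # threshold chosen only for illustration

print(result.statistic, result.pvalue, drift_suspected)
```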

EDA isn’t just a checklist—it’s a conversation with your data. One that’s essential if you want to make informed modeling choices, prevent technical debt, and build systems that actually reflect the world they operate in.

In the next part, we’ll roll up our sleeves and get hands-on with the first steps of EDA: inspecting your dataset’s structure, datatypes, missing values, and more.

Initial Data Inspection

Before jumping into visualizations or statistical summaries, you should always begin with a high-level scan of your dataset. This phase is less about deep analysis and more about getting your bearings—just enough to understand the shape and structure of what you’re dealing with.

It’s a bit like walking into a new apartment before you start decorating. You want to check how big the rooms are, what’s already there, and whether anything looks off at first glance. In data terms, that means: rows, columns, data types, missing values, duplicates, and a quick peek at the contents.

Let’s walk through each of these steps using a simulated e-commerce transactions dataset named df_demo.

import pandas as pd

import numpy as np

# Set random seed

np.random.seed(42)

# Generate the sample dataset

n = 1000

df_demo = pd.DataFrame({

'user_id': np.random.randint(1000, 1100, size=n),

'order_date': pd.date_range(start='2023-01-01', periods=n, freq='h'),

'product_category': np.random.choice(

['Electronics', 'Clothing', 'Books', 'Home & Kitchen', 'electronics', 'books'],

size=n

),

'price': np.round(np.random.exponential(scale=50, size=n), 2),

'quantity': np.random.poisson(lam=2, size=n),

'country': np.random.choice(['US', 'UK', 'IN', 'CA', np.nan], size=n),

'payment_method': np.random.choice(['Credit Card', 'Paypal', 'Net Banking', np.nan], size=n),

'timestamp': pd.date_range(end=pd.Timestamp.now(), periods=n, freq='min')

})

# Introduce missing values

df_demo.loc[df_demo.sample(frac=0.05).index, 'price'] = np.nan

df_demo.loc[df_demo.sample(frac=0.03).index, 'quantity'] = np.nan

# Add duplicate rows

df_demo = pd.concat([df_demo, df_demo.iloc[:5]], ignore_index=True)

# Preview the first few rows

df_demo.head()

Result

| user_id | order_date | product_category | price | quantity | country | payment_method | timestamp |
|---|---|---|---|---|---|---|---|
| 1051 | 2023-01-01 00:00:00 | Clothing | 45.50 | 1.0 | CA | Net Banking | 2025-06-07 01:32:12.071076 |
| 1092 | 2023-01-01 01:00:00 | Electronics | 76.59 | 2.0 | nan | nan | 2025-06-07 01:33:12.071076 |
| 1014 | 2023-01-01 02:00:00 | Home & Kitchen | 32.78 | 4.0 | UK | nan | 2025-06-07 01:34:12.071076 |
| 1071 | 2023-01-01 03:00:00 | Books | 2.08 | 4.0 | nan | Paypal | 2025-06-07 01:35:12.071076 |
| 1060 | 2023-01-01 04:00:00 | Clothing | 8.96 | 1.0 | IN | Paypal | 2025-06-07 01:36:12.071076 |

This demo dataset mimics a real-world e-commerce scenario. It contains 1,005 rows of transaction-like data with the following columns:

- user_id: A pseudo-identifier for each customer.

- order_date: The datetime of the order, spread across hourly intervals.

- product_category: Categories like “Electronics”, “Books”, and “Clothing”, with some inconsistencies (e.g., “books” vs “Books”) to simulate messy categorical data.

- price: Prices generated using an exponential distribution to reflect the skewed nature of real transaction data.

- quantity: Quantity values drawn from a Poisson distribution with a mean of 2.

- country: Randomly assigned country codes, with some entries recorded as 'nan' to test handling of incomplete location data.

- payment_method: Includes several common options, again with some 'nan' entries.

- timestamp: Simulates minute-wise activity logs, useful for time-series or streaming data analysis.

To make it more realistic, we intentionally introduced:

- Missing values in 'price' and 'quantity', plus 'nan' placeholder entries in 'country' and 'payment_method'

- Duplicate rows (5 exact copies)

- Inconsistent casing in 'product_category'

This gives us a dataset that’s clean enough to work with but messy enough to be instructive—perfect for showcasing the first steps of EDA.

1. Dimensions: Get a Sense of Dataset Size

The first thing to ask: how big is this dataset?

# Basic shape

print("Dataset shape:", df_demo.shape)

Dataset shape: (1005, 8)

This tells you how many rows and columns you’re working with. A shape of (1005, 8) in our case means 1,005 rows (including a few duplicates) and 8 columns.

Why this matters: It sets expectations for processing time, visualization limits, memory usage, and even what modeling techniques are feasible.

2. Data Types: Make Sure Your Columns Are What They Claim to Be

Data types are often misinterpreted during import. For example, dates may be read as plain strings, and numerical codes may be treated as integers when they’re actually categories.

# Quick look at column types and non-null counts

df_demo.info()

<class 'pandas.core.frame.DataFrame'>

RangeIndex: 1005 entries, 0 to 1004

Data columns (total 8 columns):

# Column Non-Null Count Dtype

--- ------ -------------- -----

0 user_id 1005 non-null int64

1 order_date 1005 non-null datetime64[ns]

2 product_category 1005 non-null object

3 price 955 non-null float64

4 quantity 975 non-null float64

5 country 1005 non-null object

6 payment_method 1005 non-null object

7 timestamp 1005 non-null datetime64[ns]

dtypes: datetime64[ns](2), float64(2), int64(1), object(3)

memory usage: 62.9+ KB

You might see:

- object for text fields like 'product_category'

- float64 for 'price', and also for 'quantity', which was generated as whole numbers but is stored as floats because it contains missing values

- datetime64 for 'order_date' and 'timestamp', already parsed correctly

Tip: A wrongly typed column won’t just affect analysis—it might silently fail in modeling.

3. Sample Data: Peek Inside Before Diving Deep

Always check the actual data values—not just metadata. Look at both the head and tail to spot anomalies like shifted columns, extra whitespace, or rows that shouldn’t be there.

# View first few rows

df_demo.head()

# View last few rows

df_demo.tail()

In our demo, the 'product_category' column has inconsistencies like 'Books' and 'books' that could split category counts if not handled later.

Why this matters: Visual inspection catches human-readable quirks automated tools often miss.

4. Missing Values: Quantify What’s Absent

Missing data is almost inevitable. The goal here is to measure it early—so you’re not surprised during preprocessing.

# Count missing values per column

df_demo.isnull().sum().sort_values(ascending=False)

price 50

quantity 30

user_id 0

order_date 0

product_category 0

country 0

payment_method 0

timestamp 0

dtype: int64

To check percentage-wise missingness:

# Percentage missing

missing_percent = df_demo.isnull().mean().sort_values(ascending=False) * 100

print(missing_percent)

price 4.975124

quantity 2.985075

user_id 0.000000

order_date 0.000000

product_category 0.000000

country 0.000000

payment_method 0.000000

timestamp 0.000000

dtype: float64

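You’ll find that around 5% of 'price' and 3% of 'quantity' are missing. Notice that 'country' and 'payment_method' report zero missing values even though np.nan was included among their choices. When np.random.choice builds an array from a list that mixes strings with np.nan, NumPy converts every element to a string, so those entries are stored as the literal text 'nan', which isnull() does not count. Placeholder strings like this are a common way for missing data to hide from a null check.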
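Text placeholders that stand in for missing values are easy to overlook, because a null check only counts real NaN values. The sketch below reproduces the effect on a small standalone Series (not df_demo) and converts the placeholder into a real missing value.

```python
import numpy as np
import pandas as pd

np.random.seed(0)
# Mixing strings with np.nan makes NumPy store the text 'nan' instead of a real NaN.
country = pd.Series(np.random.choice(["US", "UK", np.nan], size=200))

true_nulls = int(country.isnull().sum())             # real missing values
placeholder_nulls = int((country == "nan").sum())    # the literal text 'nan'

# Replace the placeholder text with a real missing value.
country_fixed = country.mask(country == "nan")
fixed_nulls = int(country_fixed.isnull().sum())

print(true_nulls, placeholder_nulls, fixed_nulls)
```

In real data the placeholder might be 'N/A', 'unknown', an empty string, or a sentinel number such as -999, so it helps to scan value_counts() for suspicious labels.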

5. Duplicate Rows: Don’t Let Redundancy Sneak In

We deliberately added duplicate records to simulate real-world data issues.

# Number of duplicate rows

print("Duplicate rows:", df_demo.duplicated().sum())

# View duplicate rows if needed

df_demo[df_demo.duplicated()]

Duplicate rows: 5

user_id order_date product_category price quantity country \

1000 1051 2023-01-01 00:00:00 Clothing 45.50 1.0 CA

1001 1092 2023-01-01 01:00:00 Electronics 76.59 2.0 nan

1002 1014 2023-01-01 02:00:00 Home & Kitchen 32.78 4.0 UK

1003 1071 2023-01-01 03:00:00 Books 2.08 4.0 nan

1004 1060 2023-01-01 04:00:00 Clothing 8.96 1.0 IN

payment_method timestamp

1000 Net Banking 2025-06-07 07:08:59.924664

1001 nan 2025-06-07 07:09:59.924664

1002 nan 2025-06-07 07:10:59.924664

1003 Paypal 2025-06-07 07:11:59.924664

1004 Paypal 2025-06-07 07:12:59.924664

Remove them if they’re not meaningful:

# Drop duplicates

df_demo = df_demo.drop_duplicates()

Why this matters: Duplicates can skew distributions and inflate model confidence unfairly.
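Exact duplicates are only one kind of redundancy. As a sketch, the example below also counts rows that repeat a business key (here an assumed combination of user_id and order_date) while differing in other columns, which usually deserves a closer look before anything is dropped.

```python
import pandas as pd

orders = pd.DataFrame({
    "user_id": [1, 1, 2, 2],
    "order_date": pd.to_datetime(
        ["2023-01-01", "2023-01-01", "2023-01-02", "2023-01-02"]
    ),
    "price": [9.99, 9.99, 20.00, 25.00],
})

exact_dupes = int(orders.duplicated().sum())                                 # all columns identical
key_dupes = int(orders.duplicated(subset=["user_id", "order_date"]).sum())   # same key, any values
all_copies = orders[orders.duplicated(keep=False)]                           # every copy, not just repeats

print(exact_dupes, key_dupes)
print(all_copies)
```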

6. Sampling for Large Datasets

When you’re dealing with massive datasets—millions of rows or more—it’s often impractical (and unnecessary) to explore the entire thing right away. Loading it into memory might crash your notebook. Visualizing it might overload your browser. And even something as simple as df.head() won’t reveal much about the bigger picture.

This is where sampling comes in.

But sampling isn’t just about grabbing a random chunk of data and hoping it represents the whole. If your dataset is imbalanced—say, 95% of your rows belong to one product category—then a random sample might not include rare but important cases. That’s why we prefer stratified sampling.

Stratified sampling ensures that the distribution of key groups—like product categories, customer segments, or outcome classes—is preserved in the sample, even if you’re only looking at 5–10% of the data.

Let’s walk through an example using our demo dataset.

Suppose we want to take a 10% stratified sample based on 'product_category'. This ensures that all product categories are fairly represented in the sample, even if some are rare in the full dataset.

# Stratified sample by 'product_category' (10% of each group)

sample_df = df_demo.groupby('product_category', group_keys=False).sample(frac=0.1, random_state=42)

sample_df.head()

user_id order_date product_category price quantity country \

464 1098 2023-01-20 08:00:00 Books 82.74 3.0 UK

774 1028 2023-02-02 06:00:00 Books 23.26 2.0 nan

860 1050 2023-02-05 20:00:00 Books 9.14 3.0 US

344 1037 2023-01-15 08:00:00 Books 35.64 5.0 UK

875 1037 2023-02-06 11:00:00 Books 82.36 1.0 IN

payment_method timestamp

464 Net Banking 2025-06-07 14:52:59.924664

774 Paypal 2025-06-07 20:02:59.924664

860 Credit Card 2025-06-07 21:28:59.924664

344 nan 2025-06-07 12:52:59.924664

875 nan 2025-06-07 21:43:59.924664

Let’s break this down:

- groupby('product_category') splits the data by each category (e.g., 'Electronics', 'Books', etc.).

- .sample(frac=0.1) takes 10% from each group independently.

- group_keys=False prevents pandas from adding the group label as an index.

- random_state=42 ensures reproducibility of the sampling process.

Now, if you check the value counts before and after sampling, you’ll see that the relative proportions are preserved:

# Full dataset distribution

print(df_demo['product_category'].value_counts(normalize=True))

# Sampled dataset distribution

print(sample_df['product_category'].value_counts(normalize=True))

product_category

Electronics 0.173

books 0.173

Books 0.172

electronics 0.167

Clothing 0.164

Home & Kitchen 0.151

Name: proportion, dtype: float64

product_category

Books 0.171717

Electronics 0.171717

books 0.171717

electronics 0.171717

Clothing 0.161616

Home & Kitchen 0.151515

Name: proportion, dtype: float64

The distributions will closely match. This makes your exploratory analysis—histograms, boxplots, scatter matrices—more reliable and representative of the full dataset, without needing to analyze every row.

Use case: You want to plot feature distributions, correlations, or check for outliers, but loading the full dataset would be overkill or even infeasible.

Stratified sampling gives you the best of both worlds:

- Speed and efficiency for fast iteration

- Integrity and balance to retain important signals across groups

When you eventually move into modeling, you’ll still want to work with the full dataset. But for early-stage exploration and sanity checks, this approach helps you get quick insights while keeping your machine happy.

7. Streaming Data Windows

Sometimes your dataset isn’t a static snapshot—it’s a moving river of updates. Think IoT sensor readings, server logs, or clickstream events—these arrive continuously, often minute by minute, or even faster.

Our demo dataset mimics this with a timestamp column populated at 1-minute intervals. In real life, analyzing this kind of streaming data requires a mindset shift. You’re not just asking “what’s in the data,” but also “when did this happen” and “what’s happening right now?”

Let’s simulate how you’d inspect just the most recent activity, and how to apply a rolling window to observe short-term trends.

Step 1: Focus on Recent Data

First, convert the timestamp column to a proper datetime format (if it isn’t already). Then filter the data to include only the last 24 hours.

import pandas as pd

# Ensure the timestamp is in datetime format

df_demo['timestamp'] = pd.to_datetime(df_demo['timestamp'])

# Filter: only rows from the past 1 day

recent_df = df_demo[df_demo['timestamp'] > pd.Timestamp.now() - pd.Timedelta(days=1)]

This gives you a subset of the data that simulates what you’d see if you’re monitoring a live dashboard or investigating an incident from the last day.

Step 2: Rolling Windows — See Smoothed Trends

Raw minute-level data is often noisy. To get a better sense of how a metric behaves over time, use a rolling average. For example, the average quantity ordered over the past 30 minutes:

# Set timestamp as index and compute rolling average

rolling_avg = recent_df.set_index('timestamp')['quantity'].rolling('30min').mean()

This smooths out sudden spikes and dips, giving you a time-aware trendline—perfect for understanding demand surges, server load, or behavioral changes over time.

Step 3: Visualize the Trend

You can use matplotlib or plotly to visualize the rolling trend:

import matplotlib.pyplot as plt

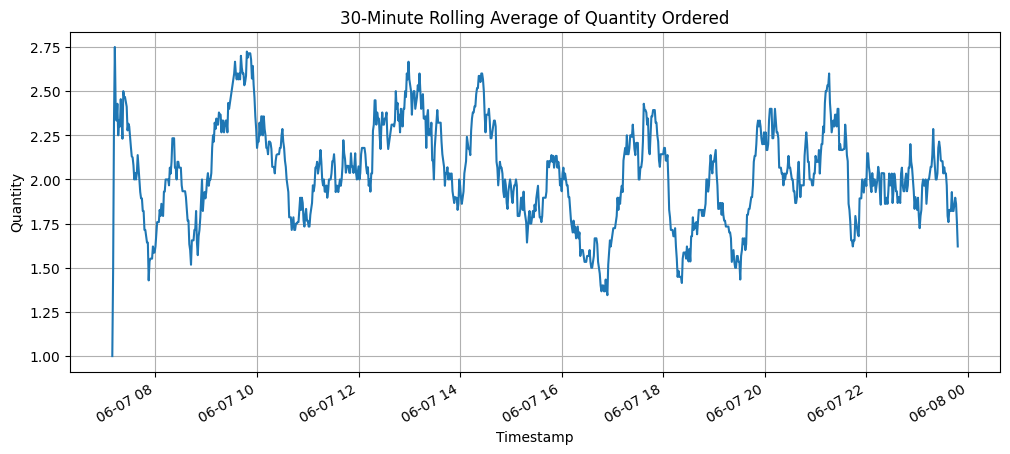

rolling_avg.plot(figsize=(12, 5), title='30-Minute Rolling Average of Quantity Ordered')

plt.xlabel('Timestamp')

plt.ylabel('Quantity')

plt.grid(True)

plt.show()

This kind of line plot lets you see the pulse of your system over time.

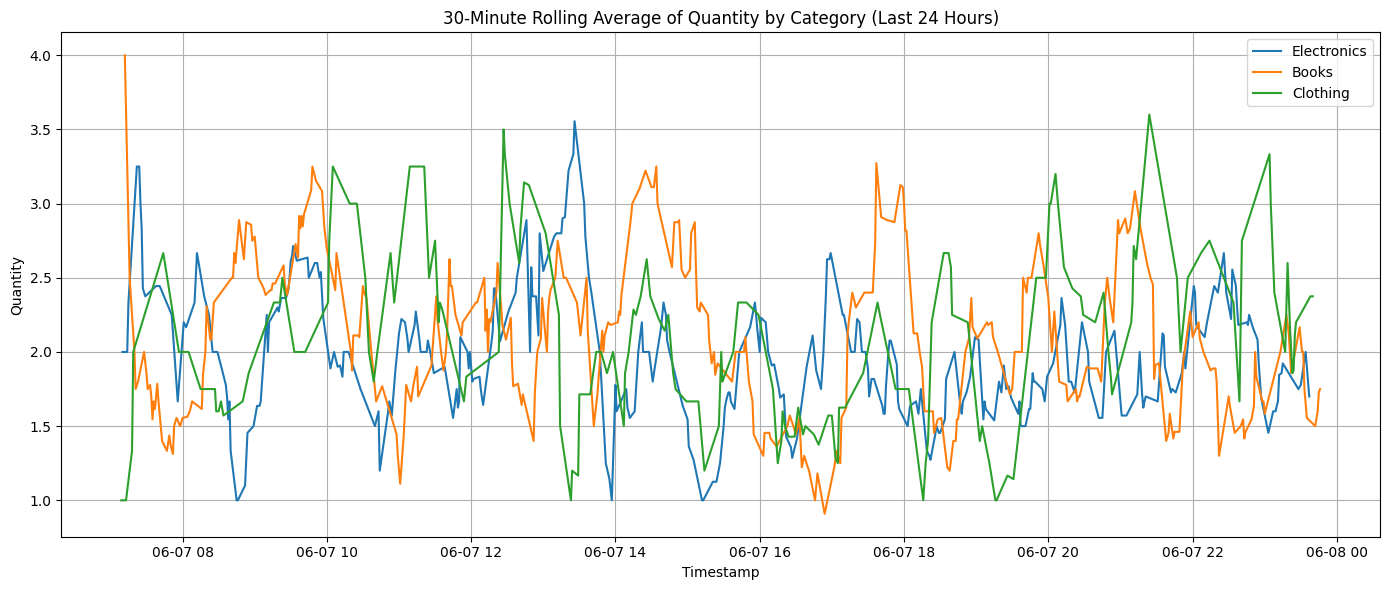

Sometimes, it’s not enough to look at overall trends—you want to understand what’s happening within a specific product category over time. For example, are orders for Electronics spiking late at night? Are Books steadily declining?

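Let’s compare three categories (Electronics, Books, and Clothing) and compute a 30-minute rolling average of the quantity ordered for each, just like a live dashboard might do in production. Lower-casing the category names first pools variants such as 'Books' and 'books' into one series.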

import pandas as pd

import matplotlib.pyplot as plt

# Ensure timestamp is in datetime format

df_demo['timestamp'] = pd.to_datetime(df_demo['timestamp'])

# List of product categories to compare

categories = ['electronics', 'books', 'clothing']

# Initialize the plot

plt.figure(figsize=(14, 6))

# Loop through each category and plot its rolling average

for cat in categories:
    # Match on lower-cased names and keep only the last 24 hours
    mask = (
        (df_demo['product_category'].str.lower() == cat)
        & (df_demo['timestamp'] > pd.Timestamp.now() - pd.Timedelta(days=1))
    )
    cat_df = df_demo[mask].copy()
    cat_df = cat_df.set_index('timestamp').sort_index()
    rolling_avg = cat_df['quantity'].rolling('30min').mean()
    plt.plot(rolling_avg, label=cat.capitalize())

# Plot styling

plt.title('30-Minute Rolling Average of Quantity by Category (Last 24 Hours)')

plt.xlabel('Timestamp')

plt.ylabel('Quantity')

plt.legend()

plt.grid(True)

plt.tight_layout()

plt.show()

This type of analysis is useful when:

- Monitoring live category-specific demand in e-commerce.

- Detecting anomalous behavior in one product class (e.g., bot abuse or flash sale spikes).

- Supporting real-time inventory decisions or dynamic pricing strategies.

Why this matters:

In time-sensitive domains, patterns change quickly. Analyzing only static aggregates (like overall averages) hides this.

Whether you’re detecting anomalies, spotting user drop-offs, or reacting to a spike in activity, EDA for time-series or streaming data must respect temporal context.

Bonus: You can also compute a rolling standard deviation, cumulative sums, or time-based groupings (e.g., hourly totals with resample('h')) to get more nuanced insight from time-ordered data.
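Here is a minimal sketch of those three operations on a synthetic minute-level series. It builds its own data rather than reusing df_demo, so it runs on its own.

```python
import numpy as np
import pandas as pd

rng = np.random.default_rng(42)
idx = pd.date_range("2023-01-01", periods=180, freq="min")   # three hours of minute data
qty = pd.Series(rng.poisson(lam=2, size=180), index=idx, name="quantity")

rolling_std = qty.rolling("30min").std()   # short-term volatility
running_total = qty.cumsum()               # cumulative units ordered
hourly_total = qty.resample("h").sum()     # one row per clock hour

print(rolling_std.tail(3))
print(running_total.tail(3))
print(hourly_total)
```

The first rolling standard deviation is NaN because a single observation has no spread.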

Summary: High-Level Scanning Before Deep Dive

Initial inspection is like reading the back cover of a novel before committing to the story. You’re looking for:

- What’s there (dimensions, types, examples)

- What’s missing (nulls, structure, context)

- What needs fixing before any serious analysis

With your first scan complete, you’re ready to begin richer univariate and multivariate analysis in the next steps of EDA.

Key EDA Techniques: A Practical Guide for Data Scientists

Exploratory Data Analysis (EDA) is the cornerstone of any data science project. Before jumping into preprocessing, feature engineering, or modeling, a skilled data scientist asks: What story does the data tell? EDA is about uncovering the dataset’s structure, quirks, and patterns through a blend of statistical rigor, visualization, and domain intuition. This section equips you with a robust toolkit to interrogate your data systematically, ensuring you make informed decisions for modeling and deployment.

Here’s what we cover in this enhanced section:

- Univariate Analysis: Understand the distribution, spread, and anomalies of individual variables.

- Bivariate and Multivariate Analysis: Explore relationships and interactions between variables.

- Missing Data Analysis: Assess patterns and implications of missing values.

- Time-Series and Temporal Analysis: Detect trends, seasonality, or drift in time-based data.

- Domain-Specific Checks: Contextualize findings for industries like healthcare, finance, or e-commerce.

- Statistical Tests: Validate assumptions and quantify relationships.

- Bias and Fairness Audits: Ensure ethical integrity and avoid biased outcomes.

- Production Readiness Insights: Prepare for deployment with checks for data drift, pipeline stability, and monitoring.

EDA isn’t a one-size-fits-all checklist—it’s a dynamic process tailored to your data and objectives. Each technique serves a purpose: univariate analysis reveals the shape and quirks of individual features, bivariate/multivariate analysis uncovers predictive relationships, and domain-specific checks ensure anomalies aren’t mistaken for noise. For example, a zero in a medical dataset (e.g., blood pressure) might signal an error, while a zero in e-commerce (e.g., cart value) could be valid. Statistical tests formalize your hypotheses, bias audits safeguard fairness, and production checks ensure your model stays robust in the wild.

Think of EDA as a diagnostic phase: you’re not just summarizing data—you’re building intuition to drive better modeling, feature selection, and business decisions. Let’s dive into the techniques, starting with univariate analysis.

A. Univariate Analysis

Every journey into a dataset begins with understanding its individual parts. Univariate analysis is the practice of examining each variable in isolation—one feature at a time—to understand its nature, distribution, variability, and any anomalies hiding in plain sight. While it may sound elementary, this step is foundational: before we compare variables or feed them into models, we need to grasp their standalone behavior.

This kind of analysis helps answer questions like:

- What does the distribution of a feature look like?

- Are there outliers that might skew our results?

- Is the variable skewed or symmetric?

- Are there rare categories we need to consolidate?

Univariate analysis is especially important for catching data issues early, informing decisions about transformations, binning, or encoding, and even helping choose appropriate modeling techniques later. For instance, a highly skewed variable may need to be log-transformed, and a categorical variable with dozens of rare levels might benefit from grouping.

In the sections that follow, we’ll dive deep into both numerical and categorical features—showing how to analyze them using summary statistics, visualizations, and Python code, all while discussing what those results mean in practice. Whether you’re working with prices, quantities, product categories, or countries, the ability to look closely and reason about a single column of data is a core data science skill. Let’s begin.

A.1. Univariate Analysis: Numerical Features

Univariate analysis focuses on understanding a single variable’s central tendency, spread, shape, and anomalies. Let’s use price and quantity as example numerical features in a retail dataset.

1. Descriptive Statistics

Start with describe() in pandas for a snapshot of key metrics:

print(df_demo[['price', 'quantity']].describe())

price quantity

count 955.000000 975.000000

mean 46.910660 2.025641

std 46.221301 1.429507

min 0.010000 0.000000

25% 13.520000 1.000000

50% 32.850000 2.000000

75% 65.940000 3.000000

max 291.780000 9.000000

Key metrics include:

- Count (n): Number of non-null values, flagging potential missingness.

- Mean (\(\mu\)): Average value, sensitive to outliers:

\[\mu = \frac{1}{n} \sum_{i=1}^{n} x_i\]

- Standard Deviation (\(\sigma\)): Measures spread around the mean:

\[\sigma = \sqrt{\frac{1}{n} \sum_{i=1}^{n} (x_i - \mu)^2}\]

This is the population form. The value reported by describe() is the sample standard deviation, which divides by \(n - 1\) instead of \(n\); at this sample size the two are nearly identical.

- Quartiles (Q1, Q2, Q3): Divide data into four equal parts, revealing skewness and outlier potential.

- Min/Max: Highlight extreme values that may need investigation.

Practical Insight: Compare mean and median to detect skewness. If \(\mu > \text{median}\), the distribution is typically right-skewed (e.g., high-priced outliers in price). If \(\mu < \text{median}\), it is typically left-skewed. Use the median for robust central tendency in skewed data.

Actionable Tip: If the count is much lower than expected, investigate missing data patterns (see Missing Data Analysis below).
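A tiny worked example makes the mean-versus-median comparison concrete, and also shows the two conventions for standard deviation: dividing by n (the population form) and dividing by n - 1 (the pandas default). The five prices are made up for illustration.

```python
import numpy as np
import pandas as pd

prices = pd.Series([5.0, 8.0, 9.0, 12.0, 150.0])   # one high-priced outlier

mean, median = prices.mean(), prices.median()
pop_std = prices.std(ddof=0)     # divides by n
sample_std = prices.std()        # pandas default ddof=1 divides by n - 1

print(mean, median)              # the outlier pulls the mean well above the median
print(pop_std, sample_std)
```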

2. Skewness and Kurtosis

These metrics quantify distribution shape:

- Skewness (\(\gamma_1\)): Measures asymmetry:

\[\gamma_1 = \frac{\frac{1}{n} \sum_{i=1}^{n} (x_i - \mu)^3}{\sigma^3}\]

  - Positive (> 0): Long right tail (e.g., premium-priced items).

  - Negative (< 0): Long left tail (e.g., discounts or capped values).

  - Near 0: Symmetric distribution.

- Kurtosis (\(\gamma_2\)): Measures tail weight and outlier prevalence:

\[\gamma_2 = \frac{\frac{1}{n} \sum_{i=1}^{n} (x_i - \mu)^4}{\sigma^4} - 3\]

  - Positive: Heavy tails (more outliers).

  - Negative: Light tails (fewer outliers).

from scipy.stats import skew, kurtosis

print("Skewness of Price:", skew(df_demo['price'].dropna()))

print("Kurtosis of Price:", kurtosis(df_demo['price'].dropna()))

Skewness of Price: 1.8796745737176626

Kurtosis of Price: 4.3079925960441265
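To see what these functions compute, the sketch below evaluates both formulas by hand on a synthetic right-skewed sample and compares the results with scipy's defaults, which use the same divide-by-n moments and report excess kurtosis.

```python
import numpy as np
from scipy.stats import skew, kurtosis

rng = np.random.default_rng(7)
x = rng.exponential(scale=50, size=1_000)   # synthetic right-skewed sample

mu = x.mean()
sigma = x.std()                                # NumPy default divides by n
g1 = np.mean((x - mu) ** 3) / sigma ** 3       # skewness
g2 = np.mean((x - mu) ** 4) / sigma ** 4 - 3   # excess kurtosis

print(g1, skew(x))
print(g2, kurtosis(x))
```

Note that pandas' Series.skew() and Series.kurt() apply a small-sample bias correction, so their values differ slightly from these.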

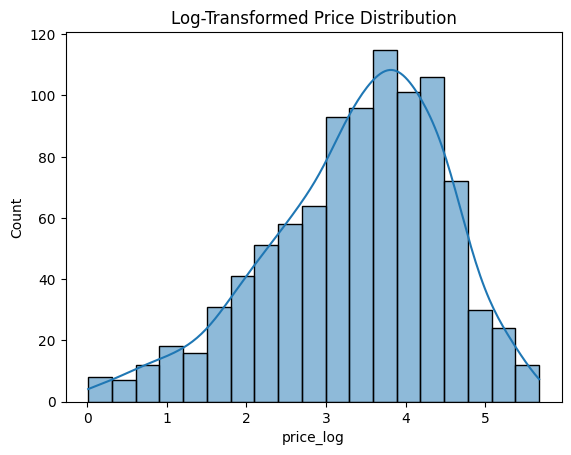

Why It Matters: Skewed features may require transformations (e.g., log, square root, or Box-Cox) to stabilize variance for models like linear regression. High kurtosis signals potential outliers that could destabilize gradient-based algorithms.

Real-World Example: A skewness of 1.88 in price suggests a few high-priced items inflating the mean. Consider:

import numpy as np

import seaborn as sns

import matplotlib.pyplot as plt

df_demo['price_log'] = np.log1p(df_demo['price']) # Log-transform to reduce skewness

sns.histplot(df_demo['price_log'], kde=True)

plt.title('Log-Transformed Price Distribution')

plt.show()

3. Visualizations

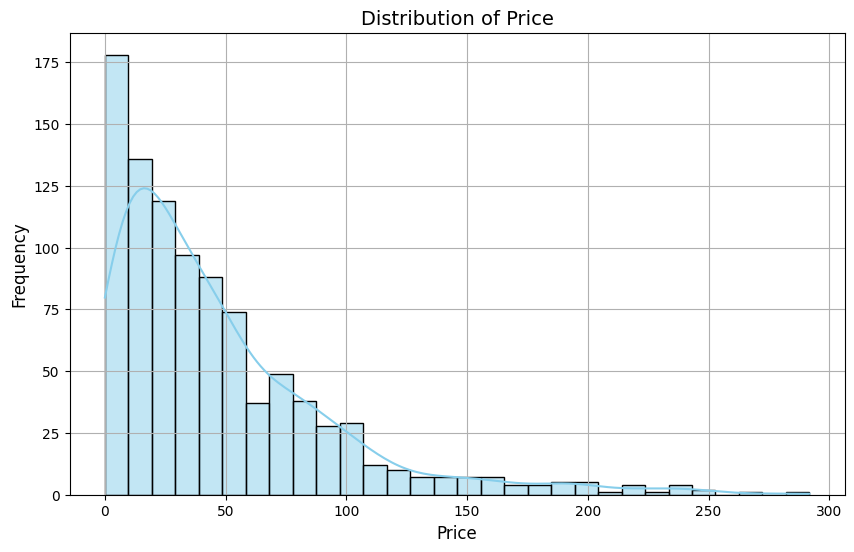

Numbers tell you what’s happening in the data—but visuals show you how and why. When it comes to understanding a numerical feature like price, nothing beats a solid visualization to uncover insights that would otherwise remain hidden in summary statistics. Plotting helps you detect distribution shapes, data skew, outliers, anomalies, and even hints of underlying data-generating processes.

Let’s walk through three visual tools: histograms, box plots, and interactive charts—each serving a unique purpose in the univariate analysis toolbox.

a. Histogram with KDE: Distribution Shape

A histogram slices your data into bins and stacks up the frequency of observations in each bin. This gives a direct picture of the data distribution.

plt.figure(figsize=(10, 6))

sns.histplot(df_demo['price'], kde=True, color='skyblue', bins=30)

plt.title('Distribution of Price', fontsize=14)

plt.xlabel('Price', fontsize=12)

plt.ylabel('Frequency', fontsize=12)

plt.grid(True)

plt.show()

What to look for:

- Skewness: Does the histogram lean left or right? A long right tail indicates positive skew (common in income or price data).

- Modality: One peak (unimodal)? Multiple peaks (bimodal/multimodal)? Peaks could reflect natural groupings in the data—like luxury vs. budget products.

- Zero-inflation: Do you see a giant spike at zero or near-zero values? This could signal missing/placeholder values, or a real-world phenomenon (e.g., free products).

- Gaps or Cliffs: Missing ranges could point to systematic filtering or censoring in the data.

KDE (Kernel Density Estimate):

Setting kde=True overlays a smooth density curve on top of the histogram. KDE doesn’t bin data; it estimates the probability density function directly using kernels (typically Gaussian).

This helps:

- Smooth jagged histograms caused by sparse bins

- Highlight subtle shoulders or tails in the distribution

- Compare distribution shape visually across multiple variables (later in bivariate analysis)
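To make the idea of kernel-based density estimation concrete, here is a small sketch using scipy's gaussian_kde directly rather than through seaborn. The two-cluster synthetic data (a "budget" and a "premium" group) is an assumption for illustration. The sketch checks that the estimated density integrates to roughly 1 and looks for local maxima as a rough hint of modality.

```python
import numpy as np
from scipy.stats import gaussian_kde

# Synthetic bimodal prices: a budget cluster and a premium cluster (assumption)
rng = np.random.default_rng(1)
prices = np.concatenate([rng.normal(20, 3, 500), rng.normal(60, 5, 200)])

kde = gaussian_kde(prices)  # Gaussian kernels; bandwidth from Scott's rule by default
grid = np.linspace(prices.min() - 20, prices.max() + 20, 2000)
density = kde(grid)

# A density should integrate to about 1 (simple Riemann sum over the grid)
step = grid[1] - grid[0]
area = float(density.sum() * step)

# Interior grid points that are higher than both neighbours are local peaks
is_peak = (density[1:-1] > density[:-2]) & (density[1:-1] > density[2:])
peak_locations = grid[1:-1][is_peak]

print(f"area under KDE: {area:.3f}")
print("approximate peak locations:", np.round(peak_locations, 1))
```

The number of peaks depends on the bandwidth: a wider bandwidth can merge nearby modes, and a narrower one can invent spurious bumps, so treat this as a hint rather than a test.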

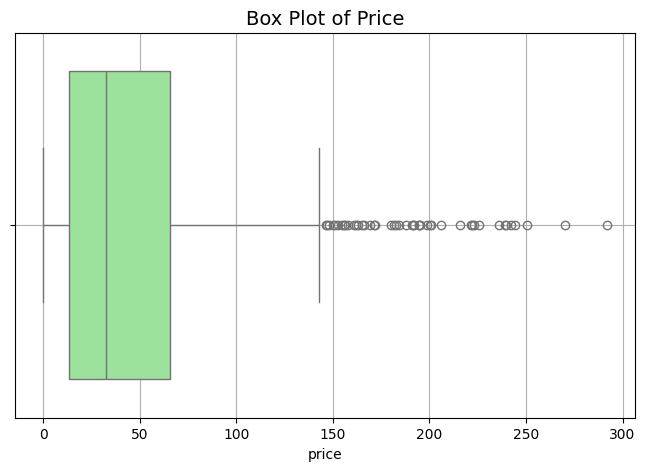

b. Box Plot: Outliers and Spread

While histograms give you frequency, box plots summarize quartiles, median, and outliers in one compact view. They’re also excellent for comparing multiple variables or segments side by side.

plt.figure(figsize=(8, 5))

sns.boxplot(x=df_demo['price'], color='lightgreen')

plt.title('Box Plot of Price', fontsize=14)

plt.grid(True)

plt.show()

Interpretation:

- The box captures the interquartile range (IQR = Q3 - Q1), where the bulk of your data lives.

- The line in the box shows the median.

- Whiskers extend to the most extreme data points that lie within 1.5×IQR of the box edges (standard Tukey definition).

- Dots beyond whiskers are outliers—values that may warrant removal, transformation, or further investigation.

Real-World Insight:

A wide box? High variability. A box close to one end? Skewed data. Outliers? Possibly data errors, rare events, or heavy-tailed distributions. You’ll want to decide case by case: are they noise, or signal?
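The numbers behind a box plot are easy to reproduce, which helps when you need to report them rather than eyeball them. The following is a minimal sketch, assuming the usual Tukey convention with a 1.5×IQR fence; the helper name box_plot_stats and the tiny price array are hypothetical.

```python
import numpy as np

def box_plot_stats(values):
    """Quartiles, whisker ends, and outlier count under the 1.5*IQR rule."""
    x = np.asarray(values, dtype=float)
    x = x[~np.isnan(x)]                       # ignore missing values
    q1, median, q3 = np.percentile(x, [25, 50, 75])
    iqr = q3 - q1
    lower_fence = q1 - 1.5 * iqr
    upper_fence = q3 + 1.5 * iqr
    inside = x[(x >= lower_fence) & (x <= upper_fence)]
    return {
        "q1": q1,
        "median": median,
        "q3": q3,
        # Whiskers end at actual observations, not at the fences themselves
        "whisker_low": inside.min(),
        "whisker_high": inside.max(),
        "n_outliers": int(x.size - inside.size),
    }

# Hypothetical prices with one extreme value
prices = np.array([10, 12, 13, 14, 15, 16, 18, 20, 95], dtype=float)
stats = box_plot_stats(prices)
print(stats)
```

Here the quartiles are 13, 15, and 18, so the upper fence sits at 25.5. The upper whisker stops at 20, the largest observation inside the fence, and 95 is the single point drawn as an outlier.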

c. Interactive Plot: Exploratory Power for Big Data

When dealing with large datasets, static visuals can fall short. This is where Plotly shines—offering zoom, hover, filter, and export capabilities right inside your browser or Jupyter notebook.

import plotly.express as px

fig = px.histogram(df_demo, x='price', nbins=30, title='Interactive Price Distribution')

fig.update_layout(xaxis_title='Price', yaxis_title='Count')

fig.show()

Why use it?

- Zoom in on dense clusters

- Hover to inspect exact counts per bin

- Interactively filter by category (e.g., show price histograms by product_category)

- Makes your EDA more presentable for stakeholders, notebooks, or dashboards

Takeaways & Pro Tips

- Use histograms to study distribution shape, modality, and skewness.

- Add KDE overlays for better trend visualization, especially with continuous data.

- Use box plots to flag potential outliers and assess data spread quickly.

- Leverage interactive visualizations (e.g., Plotly) for large-scale or exploratory analysis—especially when you want to drill down by filters.

- Visuals are not just decoration—they’re diagnostic tools. A histogram with a long tail tells you to try log-scaling. A box plot with dozens of outliers might signal data entry errors or an expensive product tier you didn’t expect.

4. Outlier Detection

Use the Interquartile Range (IQR) method to identify outliers:

Q1 = df_demo['price'].quantile(0.25)

Q3 = df_demo['price'].quantile(0.75)

IQR = Q3 - Q1

outliers = df_demo[(df_demo['price'] < Q1 - 1.5*IQR) | (df_demo['price'] > Q3 + 1.5*IQR)]

print(f"Number of outliers in price: {outliers.shape[0]}")

Number of outliers in price: 46

Mathematically:

\[\text{Outliers if } x < Q_1 - 1.5 \times \text{IQR} \quad \text{or} \quad x > Q_3 + 1.5 \times \text{IQR}\]

Why It Matters: Outliers can skew models (e.g., linear regression) or be critical signals (e.g., fraud detection). In retail, high price outliers might be luxury items, not errors.

Actionable Tip: Use domain knowledge to decide whether to cap, transform, or retain outliers. For example, cap extreme prices:

df_demo['price_capped'] = df_demo['price'].clip(upper=Q3 + 1.5*IQR)

Advanced Technique: For multivariate outlier detection, consider isolation forests or DBSCAN:

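from sklearn.ensemble import IsolationForest

# contamination is the assumed share of outliers; 0.1 is a starting guess to tune

iso = IsolationForest(contamination=0.1, random_state=42)

# fit_predict returns -1 for outliers and 1 for inliers; rows with NaN are dropped first

iso_labels = iso.fit_predict(df_demo[['price', 'quantity']].dropna())

print(f"Multivariate outliers detected: {(iso_labels == -1).sum()}")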
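DBSCAN, the other option mentioned above, flags points that do not belong to any dense region. Here is a minimal sketch on synthetic price and quantity data with three planted anomalies. The data, the eps=0.5 radius, and min_samples=5 are all assumptions chosen for illustration, and eps in particular usually needs tuning on scaled features.

```python
import numpy as np
from sklearn.cluster import DBSCAN
from sklearn.preprocessing import StandardScaler

# Synthetic (price, quantity) pairs plus three planted anomalies (assumption)
rng = np.random.default_rng(42)
inliers = np.column_stack([rng.normal(50, 10, 300), rng.normal(5, 1.5, 300)])
planted = np.array([[500.0, 5.0], [50.0, 100.0], [400.0, 80.0]])
X = np.vstack([inliers, planted])

# DBSCAN is distance-based, so put both features on a comparable scale first
X_scaled = StandardScaler().fit_transform(X)

# Points that are not within eps of any dense region receive the label -1
labels = DBSCAN(eps=0.5, min_samples=5).fit_predict(X_scaled)
noise_mask = labels == -1

print(f"Points flagged as noise: {noise_mask.sum()}")
print("Planted anomalies flagged:", noise_mask[-3:])
```

Unlike IsolationForest, DBSCAN has no contamination parameter: the number of flagged points falls out of the density settings, which can be an advantage when you have no prior guess for the outlier share.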

A.2. Univariate Analysis: Categorical Features

For categorical variables like product_category and country, focus on frequency, imbalance, and rare categories.

1. Frequency Distribution

print(df_demo['product_category'].value_counts(normalize=True))

product_category

Electronics 0.173134

Books 0.172139

books 0.172139

electronics 0.166169

Clothing 0.165174

Home & Kitchen 0.151244

Name: proportion, dtype: float64

Why It Matters: Class imbalance (e.g., 80% of products in one category) can bias models. Rare categories may need consolidation.

Actionable Tip: Use normalize=True to get proportions, helping identify dominant or rare categories.
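The output above lists Books and books, and Electronics and electronics, as separate categories, which suggests inconsistent casing rather than genuinely different groups. A minimal sketch of normalizing labels before counting follows; the small series is a hypothetical stand-in for df_demo['product_category'], and lower-casing plus whitespace stripping is only one possible cleaning rule.

```python
import pandas as pd

# Hypothetical labels with inconsistent casing and stray whitespace
raw = pd.Series(
    ["Electronics", "electronics", "Books", "books ", "Clothing", "Home & Kitchen"],
    name="product_category",
)

# Strip surrounding whitespace and lower-case so variants collapse into one label
normalized = raw.str.strip().str.lower()

counts_before = raw.value_counts()
counts_after = normalized.value_counts()

print("Distinct labels before:", raw.nunique())
print("Distinct labels after:", normalized.nunique())
print(counts_after)
```

Running the frequency analysis after this step gives a more honest picture of imbalance, since split labels can make a dominant category look smaller than it is.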

An interactive bar chart of the product category distribution:

import plotly.express as px

# Prepare data

category_counts = df_demo['product_category'].value_counts().reset_index()

category_counts.columns = ['category', 'count'] # Rename columns properly

# Plotly bar chart

fig = px.bar(

    category_counts,

    x='category',

    y='count',

    title='Product Category Distribution',

    labels={'category': 'Category', 'count': 'Count'}

)

fig.update_layout(xaxis_title='Category', yaxis_title='Count')

fig.show()

2. Rare Categories

In real-world datasets, especially with categorical variables like product_category, it’s common to encounter long tails—a handful of categories appear very frequently (e.g., “electronics”, “clothing”), while many others appear just a few times (e.g., “gardening tools”, “musical instruments”).

From a modeling standpoint, these rare or low-frequency categories pose several challenges:

- Noise vs. Signal: Rare categories may not contain enough data to capture a meaningful signal. They can introduce variance without much predictive power.

- Encoding Complexity: Techniques like one-hot encoding allocate an extra dimension for each unique category. Rare ones bloat the feature space unnecessarily and can lead to sparse, high-dimensional data.

- Overfitting Risk: Since rare categories might only appear a handful of times, models can mistakenly treat them as important, especially in tree-based models, resulting in overfitting.

Let’s address this with code:

# Calculate normalized frequency distribution

category_freq = df_demo['product_category'].value_counts(normalize=True)

# Identify categories with <1% frequency

rare_categories = category_freq[category_freq < 0.01].index.tolist()

print("Rare Categories:", rare_categories)

This step flags categories that account for less than 1% of the data — an intuitive threshold, though domain knowledge can suggest tighter or looser cutoffs.

Handling Rare Categories

A common strategy is to group them under a single label, like 'Other', so we can:

- Preserve frequency information.

- Avoid over-parameterizing our model.

- Keep category counts manageable in downstream encoding.

# Replace rare categories with 'Other'

df_demo['product_category_clean'] = df_demo['product_category'].apply(

    lambda x: 'Other' if x in rare_categories else x)

Now, product_category_clean is a transformed version where rare labels have been consolidated.

Why It Matters: Consolidating rare categories reduces noise, guards against model overfitting, and keeps encoded feature dimensions tractable—especially when working with models that don’t handle sparsity well.

If you’re working with models that require numerical inputs, like logistic regression or neural networks, use:

- Target Encoding: Replace categories with their average target outcome (e.g., average churn rate per category).

- Frequency Encoding: Replace each category with its frequency (raw count or normalized proportion).

Both methods keep the feature to a single numeric column instead of one column per category, and target encoding additionally incorporates signal from the target distribution. But be careful: target encoding should be applied with cross-validation or out-of-fold strategies to prevent data leakage.
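The two encodings can be sketched as follows. This is a minimal illustration, not a production encoder: the function names, the churned target column, and the tiny demo frame are all hypothetical. The out-of-fold version computes category means only from rows outside the current fold, and falls back to that fold's overall mean for categories it has not seen.

```python
import numpy as np
import pandas as pd
from sklearn.model_selection import KFold

def frequency_encode(categories: pd.Series) -> pd.Series:
    """Replace each category with its share of rows."""
    freq = categories.value_counts(normalize=True)
    return categories.map(freq)

def target_encode_oof(df, cat_col, target_col, n_splits=5, seed=42):
    """Out-of-fold target encoding: a row never sees its own target value."""
    encoded = pd.Series(np.nan, index=df.index, dtype=float)
    folds = KFold(n_splits=n_splits, shuffle=True, random_state=seed)
    for fit_idx, apply_idx in folds.split(df):
        fit_part = df.iloc[fit_idx]
        category_means = fit_part.groupby(cat_col)[target_col].mean()
        fallback = fit_part[target_col].mean()  # used for categories unseen in this fold
        values = df.iloc[apply_idx][cat_col].map(category_means).fillna(fallback)
        encoded.iloc[apply_idx] = values.to_numpy()
    return encoded

# Hypothetical data: 'garden' appears once, with a positive target
demo = pd.DataFrame({
    "product_category": ["books"] * 10 + ["electronics"] * 10 + ["garden"],
    "churned": [0, 1] * 5 + [0] * 10 + [1],
})
demo["category_freq"] = frequency_encode(demo["product_category"])
demo["category_target_oof"] = target_encode_oof(demo, "product_category", "churned")
print(demo.tail(3))
```

A naive target encoding would assign the single garden row a value of 1.0, which is exactly its own label. The out-of-fold version gives it the fallback mean instead, which is the leakage protection the text describes.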

A.3. Missing Data Analysis

Missing data isn’t just an inconvenience—it can bias your model, reduce accuracy, or invalidate assumptions if mishandled. In many real-world datasets, especially those from domains like healthcare, finance, or e-commerce, it’s not uncommon to find some percentage of null values scattered across features. So instead of jumping straight to filling them in, we first need to understand the nature, structure, and pattern of this missingness.

Step 1: Quantify Missingness

We begin by measuring the proportion of missing values per column:

missing_data = df_demo.isnull().mean() * 100

print("Percentage of missing values per column:\n", missing_data[missing_data > 0])

Sample output:

Percentage of missing values per column:

price 4.98

quantity 2.99

price_log 4.98

price_capped 4.98

dtype: float64

Why It Matters: A few percentage points might seem negligible, but their impact depends on how they’re distributed and whether the missingness is systematic.

Understanding Missingness Mechanisms

Not all missing values are created equal. Statisticians categorize them into:

- MCAR (Missing Completely At Random): The probability of a value being missing is unrelated to any other feature or the value itself. Example: data loss during transmission.

- MAR (Missing At Random): The missingness depends on observed data. For instance, price might be missing more often in product_category = 'donation'.

- MNAR (Missing Not At Random): Missingness depends on the unobserved value itself. Example: Users with extremely high incomes may intentionally omit their income.

Understanding which mechanism applies is critical:

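- MCAR allows deleting incomplete rows without introducing bias (at the cost of sample size); mean imputation preserves the mean but shrinks the variance.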

- MAR needs conditional imputation (e.g., grouped means).

- MNAR may require more complex models or external data.
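One practical way to probe the mechanism is to check whether the missingness indicator is associated with an observed column. The sketch below plants MAR-style gaps in synthetic data (an assumption: price is missing far more often for donation rows), compares missing rates per category, runs a chi-square test of independence, and then applies a conditional imputation with group medians.

```python
import numpy as np
import pandas as pd
from scipy.stats import chi2_contingency

# Synthetic data with missingness that depends on an observed column (assumption)
rng = np.random.default_rng(7)
n = 2000
demo = pd.DataFrame({
    "product_category": rng.choice(["books", "electronics", "donation"], size=n),
    "price": rng.gamma(2.0, 20.0, size=n),
})
p_missing = np.where(demo["product_category"] == "donation", 0.30, 0.02)
demo.loc[rng.random(n) < p_missing, "price"] = np.nan

# 1) Missing rate per category: large differences argue against MCAR
price_missing = demo["price"].isna()
rate_by_category = price_missing.groupby(demo["product_category"]).mean()
print(rate_by_category)

# 2) Chi-square test of independence between category and the missing flag
table = pd.crosstab(demo["product_category"], price_missing)
chi2, p_value, dof, _ = chi2_contingency(table)
print(f"chi2={chi2:.1f}, dof={dof}, p-value={p_value:.2e}")

# 3) Conditional imputation: fill each gap with the median of its own category
group_median = demo.groupby("product_category")["price"].transform("median")
demo["price_imputed"] = demo["price"].fillna(group_median)
```

A small p-value is evidence against MCAR, but it cannot separate MAR from MNAR: that distinction depends on values you did not observe, so it usually has to come from domain knowledge or external data.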

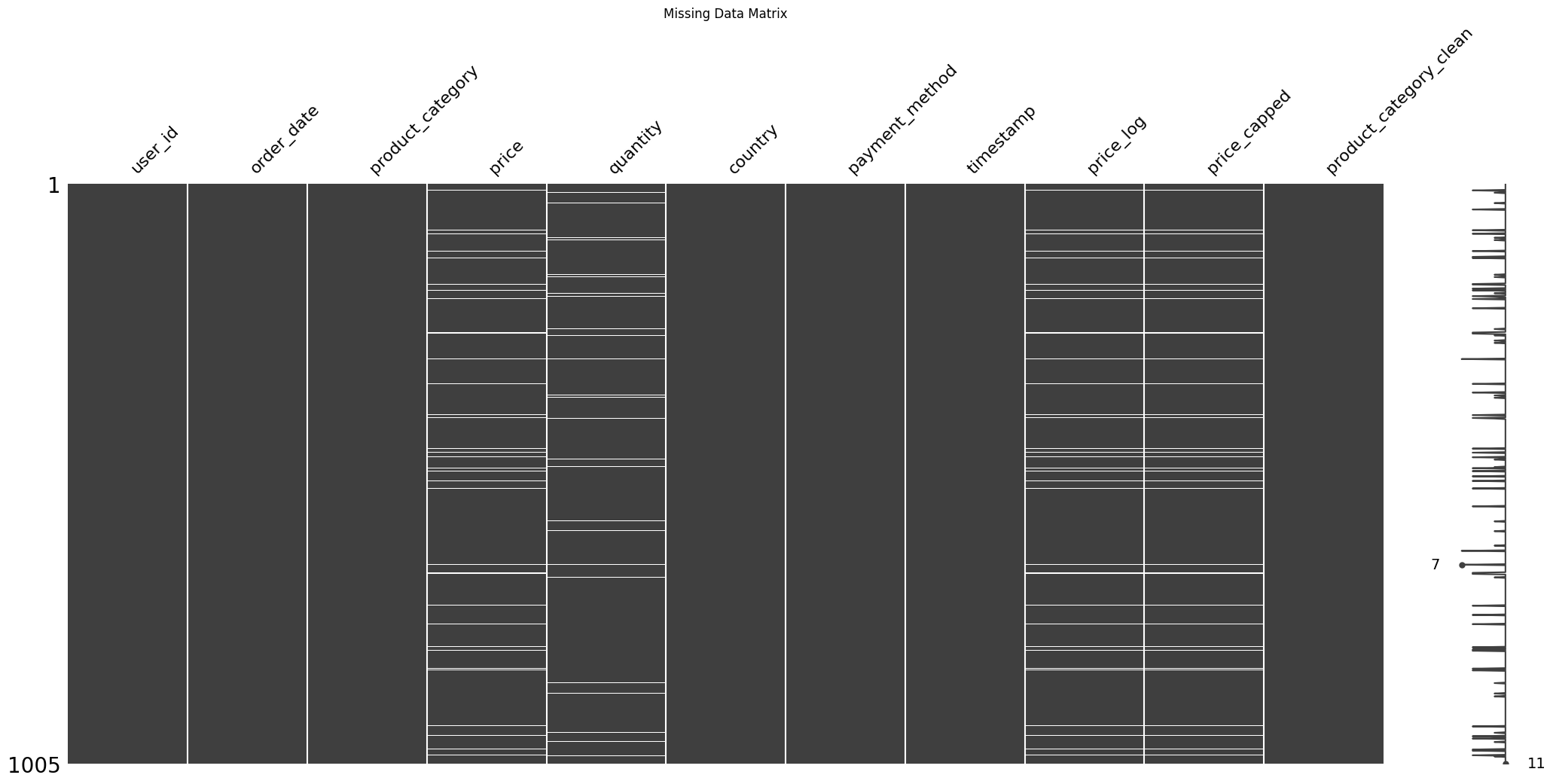

Step 2: Visualize Missingness

Tabular stats are useful, but visual patterns can often reveal structure—e.g., clustering of missing values in rows, patterns by time, or conditional gaps across features.

import missingno as msno

msno.matrix(df_demo)

plt.title('Missing Data Matrix')

plt.show()

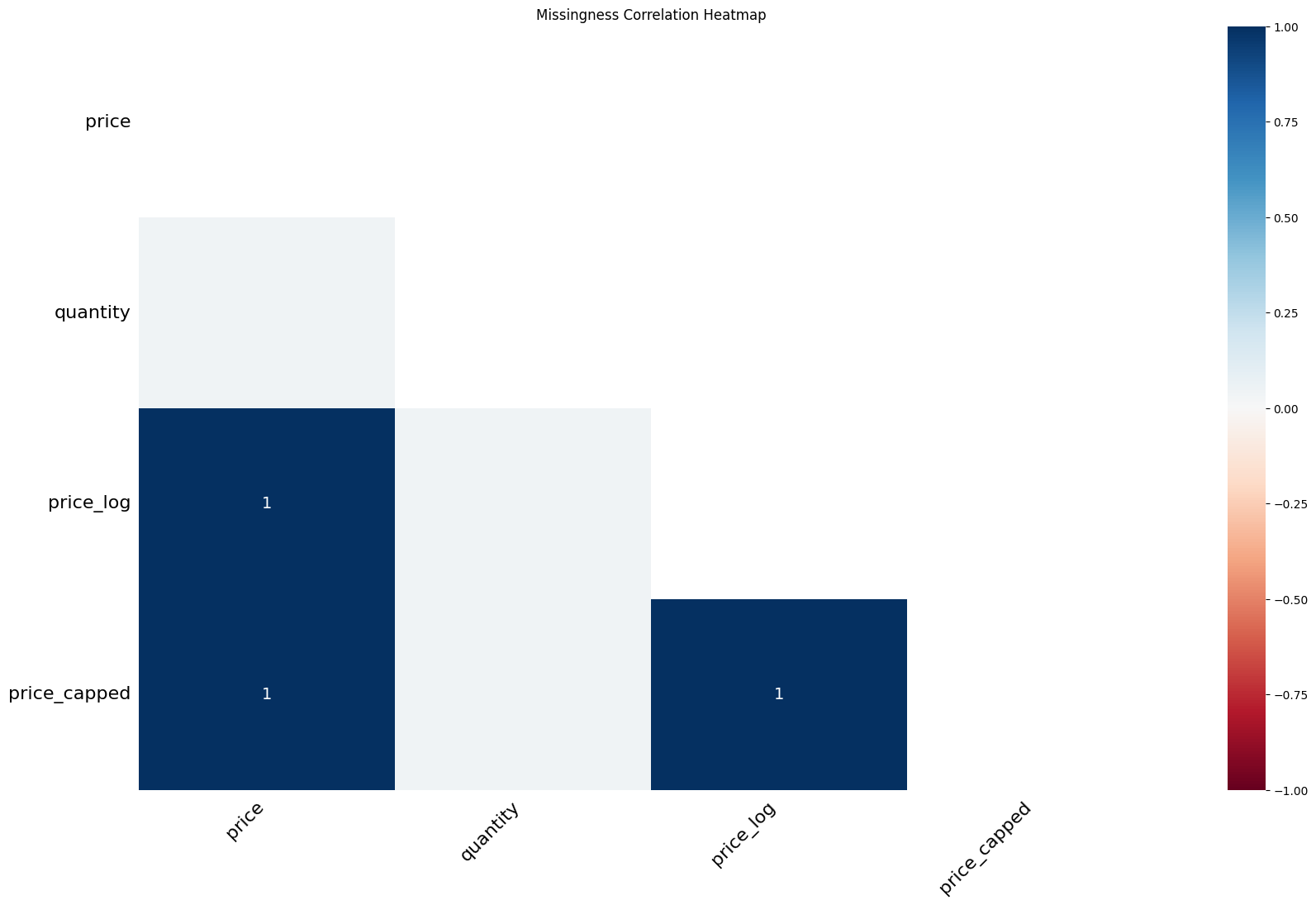

To explore correlation of missingness across columns, use:

msno.heatmap(df_demo)

plt.title('Missingness Correlation Heatmap')

plt.show()

This is especially helpful in identifying co-missing variables, which may share a cause (e.g., same source system).
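If missingno is not available, a rough equivalent of the nullity correlation can be computed with pandas alone by correlating the missing-value indicators. The sketch below uses synthetic data (an assumption) in which price_log is derived from price, mirroring the earlier output where both columns show the same missing percentage.

```python
import numpy as np
import pandas as pd

# Synthetic frame: price_log is derived from price, so it inherits price's gaps
rng = np.random.default_rng(3)
n = 500
demo = pd.DataFrame({
    "price": rng.gamma(2.0, 20.0, size=n),
    "quantity": rng.integers(1, 10, size=n).astype(float),
    "country": rng.choice(["US", "DE", "IN"], size=n),
})
demo.loc[rng.random(n) < 0.05, "price"] = np.nan
demo.loc[rng.random(n) < 0.03, "quantity"] = np.nan
demo["price_log"] = np.log1p(demo["price"])

# Keep only columns that are partially missing; constant flags have no correlation
null_flags = demo.isna()
partial = null_flags.loc[:, null_flags.any() & ~null_flags.all()]

# Correlation of the 0/1 missing indicators between columns
nullity_corr = partial.astype(int).corr()
print(nullity_corr.round(2))
```

A correlation of 1.0 between price and price_log simply reflects that one column was computed from the other. Derived columns like this are worth excluding before reading the heatmap, so that real co-missing patterns stand out.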

Actionable Tips and Modeling Implications

- Imputation Strategy Should Match Pattern: If price is missing only in a certain category, consider category-wise medians or a predictive model rather than a global mean.

- Don’t Impute Blindly: Mean or median imputation is fast, but it can bias the distribution and erase important variance. It’s best reserved for MCAR cases or features with minimal impact.

- Use Advanced Techniques for MAR/MNAR:

  - KNN Imputation: Fills missing values using the average of nearest neighbors.

  - Iterative Imputation (MICE): Builds a model to predict missing values using all other features.

  - Random Forest/Regression Models: Model-based imputers work well when missingness is predictable.

-