Data Preprocessing Part 2: Mastering the Mess with Effective Data Cleaning

- Data Quality Checks: Consistency, Duplicates, and Structural Integrity

- Creating a Demo Dataset for Quality Checks

- A. Why Data Quality Checks Are Essential

- B. Consistency and Type Validation: Ensuring Features Make Sense

- C. Duplicate Detection and De-duplication: Keeping Data Unique

- D. Structural and Relational Integrity: Validating Data Relationships

- E. Practical Guidance: When, Why, and How to Apply Checks

- F. Key Takeaways

- Handling Missing Data

- A. Taxonomy of Missingness: MCAR, MAR, and MNAR

- B. Diagnosing Missingness: Visual and Statistical Approaches

- C. Strategies for Addressing Missing Data

- D. Choosing the Right Strategy: A Decision Framework

- E. Practical Considerations for Model Performance and Production

- F. Common Pitfalls and How to Avoid Them

- G. Concluding Insight

- Outlier Treatment: Detection and Decisions Under Uncertainty

- Data Consistency: Fixing the Semantics of Structure

- Wrapping Up

“There is no such thing as clean data. Only data we haven’t looked at closely enough.”

In the lifecycle of a data science project, data cleaning occupies a paradoxical space: it is both foundational and often underappreciated. While model selection and performance metrics receive the spotlight, the integrity and usability of data remain the bedrock on which all modeling efforts rest. Without rigorous data cleaning, even the most advanced algorithms can draw unreliable, biased, or spurious conclusions.

Far from being a mere technical formality, data cleaning is a process of statistical discernment and contextual understanding. It demands answers to questions that are often more philosophical than procedural: What constitutes an error in this domain? When is a data point an outlier, and when is it a discovery? Should we impute what is missing, or respect the voids as part of the data’s signal? These are not checklist items — they are modeling choices that shape the epistemological boundaries of the analysis.

Real-world datasets rarely come ready for analysis. They are filled with inconsistencies: missing observations, duplicated records, invalid types, and subtle anomalies that may signal measurement error or reflect latent processes. These imperfections are not noise in the pejorative sense; they are statistical artifacts with the power to influence inferences and affect downstream model behavior.

This post focuses on the practice and theory of Data Cleaning, the second entry in our preprocessing series. We will explore principled methods for assessing data quality, delve into the statistical structure of missingness (including MCAR, MAR, and MNAR frameworks), and examine both simple and advanced imputation strategies. Outlier detection, too, will be treated not as an arbitrary trimming exercise but as a domain-sensitive process requiring careful methodological thought.

Topics Covered

- Conceptualizing and detecting data integrity issues

- Understanding different types of missing data and their statistical implications

- Evaluating and implementing imputation strategies, from mean substitution to model-based techniques

- Detecting and handling outliers using robust statistics and learning-based methods

Data cleaning is not a mechanical precursor to modeling. It is a modeling act in itself — one that encodes assumptions, defines boundaries, and ultimately determines what your algorithm will see and learn. In this post, we treat data cleaning not as housekeeping, but as epistemology in action.

Data Quality Checks: Consistency, Duplicates, and Structural Integrity

Before tackling missing values or outliers, you must ensure the dataset is structurally sound and internally consistent. Poor data quality can introduce silent errors that skew analyses, mislead models, or invalidate conclusions without raising obvious flags. This section provides a systematic, practical framework for identifying and resolving foundational data quality issues, complete with real-world scenarios and guidance on when, why, and how to apply these checks. To make these concepts concrete, we’ll first create a demo dataset (sales_data.csv) with intentional imperfections, then apply the quality checks to it, demonstrating how to uncover and address issues in practice.

Creating a Demo Dataset for Quality Checks

To illustrate the data quality checks, we’ll generate a synthetic sales dataset (sales_data.csv) that mimics a real-world e-commerce scenario. This dataset includes common issues like invalid types, duplicates, and inconsistent values, allowing us to apply and validate our cleaning techniques. The dataset will have columns for order ID, customer ID, customer name, order date, price, quantity, payment mode, and customer age, with deliberate errors to simulate real-world data challenges.

Here’s the Python code to create the dataset, followed by the quality checks applied to it.

import pandas as pd

import numpy as np

from datetime import datetime, timedelta

# Set random seed for reproducibility

np.random.seed(42)

# Generate synthetic sales data

n = 100

order_ids = [f"ORD{str(i).zfill(4)}" for i in range(1, n+1)]

customer_ids = [f"CUST{str(np.random.randint(1, 50)).zfill(3)}" for _ in range(n)]

customer_names = [

np.random.choice(["John Smith", "Jane Doe", "J. Smith", "John Smyth", "Alice Brown", None])

for _ in range(n)

]

order_dates = [

(datetime(2024, 1, 1) + timedelta(days=np.random.randint(0, 365))).strftime("%Y-%m-%d")

for _ in range(n)

]

prices = np.random.uniform(10, 500, n).round(2).tolist()

prices[5] = "N/A" # Introduce invalid type

prices[10] = -50.0 # Introduce negative price

quantities = np.random.randint(1, 10, n).tolist()

quantities[15] = -2 # Introduce negative quantity

payment_modes = [

np.random.choice(["Credit", "Debit", "Cash", "Other", None]) for _ in range(n)

]

ages = np.random.randint(18, 80, n).tolist()

ages[20] = 150 # Introduce invalid age

ages[25] = -5 # Introduce negative age

# Create DataFrame

data = {

"order_id": order_ids,

"customer_id": customer_ids,

"customer_name": customer_names,

"order_date": order_dates,

"price": prices,

"quantity": quantities,

"payment_mode": payment_modes,

"age": ages

}

# Introduce duplicates

data["order_id"][90:95] = ["ORD0001"] * 5 # Duplicate order IDs

df = pd.DataFrame(data)

# Save to CSV

df.to_csv("sales_data.csv", index=False)

df.head()

This script generates a dataset with:

- Invalid types: Text (“N/A”) in the price column.

- Domain violations: Negative prices, quantities, and unrealistic ages (e.g., 150, -5).

- Duplicates: Repeated order IDs and potential fuzzy duplicates in customer names.

- Missing values: Nulls in customer_name and payment_mode.

- Relational issues: Customer IDs that may not map to a valid customer table (simulated later).

We’ll now load this dataset and apply the quality checks described below, showing how to detect and address these issues.

| order_id | customer_id | customer_name | order_date | price | quantity | payment_mode | age | |

|---|---|---|---|---|---|---|---|---|

| 0 | ORD0001 | CUST039 | John Smith | 2024-02-21 | 96.2 | 7 | None | 54 |

| 1 | ORD0002 | CUST029 | John Smyth | 2024-09-24 | 18.86 | 9 | Cash | 53 |

| 2 | ORD0003 | CUST015 | J. Smith | 2024-10-21 | 252.01 | 5 | Cash | 52 |

| 3 | ORD0004 | CUST043 | J. Smith | 2024-04-22 | 97.62 | 1 | Credit | 36 |

| 4 | ORD0005 | CUST008 | John Smith | 2024-04-10 | 189.57 | 1 | Other | 37 |

A. Why Data Quality Checks Are Essential

Think of a dataset as a matrix \(\mathbf{D} \in \mathbb{R}^{n \times p}\), where rows are observations (e.g., customers, transactions) and columns are features (e.g., age, purchase amount). Data quality checks verify that:

- Entries are valid for their feature’s type and domain (e.g., no text in numeric fields).

- Rows are unique, avoiding duplicates that inflate counts or bias results.

- Relationships between variables (e.g., foreign keys, logical constraints) align with business rules.

Skipping these checks risks “garbage in, garbage out.” For example, a single duplicated transaction could double reported revenue, or an invalid timestamp could throw off time-series predictions. These checks aren’t just cleanup—they’re the foundation of trustworthy analysis.

B. Consistency and Type Validation: Ensuring Features Make Sense

Objective: Confirm that each column’s values match its expected data type and domain (e.g., numbers, categories, dates).

Why It Matters:

Type mismatches or invalid values (e.g., “N/A” in a price column) can break calculations or mislead models. For example, in our sales dataset, a text entry in the “price” column will crash a sum operation or skew revenue reports.

When to Use:

- At data ingestion: When loading raw data from CSV, APIs, or databases.

- Before computations: To ensure calculations (e.g., averages, sums) are valid.

- After merges: To catch errors introduced during data integration.

1. Type Enforcement

Each feature should map to a specific domain:

- Numeric: Continuous (e.g., price \(\in \mathbb{R}\)) or discrete (e.g., quantity \(\in \mathbb{Z}\)).

- Categorical: Values from a set (e.g., payment_status = {“paid”, “pending”, “failed”}).

- Temporal: Valid timestamps (e.g., ISO 8601 format).

Practical Example:

Using our demo dataset, let’s check if the “price” column is numeric and “order_date” is a valid timestamp.

import pandas as pd

df = pd.read_csv("sales_data.csv")

print(df.dtypes) # Inspect inferred types

df['price'] = pd.to_numeric(df['price'], errors='coerce') # Convert to numeric, flag invalid as NaN

df['order_date'] = pd.to_datetime(df['order_date'], errors='coerce') # Parse dates

# Check for NaNs introduced by coercion

print(f"Invalid prices: {df['price'].isna().sum()}")

print(f"Invalid dates: {df['order_date'].isna().sum()}")

order_id object

customer_id object

customer_name object

order_date object

price float64

quantity int64

payment_mode object

age int64

dtype: object

Invalid prices: 1

Invalid dates: 0

Insight: In our dataset, the “N/A” in the price column will be flagged as NaN, alerting us to investigate the source (e.g., data entry error). Use errors='coerce' to identify problematic entries without crashing the pipeline. Review flagged rows to decide whether to impute, drop, or investigate further.

2. Domain Constraints

Features often have logical bounds or allowed values:

- Age: [0, 120]

- Order quantity: Positive integers

- Categories: Predefined sets (e.g., payment modes)

Real-World Scenario:

In our sales dataset, prices or quantities below 0 are invalid, and ages outside [0, 120] are unrealistic. The “payment_mode” column should only contain expected categories (e.g., “Credit”, “Debit”, “Cash”, “Other”).

# Flag invalid ages

invalid_ages = df.query("age < 0 or age > 120")

print(f"Found {len(invalid_ages)} invalid age entries")

# Flag invalid prices and quantities

invalid_prices = df.query("price < 0")

invalid_quantities = df.query("quantity <= 0")

print(f"Invalid prices: {len(invalid_prices)}")

print(f"Invalid quantities: {len(invalid_quantities)}")

# Check categorical values

valid_categories = {'Credit', 'Debit', 'Cash', 'Other'}

invalid_payment_modes = df[~df['payment_mode'].isin(valid_categories)]

print(f"Invalid payment modes: {len(invalid_payment_modes)}")

Found 2 invalid age entries

Invalid prices: 1

Invalid quantities: 1

Invalid payment modes: 28

Why It’s Critical: In our dataset, negative prices (-50.0) or quantities (-2) could skew revenue or inventory calculations, while an age of 150 might distort customer demographics. Invalid payment modes (e.g., None) could break downstream analytics expecting fixed categories.

Trade-Off: Strict validation may flag legitimate but rare cases (e.g., a 100-year-old customer). Balance automation with manual review for edge cases.

C. Duplicate Detection and De-duplication: Keeping Data Unique

Objective: Identify and remove duplicate rows or entries that inflate counts or bias results.

Why It Matters:

Duplicates distort statistics and models. In our sales dataset, duplicated order IDs could inflate reported revenue, while repeated customer names might skew marketing analytics.

When to Use:

- After data collection: Logs, surveys, or IoT data often contain duplicates due to system errors.

- Post-merging: Joins can introduce duplicates if keys aren’t unique.

- Before modeling: To ensure i.i.d. assumptions hold for statistical or ML models.

Mathematical View: Duplicates reduce the effective sample size \(n_{\text{eff}}\) and bias empirical distributions. For a dataset with \(n\) rows and \(d\) duplicates, \(n_{\text{eff}} = n - d\).

1. Exact Duplicates

Rows where all columns are identical.

# Count duplicates

print(f"Duplicates: {df.duplicated().sum()}")

# Remove duplicates

df = df.drop_duplicates()

Duplicates: 0

Use Case: In our dataset, exact duplicates might occur if the data collection system logged the same transaction twice.

2. Subset-Based Duplicates

Duplicates based on key columns (e.g., same order ID).

# Check for duplicate order IDs

duplicate_orders = df[df['order_id'].duplicated(keep=False)]

print(f"Duplicate order IDs: {len(duplicate_orders)}")

# Keep latest transaction per order ID

df = df.sort_values('order_date').drop_duplicates(subset='order_id', keep='last')

Duplicate order IDs: 6

Use Case: Our dataset has intentional duplicate order IDs (ORD0001 appearing multiple times). Keeping the latest ensures we retain the most recent transaction.

3. Fuzzy Duplicates

Near-identical entries requiring string matching (e.g., “John Smith” vs. “J. Smith”).

from fuzzywuzzy import fuzz, process

# Find similar names

matches = process.extract("John Smith", df['customer_name'], limit=3, scorer=fuzz.token_sort_ratio)

print("Potential fuzzy duplicates for 'John Smith':", matches)

Potential fuzzy duplicates for 'John Smith': [('John Smith', 100, 12), ('J. Smith', 82, 84), ('John Smyth', 90, 66)]

Use Case: In our dataset, “John Smith” and “J. Smith” might represent the same customer. Fuzzy matching helps consolidate records for accurate customer counts.

Trade-Off: Fuzzy matching is computationally expensive and may over-match (e.g., different people with similar names). Use it selectively on high-impact fields like customer_name.

Why It’s Critical: In our dataset, duplicate order IDs could inflate revenue by counting the same transaction multiple times, while fuzzy duplicates in customer names could lead to overestimating unique customers.

D. Structural and Relational Integrity: Validating Data Relationships

Objective: Ensure multi-table datasets respect relational constraints (e.g., primary and foreign keys).

Why It Matters:

In relational databases, invalid keys break joins or lead to orphaned records. In our sales dataset, orders referencing non-existent customer IDs would produce incomplete reports.

When to Use:

- In multi-table systems: When working with databases or merged datasets.

- Before joins: To avoid nulls or errors in merged outputs.

- During ETL: To validate data pipelines.

Mathematical View:

- Primary key uniqueness: \(\text{PK}(A) \rightarrow \text{unique}(A)\).

- Foreign key validity: \(\text{FK}(B) \subseteq \text{PK}(A)\).

Practical Example:

Let’s simulate a customers table and ensure all customer IDs in our sales dataset are valid.

# Simulate customers table

customers = pd.DataFrame({

'customer_id': [f"CUST{str(i).zfill(3)}" for i in range(1, 45)] # Only 44 valid customers

})

# Check primary key uniqueness

assert df['order_id'].is_unique, "Duplicate order IDs found"

# Validate foreign keys

valid_customers = set(customers['customer_id'])

invalid_orders = df[~df['customer_id'].isin(valid_customers)]

print(f"Invalid customer IDs in orders: {len(invalid_orders)}")

Invalid customer IDs in orders: 7

Use Case: In our dataset, some customer IDs (e.g., CUST045 and above) don’t exist in the customers table, indicating orphaned orders that need resolution (e.g., data entry errors or missing customer records).

Trade-Off: Enforcing strict relational checks may slow down pipelines for large datasets. Consider sampling for initial validation.

E. Practical Guidance: When, Why, and How to Apply Checks

| Check Type | When to Use | Why It Matters | Tools/Techniques |

|---|---|---|---|

| Type and format checks | At data load, after merges, before computations | Prevents crashes and ensures calculations are valid | df.dtypes, pd.to_numeric, pd.to_datetime |

| Domain constraints | During EDA, before modeling | Ensures values are realistic and align with domain knowledge | query(), range filters, set comparisons |

| Duplicate detection | Post-collection, after merges, before modeling | Avoids inflated metrics or biased models | duplicated(), drop_duplicates(), fuzzywuzzy |

| Relational integrity | In multi-table systems, before joins, during ETL | Prevents join errors and orphaned records | isin(), assert, joins |

| Visual diagnosis | During EDA, before modeling, when debugging | Reveals hidden patterns and prioritizes cleaning efforts | missingno, seaborn, .value_counts() |

Decision Framework:

- Start with types and formats: These are quick and catch low-hanging issues.

- Check duplicates early: Especially for logs or user-generated data.

- Validate relationships: Critical for relational databases or merged datasets.

- Use visuals iteratively: To refine checks and guide deeper cleaning.

- Automate where possible: Build checks into ETL pipelines but allow manual review for edge cases.

Real-World Insights:

- E-commerce: Duplicate orders from system retries can inflate revenue. Use subset-based de-duplication on order IDs and timestamps.

- Healthcare: Invalid blood pressure readings can skew clinical studies. Enforce domain constraints and visualize distributions.

- Marketing: Fuzzy matching consolidates customer records, improving campaign targeting.

- Finance: Relational checks ensure transactions map to valid accounts, preventing audit failures.

F. Key Takeaways

Data quality checks are the bedrock of reliable analysis. They:

- Preserve statistical integrity by ensuring data reflects reality.

- Prevent downstream errors in modeling or reporting.

- Save time by catching issues early.

Treat these checks as axioms for your analysis, not optional cleanup. By applying these checks to our demo dataset, we identified invalid types, duplicates, and relational issues, demonstrating their practical impact. Embedding these practices into your workflow transforms raw data into a trustworthy asset.

Handling Missing Data

Missing data is not merely an inconvenience — it is a statistical phenomenon that can subtly distort conclusions, bias distributions, reduce power, and undermine the credibility of any analysis or model built atop it. Addressing missing data requires more than just a mechanical patchwork of imputation; it demands thoughtful modeling assumptions, diagnostic rigor, and awareness of the mechanism behind the missingness itself.

Before we discuss machine learning or prediction, we must understand this invisible data-generating process. In this section, we delve into the theoretical taxonomy of missingness, diagnostic tools to detect it, and principled strategies for imputation or deletion — all while grounding our discussion in practical scenarios, real-world production concerns, and strategies to align imputation with business objectives.

A. Taxonomy of Missingness: MCAR, MAR, and MNAR

Statistically, let \(X = \{X_1, X_2, ..., X_p\}\) denote the observed variables, and let \(R = \{R_1, R_2, ..., R_p\}\) be the missingness indicators, where:

- \(R_j = 1\) if \(X_j\) is observed,

- \(R_j = 0\) if \(X_j\) is missing.

The core insight is that the mechanism governing \(R\), which determines whether a value is missing, can be independent of or dependent on \(X\). This yields three conceptual categories:

1. MCAR: Missing Completely at Random

Definition: The probability of missingness is unrelated to both observed and unobserved data:

P(R | X_obs, X_mis) = P(R)

Interpretation: The missingness occurs purely by chance — like accidentally losing a survey page or a sensor failing uniformly across time.

Implication: MCAR data, though inefficient, leads to unbiased estimates when using listwise deletion or simple imputations. However, MCAR is rare in practice.

Example: A weather station’s sensor randomly fails due to power outages, unrelated to temperature or humidity values. In production, MCAR might occur in IoT systems with intermittent connectivity issues.

2. MAR: Missing at Random

Definition: The probability of missingness depends only on the observed data, not the missing values themselves:

P(R | X_obs, X_mis) = P(R | X_obs)

Example: Blood pressure might be missing more often for younger patients (age is observed), but not based on the actual missing BP value.

Implication: With MAR, imputations conditioned on observed variables can yield unbiased estimates — motivating methods like MICE or model-based imputers. This is the most commonly assumed (but not guaranteed) scenario.

Example: In e-commerce, customers who don’t fill out optional fields like “preferred payment method” may be younger or less frequent shoppers (observable traits), but the missing value isn’t tied to their choice of payment.

3. MNAR: Missing Not at Random

Definition: The probability of missingness depends on the unobserved value:

P(R | X_obs, X_mis) ≠ P(R | X_obs)

Example: Patients with very high income might decline to report it; their missingness is due to the value itself.

Implication: MNAR mechanisms lead to biased imputations unless the missingness process is modeled explicitly. This is the most difficult to handle and may require external data, sensitivity analysis, or Bayesian models.

Example: In a mental health survey, respondents with severe symptoms may skip questions about their condition, making missingness dependent on the severity (unobserved). In production, MNAR is common in sensitive domains like finance or healthcare where users self-censor.

Insight: MNAR often requires domain expertise to model. For instance, in finance, you might use proxy variables (e.g., ZIP code for income) or external datasets (e.g., census data) to approximate the missingness mechanism. Sensitivity analyses, such as testing imputations under different MNAR assumptions, can quantify uncertainty.

B. Diagnosing Missingness: Visual and Statistical Approaches

Understanding whether data is MCAR, MAR, or MNAR is often nontrivial. We may not know the true generating process, but we can gather evidence through exploratory and formal diagnostics.

1. Visualization

Visual tools help uncover patterns in missingness:

import missingno as msno

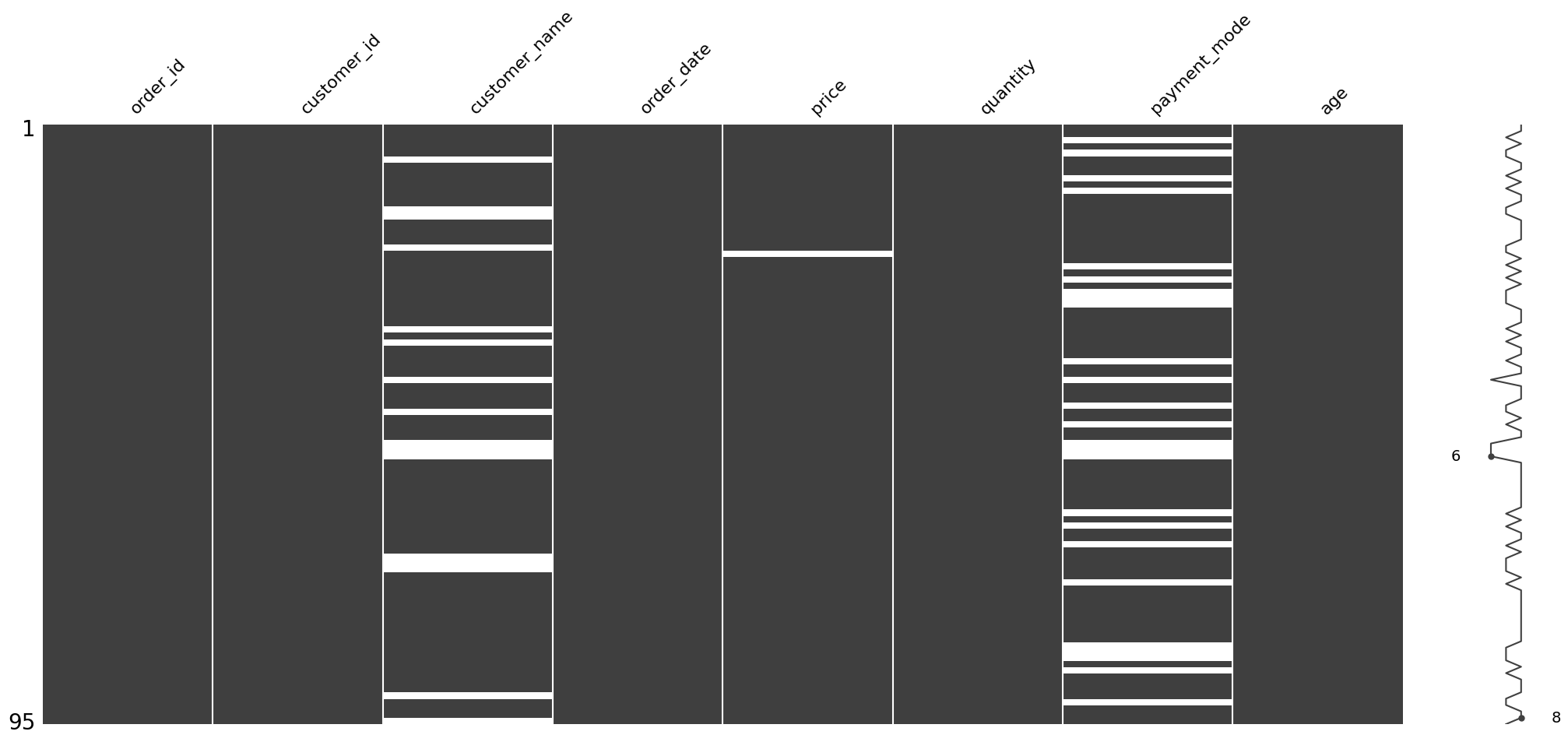

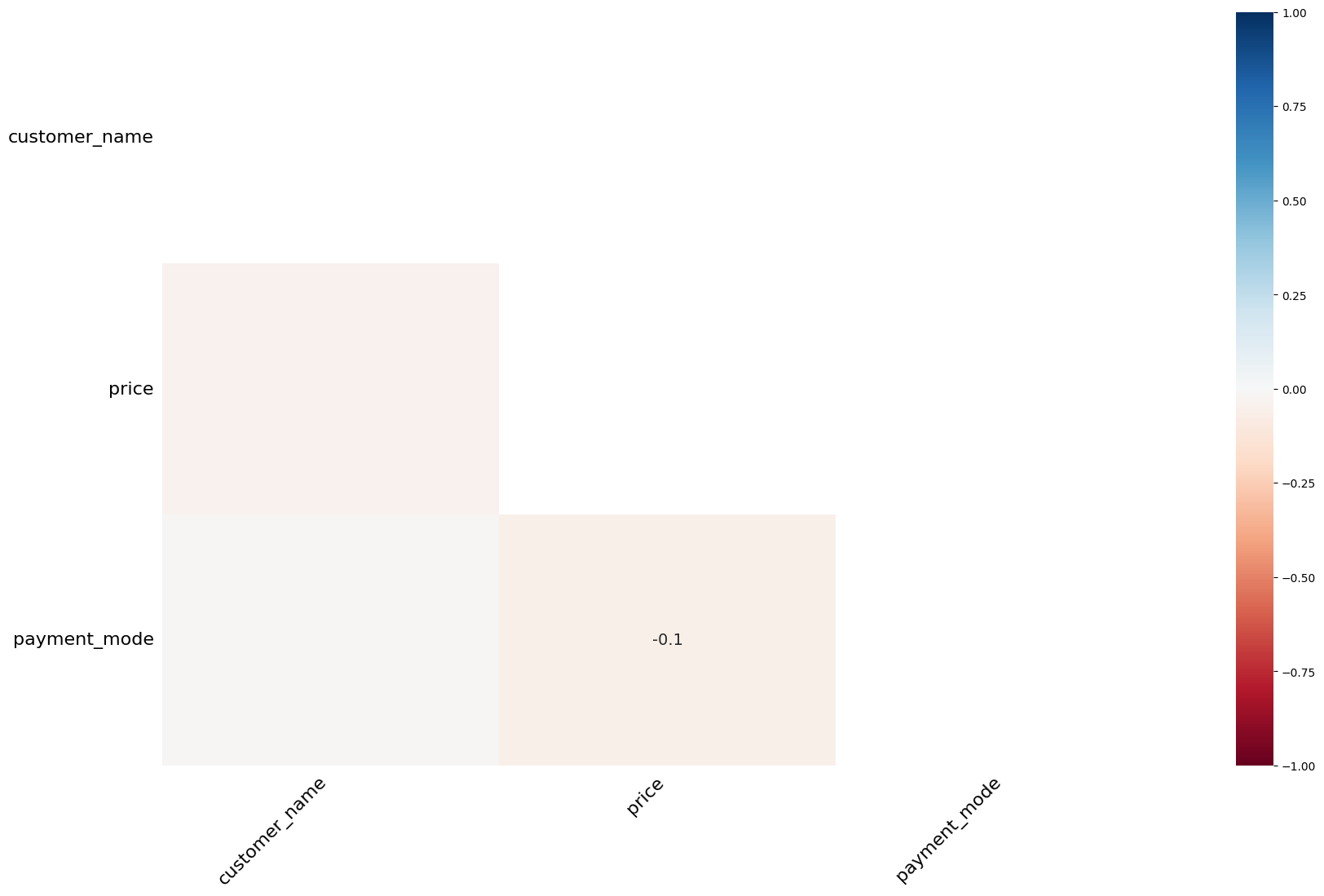

msno.matrix(df)

msno.heatmap(df)

- Matrix view shows where missingness occurs by row.

- Heatmap shows correlation of missingness across variables — helpful to detect if one variable’s absence predicts another’s.

Insight: Correlated missingness suggests a MAR or MNAR mechanism.

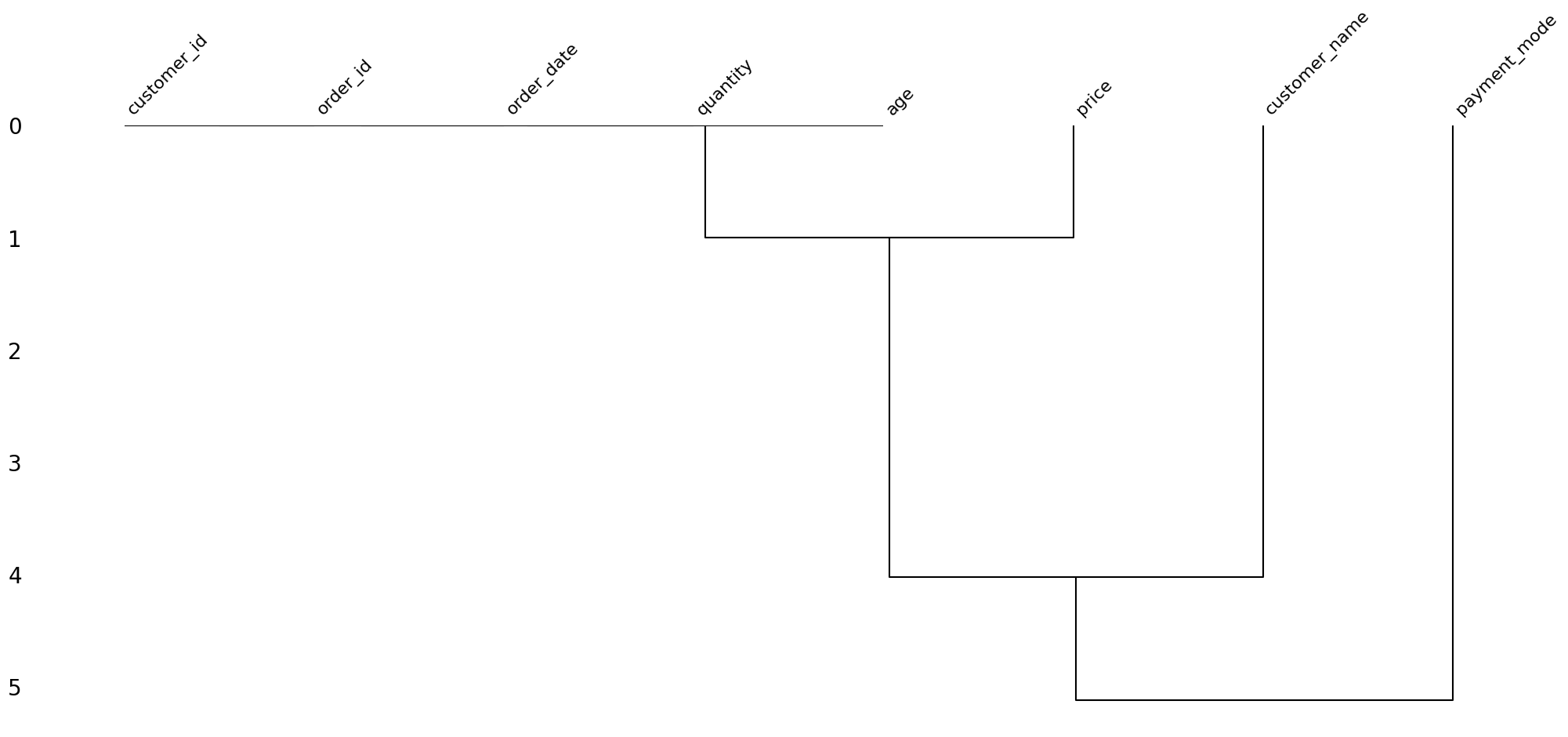

Tip: Use dendrograms to cluster variables with similar missingness patterns:

msno.dendrogram(df)

This can reveal groups of variables missing together (e.g., all fields in a form section), guiding imputation or data collection fixes. In production, visualize missingness trends over time to detect data pipeline issues, like API failures or schema changes.

2. Little’s MCAR Test

This is a statistical test of the null hypothesis that data is MCAR.

- H₀: Data is MCAR

- H₁: Data is not MCAR

from statsmodels.imputation import mice

from impyute.imputation.cs import mcar

p_value = mcar(df.values)

Interpretation: A small \(p\)-value leads us to reject MCAR — suggesting the need for more careful imputation strategies than mean substitution or listwise deletion.

Caution: Little’s test assumes normality and may lack power in small samples or with high missingness. Combine it with domain knowledge and visualizations. For example, if missingness correlates with a business event (e.g., a website outage), MCAR is unlikely.

Tool: For MAR/MNAR exploration, use logistic regression to model missingness:

import pandas as pd

df['missing_flag'] = df['target_var'].isnull().astype(int)

from sklearn.linear_model import LogisticRegression

model = LogisticRegression()

model.fit(df[['observed_var1', 'observed_var2']].dropna(), df['missing_flag'].dropna())

If coefficients are significant, missingness depends on observed variables (MAR). If no clear predictors emerge, suspect MNAR.

C. Strategies for Addressing Missing Data

Once we understand the nature of the missingness, we must decide how to address it. There are three principal strategies: deletion, imputation, and modeling the missingness itself.

1. Deletion Methods

a. Listwise Deletion

Remove entire rows with any missing values:

df_clean = df.dropna()

Pros:

- Simple and fast

- Valid under MCAR

Cons:

- Loss of data can be substantial

- Biased under MAR or MNAR

Insight: In production, listwise deletion is risky for real-time systems (e.g., fraud detection), as dropping rows can lead to delayed or missed decisions. Consider partial predictions or fallback imputations instead. Evaluate deletion’s impact on sample size and model performance via cross-validation.

b. Pairwise Deletion

Use available data for each variable pair in correlation or covariance calculations.

df.corr(method='pearson', min_periods=50)

Trade-offs:

- Retains more data than listwise

- Inconsistent sample sizes across analyses

- Not suitable for modeling pipelines

Note: Pairwise deletion is common in exploratory analysis but problematic in machine learning, as it disrupts consistent feature sets. Avoid in production pipelines where reproducibility is critical.

2. Simple Imputation

a. Mean/Median/Mode Imputation

df['age'].fillna(df['age'].mean(), inplace=True)

Use When:

- Data is MCAR or approximately symmetric

- Feature is not critical or deeply skewed

Drawbacks:

- Underestimates variance

- Biased estimates if data is MAR or MNAR

- Breaks correlation structure

Tip: For skewed data (e.g., income), use median imputation to avoid distortion. In production, cache precomputed statistics (e.g., mean/median) to ensure consistent imputation during inference, especially for streaming data.

b. Constant/Flag Imputation

Used when missingness is informative (e.g., product not used = 0).

df['num_logins'].fillna(0, inplace=True)

df['num_logins_missing'] = df['num_logins'].isnull().astype(int)

Best Practice: Add a binary “missingness indicator” column to preserve the signal of missingness.

Insight: In domains like marketing, missingness flags can be powerful features. For example, a missing “last_purchase_date” might indicate churn risk. However, overusing flags can increase dimensionality, so validate their predictive value via feature importance analysis.

3. Advanced Model-Based Imputation

These methods better preserve distributions, correlations, and are robust to MAR.

a. KNN Imputation

Find the \(k\)-nearest neighbors based on complete features and impute the average/mode.

from sklearn.impute import KNNImputer

imputer = KNNImputer(n_neighbors=5)

df_imputed = imputer.fit_transform(df)

Assumption: Similar rows have similar values

Cons: Sensitive to scaling, doesn’t handle MNAR, computationally expensive for large datasets

Tip: Standardize features before KNN imputation to ensure fair distance calculations. For large datasets, use approximate nearest neighbors (e.g., Annoy or HNSW) to reduce computation time. In production, precompute neighbor indices for static datasets to speed up inference.

b. Iterative Imputer (Multivariate Imputation)

Each feature with missing values is regressed on other features in a round-robin fashion.

from sklearn.experimental import enable_iterative_imputer

from sklearn.impute import IterativeImputer

imputer = IterativeImputer()

df_imputed = imputer.fit_transform(df)

How It Works:

- Imputes initial values (e.g., mean)

- Regresses missing column on observed columns

- Cycles through columns iteratively

Special Case: MICE (Multiple Imputation by Chained Equations) — generates multiple imputed datasets, builds models on each, and pools results.

Insight: MICE is ideal for statistical inference (e.g., clinical trials) but less practical for single-model machine learning pipelines. In production, use IterativeImputer with a fixed random seed for reproducibility. Test imputation stability by comparing results across multiple runs.

c. Random Forest Imputation

Nonlinear, ensemble-based method — more flexible than KNN.

from missingpy import MissForest

imputer = MissForest()

df_imputed = imputer.fit_transform(df)

Pros:

- Handles mixed-type data

- Captures nonlinear interactions

Cons:

- Slower

- Sensitive to hyperparameters

Tip: Tune MissForest’s hyperparameters (e.g., number of trees) using cross-validation to balance accuracy and speed. For mixed-type data, encode categoricals carefully to avoid bias. In production, consider lighter alternatives like KNN for low-latency systems.

d. Interpolation (Time Series)

Suitable for temporally indexed data:

df['temp'].interpolate(method='linear', inplace=True)

Variants: linear, spline, polynomial, time-weighted

Use Cases: Sensor logs, medical monitoring, financial series

Insight: For irregular time series (e.g., stock trades), use time-weighted interpolation to account for uneven gaps. In production, handle missing data at the edge (e.g., latest sensor reading) with forward-fill or model-based forecasting. Validate interpolation by checking for unrealistic jumps in imputed values.

e. Deep Learning-Based Imputation

Neural networks, such as autoencoders or GANs, can model complex patterns for imputation.

from datawig import SimpleImputer

imputer = SimpleImputer(input_columns=['col1', 'col2'], output_column='target')

imputer.fit(df)

df_imputed = imputer.predict(df)

Use Cases: High-dimensional data, nonlinear relationships (e.g., image or text embeddings).

Pros: Captures complex dependencies.

Cons: Requires large datasets, computationally intensive, harder to interpret.

Tip: Use deep learning imputation for datasets with rich features (e.g., user behavior logs). In production, deploy pre-trained imputation models to avoid retraining on new data.

D. Choosing the Right Strategy: A Decision Framework

| Condition | Suggested Strategy |

|---|---|

| Data is MCAR | Listwise deletion, mean/median imputation |

| MAR with strong predictors | MICE, Iterative Imputer, Random Forest |

| Time-series data | Interpolation (linear/spline) |

| High missingness + important variable | Avoid deletion, use MICE or domain-driven imputation |

| Categorical variable (few levels) | Mode imputation or indicator encoding |

| Missingness is informative | Constant + missing flag |

| Data is suspected MNAR | Model missingness explicitly or seek external data |

Decision Factors:

- Data Size: For small datasets (<1,000 rows), avoid complex imputations like MICE due to overfitting risk. Use simple methods or deletion if MCAR.

- Feature Importance: Prioritize advanced imputation for high-impact features (e.g., revenue predictors in sales models).

- Latency Requirements: In real-time systems (e.g., recommendation engines), prefer fast methods like constant imputation or precomputed KNN.

- Regulatory Constraints: In healthcare or finance, document imputation assumptions for compliance (e.g., FDA audits).

Example: In a credit scoring model, if “debt-to-income ratio” is missing for 20% of applicants and suspected MNAR (e.g., high-debt applicants avoid reporting), use a combination of external data (e.g., credit bureau scores) and sensitivity analysis to assess imputation bias.

E. Practical Considerations for Model Performance and Production

1. Imputation Affects Distributions

Imputation alters marginal and joint distributions. For instance, mean imputation compresses variance and weakens correlation estimates.

Always re-check distributions:

import seaborn as sns

sns.histplot(df['feature'], kde=True)

sns.histplot(df_imputed['feature'], kde=True)

Tip: Use Kolmogorov-Smirnov or Wasserstein distance tests to quantify distribution shifts post-imputation:

from scipy.stats import ks_2samp

statistic, p_value = ks_2samp(df['feature'].dropna(), df_imputed['feature'])

If shifts are significant, consider alternative imputations or feature engineering.

2. Pipeline Integration

Imputation should be part of a reproducible modeling pipeline.

from sklearn.pipeline import Pipeline

pipeline = Pipeline([

("imputer", IterativeImputer()),

("scaler", StandardScaler()),

("model", LogisticRegression())

])

This ensures no leakage and consistent handling between train/test and production.

Insight: Use ColumnTransformer for feature-specific imputation:

from sklearn.compose import ColumnTransformer

preprocessor = ColumnTransformer([

('num_impute', IterativeImputer(), ['age', 'income']),

('cat_impute', SimpleImputer(strategy='most_frequent'), ['gender'])

])

pipeline = Pipeline([('preprocessor', preprocessor), ('model', LogisticRegression())])

This allows tailored imputation per feature type, improving accuracy and interpretability.

3. Handling in Production Systems

- Consistency: Same imputation logic must be deployed.

- Monitoring: Track imputation frequency as a metric (e.g., high imputation rates may signal data collection failure).

- Fallbacks: Set default values or alerts if imputation fails (e.g., in real-time scoring).

Advice:

- Versioning: Store imputation parameters (e.g., mean values, trained imputers) in a model registry to ensure consistency across deployments.

- Drift Detection: Monitor input data for changes in missingness patterns (e.g., new API fields). Use tools like Evidently AI:

from evidently.report import Report

from evidently.metric import DataDriftPreset

report = Report(metrics=[DataDriftPreset()])

report.run(reference_data=df_train, current_data=df_prod)

- Error Handling: In streaming systems, implement retry logic for transient missingness (e.g., API timeouts) before falling back to imputation.

- Business Alignment: Engage stakeholders to define acceptable imputation strategies. For example, in fraud detection, aggressive imputation might mask suspicious patterns, so prefer deletion or flags.

Example: In a retail recommendation system, missing “user ratings” might be imputed with a user’s average rating. Monitor imputation rates weekly; a spike could indicate a UI bug preventing rating submissions.

F. Common Pitfalls and How to Avoid Them

To ensure robust handling of missing data, avoid these frequent mistakes:

-

Ignoring Missingness Mechanisms: Assuming MCAR without diagnostics can lead to biased models. Solution: Use visualizations, Little’s test, and domain knowledge to assess MAR/MNAR.

-

Over-Imputing: Applying complex imputations to features with >50% missingness can introduce noise. Solution: Consider dropping such features or using missingness flags unless external data is available.

-

Data Leakage: Imputing test data using train+test statistics skews performance. Solution: Fit imputers on training data only within a pipeline.

-

Neglecting Downstream Impact: Imputation can affect model interpretability (e.g., SHAP values). Solution: Validate model explanations post-imputation and document changes.

-

Static Imputation in Dynamic Systems: Using fixed imputations (e.g., historical means) in evolving datasets can misrepresent trends. Solution: Periodically retrain imputers or use online learning techniques.

Example: A churn prediction model used mean imputation for “time since last login,” ignoring that missing values often indicated inactive users (MNAR). This led to underestimating churn risk. Using a missingness flag and Random Forest imputation improved AUC by 5%.

G. Concluding Insight

Handling missing data is not merely about filling blanks — it’s a philosophical and statistical commitment. Every imputation carries an assumption. Every deletion encodes a belief about irrelevance. To treat missing data with rigor is to respect the epistemic uncertainty in real-world datasets and build models that do not lie about what they do not know.

Reflection: The best missing data strategy balances statistical rigor with operational constraints. Engage domain experts to validate assumptions, monitor imputation effects in production, and iterate as new data or business needs emerge. By treating missingness as a signal rather than noise, you can uncover insights that drive better decisions.

In our next section, we will explore a different kind of anomaly — outliers — and how their detection and treatment shapes the robustness and sensitivity of your analysis.

Outlier Treatment: Detection and Decisions Under Uncertainty

Outliers are edge cases. They are statistical aberrations — values that lie far from the central mass of a distribution. But more importantly, they are epistemic events. They challenge assumptions, provoke skepticism, and force analysts to ask whether what they are observing is an error, a rare truth, or something in between.

In real-world data, outliers are everywhere. They may be typos in clinical records, fraudulent spikes in credit card transactions, sensor glitches in IoT devices, or just rare but valid phenomena like billion-dollar business deals. Hence, outlier detection and treatment is not just a cleanup step — it is a form of statistical storytelling, and must be approached with both rigor and contextual intelligence.

This section will equip you with the mathematical tools, algorithmic frameworks, and practical heuristics necessary to identify and handle outliers in structured datasets. We’ll start with detection — both statistical and model-based — and then move into various strategies for treatment and domain-sensitive decision-making.

A. Outlier Detection

Outlier detection is the act of identifying observations that deviate significantly from the rest of the data under some notion of distance, density, or distributional probability. The key is to define a reference norm — a measure of what constitutes “normal” — and then compute how far each data point lies from it.

A.1 Statistical Methods

These approaches rely on assumptions about the underlying distribution (typically Gaussian) and summary statistics like the mean and standard deviation.

1. Z-score Method (Standard Score)

If a feature \(X\) follows a normal distribution \(\mathcal{N}(\mu, \sigma^2)\), its Z-score for value \(x\) is:

z = \frac{x - \mu}{\sigma}

| Typical rule of thumb: flag outliers when $$ | z | > 3$$ |

from scipy.stats import zscore

z = zscore(df['feature'])

df_outliers = df[(z > 3) | (z < -3)]

Limitation: Sensitive to skewed distributions and already-influential outliers that distort \(\mu\) and \(\sigma\).

2. IQR Method (Interquartile Range)

The IQR is a robust, non-parametric method.

Define:

- \[Q_1 = \text{25th percentile}\]

- \[Q_3 = \text{75th percentile}\]

- \[IQR = Q_3 - Q_1\]

A data point is an outlier if:

x < Q_1 - 1.5 \cdot IQR \quad \text{or} \quad x > Q_3 + 1.5 \cdot IQR

Q1 = df['feature'].quantile(0.25)

Q3 = df['feature'].quantile(0.75)

IQR = Q3 - Q1

outliers = df[(df['feature'] < Q1 - 1.5 * IQR) | (df['feature'] > Q3 + 1.5 * IQR)]

3. Mahalanobis Distance (Multivariate)

For multivariate normal data \(\mathbf{X} \sim \mathcal{N}(\mu, \Sigma)\), the Mahalanobis distance of a point \(\mathbf{x}\) is:

D^2(\mathbf{x}) = (\mathbf{x} - \mu)^\top \Sigma^{-1} (\mathbf{x} - \mu)

Outliers can be identified by comparing \(D^2\) to a \(\chi^2\) distribution with \(p\) degrees of freedom.

from scipy.spatial import distance

from numpy.linalg import inv

X = df[['x1', 'x2', 'x3']].values

mean = np.mean(X, axis=0)

cov = np.cov(X, rowvar=False)

inv_cov = inv(cov)

mahal = [distance.mahalanobis(x, mean, inv_cov) for x in X]

Limitation: Highly sensitive to multicollinearity and covariance matrix stability.

A.2 Model-Based Methods

These approaches are more flexible and assume no strict parametric form.

1. Isolation Forest

Builds random binary trees by partitioning the data. Outliers get isolated faster (i.e., require fewer splits).

from sklearn.ensemble import IsolationForest

iso = IsolationForest(contamination=0.05)

df['outlier_flag'] = iso.fit_predict(df[features])

2. Local Outlier Factor (LOF)

Uses k-nearest neighbors to estimate local densities. Points with substantially lower local density than their neighbors are flagged.

from sklearn.neighbors import LocalOutlierFactor

lof = LocalOutlierFactor(n_neighbors=20)

df['outlier_flag'] = lof.fit_predict(df[features])

3. One-Class SVM

Trains a model on “normal” data and classifies anything outside the learned region as an anomaly.

from sklearn.svm import OneClassSVM

svm = OneClassSVM(kernel='rbf', nu=0.05)

df['outlier_flag'] = svm.fit_predict(df[features])

B. Outlier Handling Strategies

Detection is only half the battle. Once identified, we must decide how to handle outliers. This depends on domain knowledge, model sensitivity, and statistical goals.

B.1 Trimming (Removal)

Simply remove outliers if they are likely errors or if your model is highly sensitive (e.g., OLS regression).

df_trimmed = df[df['feature'] <= threshold]

Risk: May discard rare but meaningful patterns.

B.2 Winsorization

Cap extreme values at fixed percentiles (e.g., 5th and 95th):

from scipy.stats.mstats import winsorize

df['feature'] = winsorize(df['feature'], limits=[0.05, 0.05])

Use Case: Retain sample size while reducing influence of extremes.

B.3 Capping (Custom Winsorization)

Cap values at domain-defined thresholds.

df['income'] = df['income'].clip(lower=1000, upper=50000)

Useful When: Domain knowledge provides reasonable bounds.

B.4 Transformation

Apply transformations to reduce skew and compress extreme values.

df['log_feature'] = np.log1p(df['feature']) # log(x + 1)

df['sqrt_feature'] = np.sqrt(df['feature'])

Works well for skewed distributions or features with long tails (e.g., income, transaction size).

B.5 Robust Scaling

Use robust statistics (median and IQR) instead of mean and standard deviation for scaling:

from sklearn.preprocessing import RobustScaler

scaler = RobustScaler()

df_scaled = scaler.fit_transform(df)

Benefit: Reduces impact of extreme values during normalization.

C. Domain-Specific Strategies

In practice, outlier treatment depends on why the outlier exists.

1. Fraud Detection

Outliers are the signal. Don’t remove them; instead, model them.

- Use unsupervised outlier detection as feature engineering

- Augment with domain-specific metadata (e.g., IP address, merchant ID)

2. Sensor or IoT Data

Sensors may spike due to environmental or hardware noise.

- Use rolling median filters

- Impute with interpolation or smoothing

3. Financial Transactions

High-variance is expected; outliers might represent VIP customers.

- Cap but retain indicator flags for modeling (e.g.,

is_high_txn) - Avoid aggressive removal that destroys predictive signal

Suppose we’re analyzing vital signs for ICU patients:

- Missing values in lab test results can be imputed using MICE.

- Heart rate above 220 or below 30 is likely an error — remove.

- Outlier glucose readings (e.g., > 600 mg/dL) could indicate emergencies — keep, but flag.

- Log-transform creatinine levels to reduce right skew.

- Add derived features: e.g.,

is_extreme_bp = 1if blood pressure outside 90–180 mmHg.

This hybrid approach — combining trimming, transformation, imputation, and domain-specific flagging — reflects best practices in medical analytics.

Outliers are not necessarily bad data — they are unusual data. Whether they’re noise or novelty depends on your modeling goals and domain constraints. A robust data scientist does not simply discard what doesn’t fit; they study it, model it, and only then decide what to preserve and what to discard.

Data Consistency: Fixing the Semantics of Structure

Consistency in data is often overlooked because it’s subtle. Unlike missing values or glaring outliers, inconsistencies don’t crash scripts or trigger red flags. Instead, they quietly undermine trust in the data — duplicate categories that should be the same, misspellings that inflate cardinality, and silent type coercions that confuse logic. In practice, these issues can propagate misleading trends, distort distributions, and severely impact models that rely on categorical representations or feature encoding.

This section focuses on semantic and categorical consistency — identifying and reconciling variations that are logically equivalent but syntactically distinct.

A. The Nature of Inconsistency

Let’s define a dataset as a set of \(n\) records, each represented as a vector \(\mathbf{x}_i \in \mathbb{R}^p \cup \mathcal{C}^q\), where the continuous features lie in real-valued space and the categorical features lie in a discrete set of categories.

Consistency requires that:

- Identical concepts map to a single canonical representation

- Feature values conform to declared formats and expected hierarchies

- No two distinct values convey the same real-world meaning

Inconsistencies often arise due to:

- Human data entry (typos, casing, spacing)

- Multiple systems with overlapping but non-aligned taxonomies

- Language or cultural variants (e.g., color vs. colour)

- Implicit missing values (e.g., “N/A”, “none”, “missing”)

B. Fixing Typos and Spelling Variants

1. Standardizing Text Case and Stripping Whitespace

df['state'] = df['state'].str.strip().str.lower()

Why? “California “, “california”, and “California” should all collapse to a single category.

2. Fuzzy Matching for Misspelled Categories

When typos or variants are frequent:

from fuzzywuzzy import process

unique_vals = df['employer_name'].unique()

process.extract("international business machines", unique_vals, limit=5)

Use a fuzzy matching threshold (e.g., 90%) to map close variants to a canonical label.

3. String Normalization (Regex-Based)

Useful for removing punctuation or standardizing formats:

import re

df['product_code'] = df['product_code'].str.replace(r'[^A-Za-z0-9]', '', regex=True).str.upper()

C. Consolidating Categories

When categorical features contain many levels, consolidation improves signal-to-noise ratio and reduces model complexity.

1. Mapping Synonyms or Hierarchies

Example: grouping education levels

education_map = {

'high school diploma': 'high school',

'hs diploma': 'high school',

'bachelors': 'undergrad',

'ba': 'undergrad', 'b.sc': 'undergrad',

'masters': 'postgrad',

'phd': 'doctorate'

}

df['education'] = df['education'].map(lambda x: education_map.get(x.lower(), x.lower()))

2. Binning Rare Categories

Group low-frequency categories under a common label:

counts = df['category'].value_counts()

threshold = 100

df['category'] = df['category'].apply(lambda x: x if counts[x] > threshold else 'Other')

This reduces dimensionality in one-hot or target encoding.

3. Hierarchical Collapsing

Use business-defined hierarchies:

| Raw Category | Mapped Category |

|---|---|

| visa, mastercard | Credit Card |

| upi, phonepe | UPI |

| cash, cheque | Offline |

D. Detecting Inconsistencies

1. Unexpected Cardinality

Check whether a feature with expected low cardinality has too many unique values:

df['gender'].value_counts(dropna=False)

If gender has entries like “Male”, “male”, “MALE”, “m”, “M”, it indicates inconsistent encodings.

2. Cross-Field Logical Checks

E.g., “employment_status” = “unemployed” and “employer_name” ≠ null → inconsistent

df.loc[(df['employment_status'] == 'unemployed') & (df['employer_name'].notna())]

E. Practical Recommendations

| Problem Type | Strategy |

|---|---|

| Inconsistent casing or spacing | .str.lower(), .str.strip() |

| Misspellings | Fuzzy matching, manual mapping |

| Redundant categories | Mapping to canonical forms |

| Rare categories | Binning or grouping into "Other" |

| Structured inconsistencies | Cross-field rules and validations |

| Ambiguous nulls (e.g., “none”) | Convert to np.nan, then handle missingness |

F. Why It Matters for Modeling

- Encoding Inflation: “USA”, “usa”, and “United States” will be treated as distinct categories in one-hot encoding.

- Data Leakage: Conflicting categories may split the statistical strength across variants.

- Model Robustness: Algorithms like decision trees are sensitive to splits on inconsistent categories.

G. Case Example: Cleaning Survey Responses

Let’s say you’re analyzing survey responses on employment.

Raw Data:

| Respondent | Employment_Status | Employer |

|---|---|---|

| 1 | Employed | |

| 2 | EMPLOYED | google inc. |

| 3 | Unemployed | None |

| 4 | unemployed | Amazon |

| 5 | Working | AMAZON |

Fixes:

- Normalize casing:

.str.lower() - Consolidate employment statuses: map “working” → “employed”

- Normalize employer names: map “google inc.” → “google”

- Logical flag: Row 4 violates rule (unemployed with employer name)

Wrapping Up

If data science is a bridge between raw information and intelligent decision-making, then data cleaning is the engineering beneath that bridge — unseen, but indispensable. In this second installment of the preprocessing series, we didn’t just learn to “fix messy data” — we learned to interrogate it, to listen to what its structure, gaps, and anomalies reveal about how it was collected, what it represents, and how it might mislead us if left unexamined.

We began with the fundamentals of data quality — not just as a checklist, but as a mindset. Enforcing type constraints, removing duplicates, validating relationships — these aren’t technicalities, they’re truth tests. If your data says one thing in one row and contradicts itself in another, then it is not yet ready to teach your model anything meaningful.

Then we moved into missingness — a topic that looks deceptively simple. But behind those blank cells lies a rich statistical terrain. Knowing whether data is Missing Completely at Random (MCAR), at Random (MAR), or Not at Random (MNAR) is not just academic — it shapes whether imputation helps or hurts, whether you can model naively or must acknowledge hidden bias. And the tools we explored — from simple means to model-based imputers — are not silver bullets, but levers to be used with care and context.

Outliers, too, required a careful touch. We learned how detection isn’t about drawing arbitrary lines, but about understanding what “normal” means for your data — and whether deviations are errors, exceptions, or the very insights you’re after. And we saw how trimming, winsorizing, or scaling each carry assumptions that must be matched to domain and use case.

Finally, we zoomed in on consistency — those quiet mismatches in category labels, typos, and semantic drift that silently corrupt analysis. A variable like “gender” with five different spellings for “male” will fool your model into thinking it’s diverse, when it’s simply disorganized. Cleaning isn’t about aesthetic neatness. It’s about epistemological clarity.

So what have we really done in this chapter?

We’ve developed an intuition — not just for tools and techniques, but for how to think like a data custodian. Every missing value, duplicate entry, or misspelled label is a signpost, not an obstacle. It’s the data talking back to us.

Let’s keep going to the next chapter of our Data Preprocessing series — Data Transformation.